The Role of AI in Software Testing

A few months ago, Google revealed that AI in software testing cut defect leakage by nearly 40% in one of its large-scale enterprise applications. That’s nearly 40% fewer bugs reaching production, which means fewer costly rollbacks, stronger user trust, and a real competitive edge.

If your competitors are using AI in testing while you still rely on traditional regression cycles, you are already at a disadvantage. Can you really compete in the CI/CD era?

Software development is moving faster than ever, and CI/CD pipelines demand instant validation. Yet manual testing slows releases, and scripted automation is brittle: a single broken locator can collapse an entire suite.

This is where AI steps in. It auto-heals locators, predicts flaky tests, and flags risky code changes, so testers spend less time firefighting and more time on creative, exploratory testing. Think of AI as your co-pilot, handling the repetitive, error-prone work while you focus on intuition, insight, and real problem-solving.

And while these gains are visible in daily testing tasks, the real transformation happens at a larger scale, across the entire Software Testing Life Cycle (STLC). So, how exactly does AI reshape the STLC, and what should decision-makers like you know before adopting it?

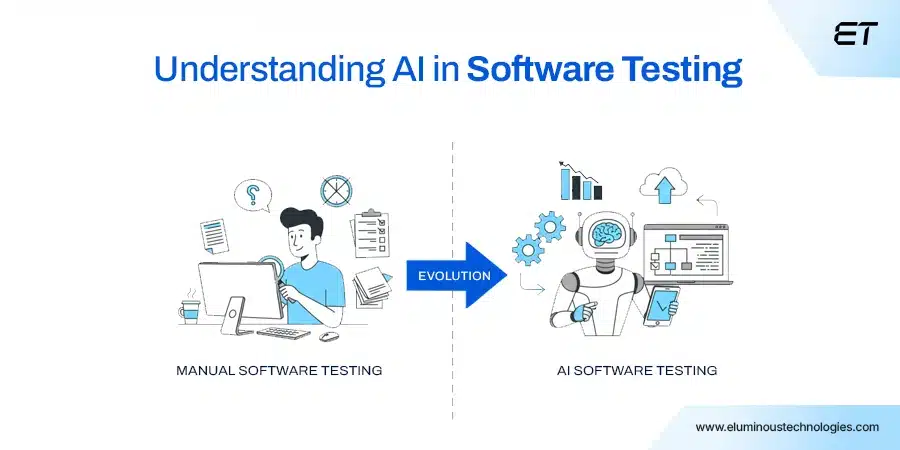

What is AI in Software Testing?

AI-powered testing brings learning, reasoning, and pattern recognition to quality assurance. It goes beyond rigid scripts to create test systems that adapt on their own, predict risks, and even recover when things break.

Now, you might be wondering: isn’t automation already supposed to solve these problems? That’s a fair question.

The reality is, traditional automation has its limits. It executes what it is told, but it does not learn from past runs or predict future risks, and it certainly does not heal itself when things break. AI changes that equation.

Here is a side-by-side comparison of manual and traditional automated testing vs. AI-powered testing to make it clear:

| Aspect | Manual / Traditional Automation | AI-Powered Testing |
| --- | --- | --- |
| Speed | Slow, repetitive execution | Adaptive, learns in real time |
| Coverage | Limited to predefined test cases | Dynamically expands with app behavior |
| Accuracy | Human error-prone, fragile locators | Self-healing scripts, reduced false positives |
| Scalability | Difficult to scale across large, complex systems | Learns patterns, scales seamlessly |
| Maintenance | High effort when UIs change | Auto-fixes locators, drastically reducing overhead |


How AI in Software Testing Works

Leading organizations are investing in AI-driven quality engineering to accelerate release cycles, cut defect leakage, and improve test reliability at scale. But the critical question remains: how does AI in software testing actually work?

Machine Learning (ML)

Think of ML as that one QA team member who never forgets. It remembers every bug your system has ever had and uses that memory to predict where the next one might appear.

By analyzing code churn, complexity, and defect density, algorithms such as random forests and neural networks assign “risk scores” to different modules. The result? Your team knows where to focus testing instead of spreading its effort thin.
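To make the idea of a risk score concrete, here is a minimal Python sketch. It swaps the trained random forest or neural network for a simple weighted sum of min-max-normalized metrics, so the weights, module names, and numbers are all hypothetical; a real model would learn the weights from labeled defect history.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class ModuleMetrics:
    name: str
    churn: int             # lines changed in the recent window
    complexity: float      # e.g. average cyclomatic complexity
    defect_density: float  # historical defects per KLOC

# Hypothetical weights; a trained model would learn these from labeled history.
WEIGHTS = {"churn": 0.4, "complexity": 0.3, "defect_density": 0.3}

def _normalize(values: List[float]) -> List[float]:
    """Min-max scale values into [0, 1]; all-equal inputs map to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]

def risk_scores(modules: List[ModuleMetrics]) -> Dict[str, float]:
    """Weighted sum of normalized metrics per module; higher means riskier."""
    scaled = {key: _normalize([getattr(m, key) for m in modules]) for key in WEIGHTS}
    return {
        m.name: sum(weight * scaled[key][i] for key, weight in WEIGHTS.items())
        for i, m in enumerate(modules)
    }

modules = [
    ModuleMetrics("payments", churn=1200, complexity=14.0, defect_density=3.1),
    ModuleMetrics("search", churn=300, complexity=6.5, defect_density=0.8),
    ModuleMetrics("profile", churn=50, complexity=4.0, defect_density=0.2),
]
ranked = sorted(risk_scores(modules).items(), key=lambda kv: kv[1], reverse=True)
for name, score in ranked:
    print(f"{name}: {score:.2f}")
```

With these made-up figures, `payments` ranks first and `profile` last, which is the ordering a team would use to decide where to test first.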

Natural Language Processing (NLP)

How many times have business requirements been misunderstood in testing? NLP eases that pain. It takes plain English like “Login with an invalid password and check for the error message” and converts it into an executable test script.

Using techniques such as tokenization, intent recognition, and entity extraction, NLP lets business analysts and product managers create test cases directly, without writing code.

This bridges the gap between business and QA, saving both time and frustration.
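The tokenization, intent recognition, and entity extraction steps described above can be sketched with nothing more than regular expressions. This is a rule-based stand-in for a statistical NLP model: the intent patterns, the `page` object in the generated script, and the demo credentials are hypothetical and would come from your own test framework.

```python
import re
from typing import Dict, List

# Hypothetical intent grammar: one pattern per intent, entities as named groups.
INTENTS = {
    "login": re.compile(r"^log ?in with an? (?P<credential>valid|invalid) password$"),
    "assert_message": re.compile(r"^check for (?:the |an? )?(?P<kind>error|success) message$"),
}

def tokenize(text: str) -> List[str]:
    """Lowercase the text and keep word tokens only."""
    return re.findall(r"[a-z]+", text.lower())

def split_clauses(tokens: List[str]) -> List[str]:
    """Split tokens into clauses on the connectives 'and' / 'then'."""
    clauses, current = [], []
    for token in tokens:
        if token in ("and", "then"):
            if current:
                clauses.append(" ".join(current))
            current = []
        else:
            current.append(token)
    if current:
        clauses.append(" ".join(current))
    return clauses

def to_test_steps(requirement: str) -> List[Dict[str, str]]:
    """Recognize one intent per clause and extract its entities."""
    steps = []
    for clause in split_clauses(tokenize(requirement)):
        for intent, pattern in INTENTS.items():
            match = pattern.match(clause)
            if match:
                steps.append({"intent": intent, **match.groupdict()})
                break
        else:
            raise ValueError(f"Unrecognized step: {clause!r}")
    return steps

def render(steps: List[Dict[str, str]]) -> str:
    """Emit script lines against a hypothetical page object."""
    lines = []
    for step in steps:
        if step["intent"] == "login":
            password = "wrong-password" if step["credential"] == "invalid" else "correct-password"
            lines.append(f'page.login("demo_user", "{password}")')
        elif step["intent"] == "assert_message":
            lines.append(f'assert page.{step["kind"]}_message_visible()')
    return "\n".join(lines)

steps = to_test_steps("Login with an invalid password and check for the error message")
print(render(steps))
```

Production tools replace the hand-written patterns with trained models, but the pipeline shape (tokens, intents, entities, script) is the same.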

Computer Vision

Automation might say “pass,” but your users still complain that a button is not visible or that the layout looks broken on mobile. That is where computer vision steps in.

By using convolutional neural networks (CNNs) and image-diff algorithms, AI checks screens pixel by pixel across devices. It validates not just that the application “performs correctly” but that it looks right to the end user. In other words, it tests the experience, not just the code.
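A minimal sketch of the image-diff half of this idea, assuming screenshots have already been decoded into nested lists of RGB tuples (a real pipeline would capture them with a browser or device tool). It compares pixels directly with a per-channel tolerance rather than using a CNN, and the tolerance and failure threshold are illustrative assumptions.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels

def diff_ratio(baseline: Image, candidate: Image, tolerance: int = 16) -> float:
    """Fraction of pixels whose largest channel difference exceeds tolerance."""
    if len(baseline) != len(candidate) or any(
        len(a) != len(b) for a, b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have identical dimensions")
    total = changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for pa, pb in zip(row_a, row_b):
            total += 1
            if max(abs(ca - cb) for ca, cb in zip(pa, pb)) > tolerance:
                changed += 1
    return changed / total if total else 0.0

WHITE, BLACK = (255, 255, 255), (0, 0, 0)
baseline = [[WHITE] * 4 for _ in range(4)]
candidate = [row[:] for row in baseline]
candidate[0][0] = (250, 250, 250)      # rendering noise, within tolerance
for y in (2, 3):
    for x in (2, 3):
        candidate[y][x] = BLACK        # a genuine visual change

ratio = diff_ratio(baseline, candidate)
print(f"{ratio:.0%} of pixels changed")  # 25% of pixels changed
if ratio > 0.01:                       # hypothetical failure threshold
    print("Visual regression detected")
```

The tolerance keeps anti-aliasing noise from failing the check, which is the main source of false positives in naive pixel comparison.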


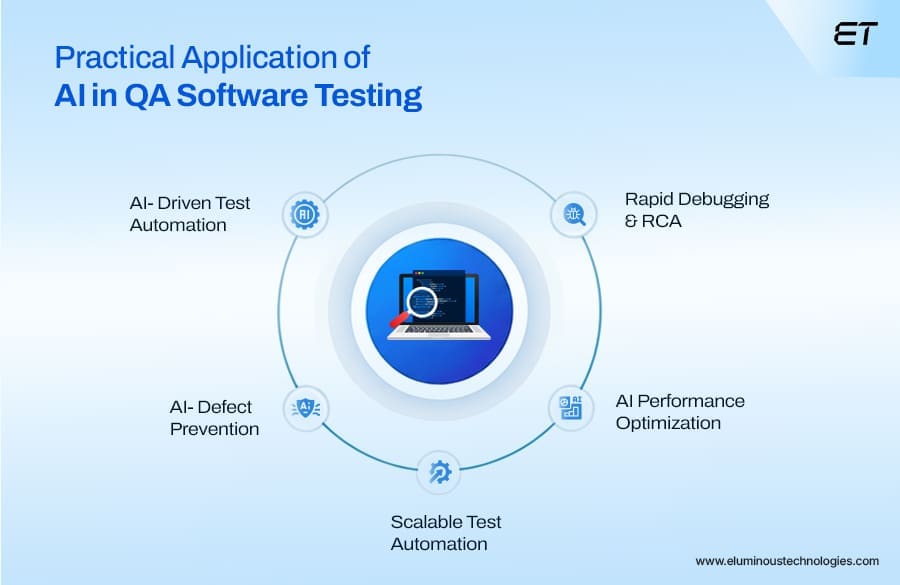

Key Use Cases and Applications of AI in Software Testing

The real value of AI in testing lies in its ability to drive business benefits.

Faster releases, reduced maintenance, higher coverage, and fewer defects in production all translate directly into cost savings and customer trust. Let’s explore where the biggest wins are happening.

Autonomous Test Case Generation

Traditional test design is time-consuming and often misses edge cases. With Generative AI in software testing, applications can be scanned for code changes, user flows, and behavioral patterns to automatically generate comprehensive test scenarios.

The benefit? Coverage expands without adding headcount. In fact, enterprises using autonomous generation report up to a 30% boost in test coverage within a single release cycle. It helps them catch risks earlier and improve release confidence.
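Autonomous generation tools typically start from a model of how users move through the application. The sketch below replaces the generative model with classic model-based path enumeration: given a hypothetical flow graph (in practice mined from analytics or a crawler), it lists every cycle-free path from the entry screen to a terminal screen, and each path becomes a candidate test scenario.

```python
from typing import Dict, List

# Hypothetical user-flow model: screen -> screens reachable in one action.
FLOW: Dict[str, List[str]] = {
    "home": ["search", "login"],
    "login": ["home", "account"],
    "search": ["product"],
    "product": ["cart"],
    "cart": ["checkout"],
    "account": [],
    "checkout": [],
}

def generate_scenarios(flow: Dict[str, List[str]], start: str, max_depth: int = 8) -> List[List[str]]:
    """Enumerate cycle-free paths from start to every terminal screen."""
    scenarios: List[List[str]] = []

    def walk(screen: str, path: List[str]) -> None:
        if not flow.get(screen):          # no outgoing actions: scenario complete
            scenarios.append(path)
            return
        if len(path) >= max_depth:        # give up on overly long journeys
            return
        for nxt in flow[screen]:
            if nxt not in path:           # skip loops such as login -> home
                walk(nxt, path + [nxt])

    walk(start, [start])
    return scenarios

for scenario in generate_scenarios(FLOW, "home"):
    print(" -> ".join(scenario))
```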

Self-Healing Test Scripts

One of the biggest frustrations in QA is script maintenance. A minor UI change, like a renamed button, can break dozens of tests. AI-powered self-healing scripts remove much of this overhead by detecting locator changes in real time and updating them automatically.

Organizations adopting this approach have seen maintenance efforts drop by 70–80%, freeing teams to focus on strategic testing rather than firefighting broken scripts.
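A minimal sketch of the self-healing idea, assuming each UI element is represented as a dictionary of attributes (this is not a real WebDriver API). When the recorded `id` no longer matches, the function falls back to the element whose attributes are most similar to the last known snapshot, using `difflib.SequenceMatcher` as a simple similarity measure; the 0.6 threshold is an arbitrary illustrative value.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Optional

Element = Dict[str, str]   # attribute name -> value

def similarity(a: Element, b: Element) -> float:
    """Mean string similarity over the attributes both elements define."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    return sum(SequenceMatcher(None, a[k], b[k]).ratio() for k in shared) / len(shared)

def find_element(dom: List[Element], locator_id: str, snapshot: Element,
                 threshold: float = 0.6) -> Optional[Element]:
    """Try the recorded id first; otherwise heal by attribute similarity."""
    for element in dom:
        if element.get("id") == locator_id:
            return element
    best = max(dom, key=lambda el: similarity(el, snapshot), default=None)
    if best is not None and similarity(best, snapshot) >= threshold:
        return best   # a real tool would also persist the new locator
    return None

# Last known attributes of the button, captured when the test last passed.
snapshot = {"id": "submit-btn", "tag": "button", "text": "Submit", "class": "btn primary"}
# The UI after a release: the button's id and label changed.
dom = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "btn"},
    {"id": "submit-button", "tag": "button", "text": "Submit order", "class": "btn primary"},
]
healed = find_element(dom, "submit-btn", snapshot)
print("healed locator:", healed["id"] if healed else None)
```

Returning `None` below the threshold matters: healing to the wrong element would hide a real failure instead of fixing a stale locator.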

Intelligent Test Prioritization and Optimization

Running every test for every build is simply unsustainable in fast-moving CI/CD pipelines. AI in software testing changes the equation by analyzing defect history, code diffs, and module risk scores to decide which tests truly matter.

Instead of spending two days on regression, teams can finish in less than a day while still catching the same number of defects. The advantage is clear: reduced cycle times, accelerated deployments, and no compromise on quality.
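One common way to decide which tests matter, sketched here under stated assumptions, is to score each test by how much of the current diff it covers and blend that with its historical failure rate. The coverage sets, run counts, 0.3 history weight, and test names are hypothetical; real tools would pull them from coverage reports and CI history.

```python
from dataclasses import dataclass
from typing import List, Set

@dataclass
class RegressionTest:
    name: str
    covered_files: Set[str]   # files exercised in the last coverage run
    runs: int
    failures: int

def priority(test: RegressionTest, changed: Set[str], history_weight: float = 0.3) -> float:
    """Blend diff overlap with historical failure rate into one score."""
    overlap = len(test.covered_files & changed) / len(changed) if changed else 0.0
    failure_rate = test.failures / test.runs if test.runs else 0.0
    return (1 - history_weight) * overlap + history_weight * failure_rate

def select(tests: List[RegressionTest], changed: Set[str], budget: int) -> List[str]:
    """Return the names of the highest-priority tests that fit the budget."""
    ranked = sorted(tests, key=lambda t: priority(t, changed), reverse=True)
    return [t.name for t in ranked[:budget]]

suite = [
    RegressionTest("checkout_flow", {"cart.py", "pricing.py", "payment.py"}, runs=50, failures=5),
    RegressionTest("search_results", {"search.py"}, runs=50, failures=10),
    RegressionTest("cart_totals", {"cart.py"}, runs=40, failures=0),
    RegressionTest("profile_edit", {"profile.py"}, runs=30, failures=0),
]
changed_files = {"cart.py", "pricing.py"}
print(select(suite, changed_files, budget=2))
```

Here the diff touches only cart and pricing code, so the two tests covering those files are selected even though `search_results` has the worst failure history.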

Predictive Defect Analytics

Imagine being able to fix a bug before it ever surfaces in testing. That is what predictive analytics brings to QA. By mining commit logs, developer activity, and historical defects, AI highlights modules with the highest likelihood of failure.

This proactive approach has helped organizations cut post-production defects by more than 28%, shifting QA from a reactive safety net into a forward-looking risk prevention strategy.
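Mining commit logs can start as simply as counting how often each module needs bug fixes. The sketch below treats commits whose messages match a fix-like pattern as defect signals and reports a Laplace-smoothed fix ratio per top-level directory. The regex, the `min_commits` cutoff, and the sample history are assumptions for illustration, not a production predictor.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple

Commit = Tuple[str, List[str]]   # (commit message, files touched)

# Heuristic: messages that look like bug fixes count as defect signals.
FIX_PATTERN = re.compile(r"\b(fix(es|ed)?|bug|hotfix|regression)\b", re.IGNORECASE)

def module_of(path: str) -> str:
    """Treat the top-level directory as the module."""
    return path.split("/", 1)[0]

def defect_likelihood(commits: List[Commit], min_commits: int = 3) -> Dict[str, float]:
    """Laplace-smoothed share of each module's commits that were fixes."""
    touched: Dict[str, int] = defaultdict(int)
    fixes: Dict[str, int] = defaultdict(int)
    for message, files in commits:
        is_fix = bool(FIX_PATTERN.search(message))
        for module in {module_of(f) for f in files}:
            touched[module] += 1
            fixes[module] += int(is_fix)
    return {
        module: (fixes[module] + 1) / (touched[module] + 2)
        for module in touched
        if touched[module] >= min_commits   # too little history to judge
    }

history = [
    ("Fix rounding bug in totals", ["billing/totals.py"]),
    ("Add invoice PDF export", ["billing/export.py"]),
    ("Hotfix: null customer id", ["billing/customer.py", "auth/session.py"]),
    ("Fixed token refresh race", ["auth/token.py"]),
    ("Refactor login form", ["auth/forms.py"]),
    ("Update README", ["docs/readme.md"]),
    ("Add session timeout setting", ["auth/session.py"]),
    ("Fix currency symbol", ["billing/format.py"]),
]
for module, score in sorted(defect_likelihood(history).items(), key=lambda kv: -kv[1]):
    print(f"{module}: {score:.2f}")
```

The smoothing keeps a module with one commit that happened to be a fix from jumping straight to a 100% score.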

Synthetic Test Data Generation

Access to realistic, compliant test data has always been a bottleneck. AI resolves this by generating large-scale, privacy-compliant synthetic datasets that mimic production without exposing sensitive information.

For industries like healthcare and finance, this means no more waiting on masked datasets or risking compliance violations. Teams move faster simply because the right data is available when they need it.
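A minimal sketch of synthetic record generation, assuming a hypothetical patient schema. AI-based generators learn distributions and correlations from production data; here the distributions are set by hand (the blood-type weights and the age and blood-pressure parameters are illustrative, not clinical figures), and a fixed seed keeps the dataset reproducible across test runs.

```python
import random
from dataclasses import dataclass
from typing import List

@dataclass
class SyntheticPatient:
    patient_id: str
    age: int
    blood_type: str
    systolic_bp: int

BLOOD_TYPES = ["O+", "O-", "A+", "A-", "B+", "B-", "AB+", "AB-"]
BLOOD_WEIGHTS = [38, 7, 34, 6, 9, 2, 3, 1]   # illustrative relative frequencies

def generate_patients(n: int, seed: int = 42) -> List[SyntheticPatient]:
    """Sample n records from hand-set distributions; no real data is read."""
    rng = random.Random(seed)   # seeded so every test run gets the same data
    patients = []
    for i in range(n):
        age = min(max(int(rng.gauss(45, 18)), 0), 100)   # clamp to a plausible range
        patients.append(SyntheticPatient(
            patient_id=f"SYN-{i:06d}",                     # clearly marked as synthetic
            age=age,
            blood_type=rng.choices(BLOOD_TYPES, weights=BLOOD_WEIGHTS, k=1)[0],
            systolic_bp=int(rng.gauss(110 + 0.4 * age, 12)),  # loosely correlated with age
        ))
    return patients

for patient in generate_patients(3):
    print(patient)
```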


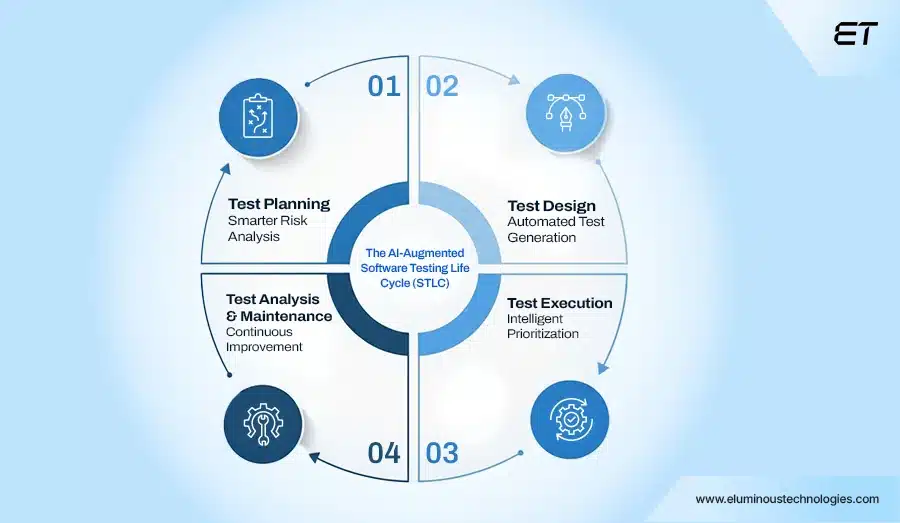

The AI-Augmented Software Testing Life Cycle (STLC)

Every software team knows the cycle: plan, design, execute, maintain. But in practice, this cycle slows down under pressure.

Why? Because too many test cases create too much noise and not enough focus.

AI redefines the Software Testing Life Cycle by shifting it from a manual, reactive process to an intelligent, outcome-driven framework.

1. Test Planning – Smarter Risk Analysis

Instead of relying on assumptions, AI-driven risk analysis shows you where to focus first.

- Identifies high-risk modules based on historical defects and code complexity.

- Provides data-driven effort and timeline estimates using predictive analytics.

- Ensures business-critical coverage instead of blanket testing.

In short, AI makes planning specific and actionable by prioritizing high-risk modules, aligning timelines to real data, and helping ensure that critical business functions do not go untested.

2. Test Design – Automated Test Generation

What usually takes weeks to design, AI in software test automation can create in hours with better accuracy.

- Automatically generates test cases from requirements.

- Creates synthetic yet compliant datasets for realistic testing.

- Removes redundant tests while ensuring strong edge-case coverage.

This means that test design delivers higher coverage in less time, with AI producing compliant datasets, reducing human error, and uncovering edge cases that traditional methods often miss.
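Removing redundant tests while keeping edge-case coverage is a set-cover problem. Here is a minimal greedy sketch, a classic heuristic swapped in for whatever a given AI tool uses internally: it repeatedly keeps the test that covers the most still-uncovered requirements. The test names and requirement IDs are hypothetical, and greedy selection approximates the smallest suite rather than guaranteeing it.

```python
from typing import Dict, List, Set

def minimize_suite(coverage: Dict[str, Set[str]]) -> List[str]:
    """Greedy set cover: keep tests until every requirement is covered."""
    uncovered: Set[str] = set().union(*coverage.values())
    selected: List[str] = []
    while uncovered:
        # Most newly covered requirements wins; ties break alphabetically.
        best = min(coverage, key=lambda t: (-len(coverage[t] & uncovered), t))
        selected.append(best)
        uncovered -= coverage[best]
    return selected

# Hypothetical mapping: test -> requirement IDs it exercises
# (R4 = locked account, R5 = empty fields are the edge cases).
coverage = {
    "login_happy_path": {"R1", "R2"},
    "login_invalid_password": {"R1", "R3"},
    "login_locked_account": {"R3", "R4"},
    "login_full_flow": {"R1", "R2", "R3"},
    "login_empty_fields": {"R5"},
}
kept = minimize_suite(coverage)
print(kept)
```

The two dropped tests only re-check requirements already covered by `login_full_flow`, while both edge-case tests survive.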

3. Test Execution – Intelligent Prioritization

AI makes sure the right tests run at the right time, cutting wasted effort.

- Runs critical tests first based on commit history and risk.

- Balances workloads across multiple environments for speed.

- Dynamically adapts when failures are environment-driven.

The result is measurable acceleration: faster execution cycles, optimized resource use across environments, and defect detection aligned with real risk rather than blind sequencing.
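Balancing workloads across environments can be sketched with the longest-processing-time-first heuristic: sort tests by their historical duration and always hand the next one to the least-loaded environment. The durations and environment names are hypothetical, and a real scheduler would also account for which tests each environment can run.

```python
import heapq
from typing import Dict, List, Tuple

def balance(durations: Dict[str, float], environments: List[str]) -> Dict[str, List[str]]:
    """Longest-processing-time-first: give each test to the least-loaded environment."""
    heap: List[Tuple[float, str]] = [(0.0, env) for env in environments]
    heapq.heapify(heap)
    plan: Dict[str, List[str]] = {env: [] for env in environments}
    for test, seconds in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        load, env = heapq.heappop(heap)          # least-loaded environment so far
        plan[env].append(test)
        heapq.heappush(heap, (load + seconds, env))
    return plan

# Hypothetical average durations (seconds) from previous runs.
durations = {
    "checkout_e2e": 300, "search_e2e": 240, "login_e2e": 120,
    "profile_api": 90, "cart_api": 60, "health_check": 10,
}
plan = balance(durations, ["chrome-linux", "safari-mac"])
for env, tests in plan.items():
    print(env, tests, sum(durations[t] for t in tests), "s")
```

Run serially, these tests take 820 seconds; split this way, the slower environment finishes in 420.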

4. Test Analysis & Maintenance – Continuous Improvement

Instead of testers chasing broken scripts, AI in software test automation quietly keeps the suite healthy.

- Performs root cause analysis by linking logs, stack traces, and commits.

- Applies self-healing automation to update scripts automatically.

- Predicts defect clusters early, reducing production risks.

This transforms analysis into proactive risk prevention: AI heals scripts in real time, connects failures back to their source, and forecasts defect clusters before they reach production.
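The root cause step that links stack traces to commits can be approximated by ranking recent commits according to which failing stack frames they touched. This sketch assumes Python-style tracebacks, where the innermost frame is listed last and therefore gets the highest weight; the commit SHAs, file paths, and trace are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class RecentCommit:
    sha: str
    files: List[str]

FRAME = re.compile(r'File "([^"]+)", line \d+')

def suspect_commits(trace: str, commits: List[RecentCommit]) -> List[Tuple[str, int]]:
    """Rank commits by the weighted stack frames whose files they touched."""
    frames = FRAME.findall(trace)
    # Innermost frame is listed last in Python tracebacks, so weight it highest.
    weights = {path: depth for depth, path in enumerate(frames, start=1)}
    scored = [(c.sha, sum(weights.get(f, 0) for f in c.files)) for c in commits]
    return sorted((s for s in scored if s[1] > 0), key=lambda s: s[1], reverse=True)

trace = """Traceback (most recent call last):
  File "app/api/orders.py", line 42, in create_order
    total = pricing.compute(cart)
  File "app/pricing.py", line 88, in compute
    return subtotal / item_count
ZeroDivisionError: division by zero"""

commits = [
    RecentCommit("a1b2c3", ["app/pricing.py", "tests/test_pricing.py"]),
    RecentCommit("d4e5f6", ["app/api/orders.py"]),
    RecentCommit("0789ab", ["README.md"]),
]
print(suspect_commits(trace, commits))
```

The commit that touched the file where the exception was raised ranks first, and commits unrelated to the trace are dropped entirely.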


Wrapping Up

For enterprises, the role of AI in software testing is no longer optional but is fast becoming essential. By enabling autonomous test creation, self-healing scripts, and predictive defect analytics, AI transforms QA into a strategic lever for business growth.

Yet, adopting AI in software testing is not just about deploying advanced technology; it requires a strategic shift. Forward-looking enterprises are already redefining quality assurance as a competitive differentiator, while those clinging to traditional methods risk slower delivery and higher defect leakage.

The real question is, will your enterprise take the lead in adopting intelligent QA, or will it be left reacting while others set the standard?
