The advent of big data has given rise to a range of data-driven practices. Terms like web extraction, behavioral analytics, Cascading, and Flume are gaining popularity.

By 2025, experts predict that the global data volume could reach 181 zettabytes. To leverage such an enormous amount of information, companies need to implement techniques like web scraping.

This way, it becomes possible to stay ahead of potential competitors and plan operational activities more effectively. But before exploring terms like local scraper, data aggregation, and report mining, it is crucial to know the basics of web scraping.

Without further ado, scroll below and note the fundamentals of this data-centric technique. The information in this blog can set a solid foundation to capitalize on this modern automated process.

The ABCs of Web Scraping

If you want to know the definition of this technique, here it is:

‘Web scraping is a manual or automated process of extracting large amounts of structured data from online websites or web pages.’

Now, if you want to understand this concept in simple words, read these uncomplicated pointers:

- Consider that you want to analyze some data on customer behavior

- You refer to various blogs that belong to your domain

- After exploring such blogs, you want to compile the data in a systematic manner

- A scraper tool helps you in this process by collecting and exporting this data

- You can save this information in the form of a spreadsheet, API, database, or any convenient configuration

The best language for web scraping can help you perform the data extraction process seamlessly. Python, PHP, C++, JavaScript, and Ruby are some valuable suggestions for conducting the web scraping activity.

The Multiple Utility of Web Scraping

This data extraction process is valuable for modern businesses, as the following application areas show. Keep them in mind when you choose a scraper tool for your next data analysis project.

Table: Application Areas of Web Scraping

| Domain | Explanation |
| --- | --- |
| Market Research | Data analysts can use this technique for trend analysis, competitor surveys, R&D, and other assessments |
| Real Estate | Web scrapers help retrieve data on properties, listings, monthly rental income, and other resources |
| Brand Monitoring | Data extraction is useful for gathering information on public opinions, preferences, and a brand’s perception |
| News and Content Marketing | Web scraping can prove useful in collecting data on various industries, public sentiments, and other crucial factors |
| Price Intelligence | This technology is primarily helpful for ecommerce businesses to track the prices, discounts, and offers of their competitors |
| Machine Learning | Web scraping can assist in collecting large datasets for training machine learning models, such as those used for image recognition and sentiment analysis |

The Working of Web Scraping Process Explained

Before choosing the best language for web scraping, it is crucial to understand the primary working process. Here are the generic steps that most experts follow:

- First, you need to identify the website for data extraction

- The next step comprises collecting URLs of the web pages

- Use a scraper tool to make an HTTP request and get the HTML code

- Use suitable locators to find the relevant data in the HTML

- Save the information in JSON, XLS, CSV, or any other relevant format
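The steps above can be sketched with nothing but the Python standard library. This is a minimal illustration, not a production scraper: the `h2` elements with class `title`, the sample HTML, and all function names are assumptions made for the example, and `fetch_html` is defined but deliberately not called so that the sketch runs offline.

```python
import csv
import io
import urllib.request
from html.parser import HTMLParser


def fetch_html(url: str) -> str:
    """Step 3: make an HTTP request and return the HTML (not called in this sketch)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class TitleLocator(HTMLParser):
    """Step 4: a simple locator that collects the text of <h2 class="title">."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._inside_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "h2" and ("class", "title") in attrs:
            self._inside_title = True

    def handle_endtag(self, tag):
        if tag == "h2":
            self._inside_title = False

    def handle_data(self, data):
        if self._inside_title and data.strip():
            self.titles.append(data.strip())


def extract_titles(html: str) -> list:
    locator = TitleLocator()
    locator.feed(html)
    locator.close()
    return locator.titles


def to_csv(titles: list) -> str:
    """Step 5: serialize the extracted values as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["title"])
    writer.writerows([title] for title in titles)
    return buffer.getvalue()


# Hypothetical page content standing in for a real HTTP response.
SAMPLE_HTML = """
<html><body>
  <h2 class="title">First post</h2>
  <p>Some text</p>
  <h2 class="title">Second post</h2>
</body></html>
"""

titles = extract_titles(SAMPLE_HTML)
csv_text = to_csv(titles)
print(csv_text)
```

In a real project, you would pass the result of `fetch_html(url)` to `extract_titles` and write the CSV text to a file.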

These five steps become possible due to two major components – web crawler and scraper. What do these tools do? Find out in the subsequent section.

Web Crawler

The alternate name for this web scraping component is ‘spider.’ It is an automated program (a bot) that follows links to find relevant content, just like a spider crawling across various surfaces.

Depending on your requirements, a web crawler can index a single website or the entire internet. You then pass the indexed pages to the scraper.
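To make the idea of a crawler concrete, here is a small breadth-first sketch that stays on one host. It is only an illustration under stated assumptions: `crawl`, `LinkCollector`, and the in-memory `FAKE_SITE` are hypothetical names, and the `fetch` callable stands in for whatever HTTP client you use.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl limited to the host of start_url.

    `fetch` is any callable that maps a URL to HTML text.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        visited.append(url)
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            absolute = urljoin(url, href)
            # Skip external hosts and pages that are already queued.
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical in-memory "website" so the sketch runs without network access.
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="https://other.example/x">X</a>',
    "https://example.com/b": '<a href="/">Home</a>',
}

pages = crawl("https://example.com/", lambda url: FAKE_SITE.get(url, ""))
print(pages)
```

The list of visited URLs is what a crawler would hand over to the scraper for data extraction.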

Web Scraper

The scraper tool is crucial in the data extraction process. It uses data locators that play a vital role in deriving information from the HTML code.

Scrapy, Apify, Octoparse, and Diffbot are prominent web scraping tools in the market.

Categories of Web Scraping Tools

You should be aware of the available options to use a suitable scraper tool. So, refer to this table to understand the alternatives for each scraping tool category.

Table: Types of Scraping Tools

| Tool Category | Explanation |
| --- | --- |
| Browser Extensions | Let you define sitemaps and data extraction commands from within the browser |
| Cloud Scraper Tools | Offer advanced features and run on off-site servers |
| UI Web Scrapers | Render complete websites and have an ergonomic user interface |
| Self-built Scrapers | Programmers can create customized crawlers using knowledge of the best language for web scraping, like Python |

You can use the following Python tools and libraries to create self-built scrapers:

- Scrapy Framework

- Urllib

- Requests

- BeautifulSoup

- Lxml

- Selenium

- MechanicalSoup

- JSON, CSV, and XML Libraries

- Celery

- Regex

Overall, once you have a sophisticated scraper tool, it is possible to perform efficient data extraction at your convenience.

Note: If you select Python for web scraping, choose Scrapy, Selenium, or BeautifulSoup.

Bonus Section: Using Scrapy for Web Scraping

If you are conversant with the basics, try this basic tutorial with the tool Scrapy. This scraper tool is a high-level framework useful for data mining, automated testing, and extracting structured data.

Step 1: Use Python, one of the best languages for web scraping. This initial step involves installing scrapy.

Command: pip (package-management system)

pip install scrapy

Step 2: Create a new Scrapy project

Command: scrapy startproject projectname

scrapy startproject scrapyproject

Use the below command to receive help on this topic:

scrapy --help

Note: It is crucial to understand that the project name and spider name cannot be the same

Step 3: This step involves the creation of web crawler(s). To begin, you need to change the directory using the following command:

cd scrapyproject

You can then create more than one spider. For this purpose, follow this code:

Command: genspider

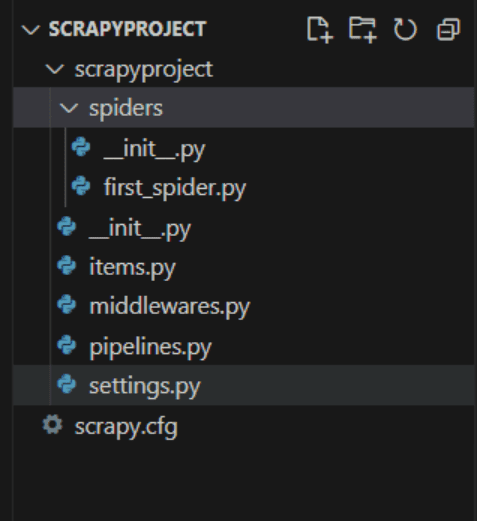

scrapy genspider spider_name website_nameThe above image displays the creation of a Scrapy project using appropriate spiders. You can also use the following commands to perform specific functions:

Table: Some Scrapy Project Commands

| Name | Utility |

| /spiders/first_spider.py | Implementation of data extraction logic |

| /items.py | Spiders can return extracted data as items |

| /middlewares.py | Two middlewares are available in this module – SpiderMiddleware and DownloadMiddleware |

| /pipelines.py | To clean text and connect with the desired output. It is also useful to pass data in the database |

| /settings.py | To customize behavior of Scrapy components |

| /scrapy.cfg | For eggifying the project and uploading it to Scrapyd |
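As an illustration of what could go into pipelines.py, here is a minimal sketch of a text-cleaning pipeline. Scrapy calls `process_item(item, spider)` on each enabled pipeline; the class name `CleanTextPipeline` and the sample item are assumptions made for this example.

```python
import re


class CleanTextPipeline:
    """Hypothetical item pipeline that tidies whitespace in string fields."""

    def process_item(self, item, spider):
        for key, value in list(item.items()):
            if isinstance(value, str):
                # Collapse runs of whitespace (including newlines) into one space.
                item[key] = re.sub(r"\s+", " ", value).strip()
        return item


# Quick check with a plain dict standing in for a scraped item.
pipeline = CleanTextPipeline()
cleaned = pipeline.process_item({"text": "  Hello \n  world ", "likes": 3}, spider=None)
print(cleaned)
```

To activate such a pipeline, you would typically register it under `ITEM_PIPELINES` in settings.py, for example `{"scrapyproject.pipelines.CleanTextPipeline": 300}`, assuming the project name used earlier in this tutorial.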

Useful Guidelines for Effective Web Scraping

Whether you use a local scraper or hire skilled data professionals, some best practices remain constant. This section mentions the top web scraping pointers that data analysts and extractors should follow.

Check Website Robots

Before web crawling, you should check the robots.txt of a website. This text file sits in the website’s root directory.

The primary purpose of robots.txt is to tell spiders which parts of a site they may crawl. The website administrator publishes this file to define the pages that a spider can and cannot access.
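Python ships with `urllib.robotparser`, which can evaluate these rules for you. The sketch below parses an inline, made-up robots.txt so that it runs offline; the user agent name `my-bot` and the example.com URLs are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at <site root>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url: str, user_agent: str = "my-bot") -> bool:
    """Return True when the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(allowed("https://example.com/blog/"))
print(allowed("https://example.com/private/data.html"))
print(parser.crawl_delay("my-bot"))
```

Against a live site, you would call `parser.set_url(...)` followed by `parser.read()` instead of `parse`, and skip every URL for which `allowed` returns False.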

Use a Proxy

Always use proxies to route your web scraping requests. This way, your requests are spread across several IP addresses, which makes it less likely that you will exceed a website’s per-IP request threshold.

You can use a proxy in Scrapy by creating custom middleware or by relying on the built-in HttpProxyMiddleware.
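A custom downloader middleware for this purpose might look like the sketch below. It relies on the fact that Scrapy honours the `proxy` key in `request.meta`; the class name, the `PROXY_LIST` setting, and the proxy addresses are hypothetical and would need to be replaced with your own.

```python
import random


class RandomProxyMiddleware:
    """Hypothetical downloader middleware that assigns a random proxy per request."""

    def __init__(self, proxies):
        self.proxies = list(proxies)

    @classmethod
    def from_crawler(cls, crawler):
        # PROXY_LIST is an assumed custom setting, not a built-in Scrapy setting.
        return cls(crawler.settings.getlist("PROXY_LIST"))

    def process_request(self, request, spider):
        # Respect a proxy that was already set explicitly on the request.
        if self.proxies and "proxy" not in request.meta:
            request.meta["proxy"] = random.choice(self.proxies)
        return None  # let Scrapy continue processing the request


# Offline check with a stand-in object that only carries a `meta` dict.
class FakeRequest:
    def __init__(self):
        self.meta = {}


middleware = RandomProxyMiddleware(
    ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]
)
request = FakeRequest()
middleware.process_request(request, spider=None)
print(request.meta["proxy"])
```

You would then enable the class under `DOWNLOADER_MIDDLEWARES` in settings.py so that it runs for every outgoing request.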

Adjust the Crawl Rate of Your Scraper Tool

A human visitor browses a website slowly. Web crawlers or bots, on the other hand, request pages much faster. So, an anti-scraping mechanism can detect this activity, leading to the blocking of your IP.

The solution to this challenge is to vary the speed of your spiders so that it resembles human browsing.
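In Scrapy, crawl speed is mostly a matter of configuration. The fragment below shows settings you could place in settings.py; the setting names are Scrapy's own, while the values are illustrative assumptions that you should tune for each target site.

```python
# settings.py (fragment) - illustrative values, not recommendations

ROBOTSTXT_OBEY = True                # respect robots.txt rules

DOWNLOAD_DELAY = 2.0                 # base delay in seconds between requests
RANDOMIZE_DOWNLOAD_DELAY = True      # wait 0.5x to 1.5x of DOWNLOAD_DELAY
CONCURRENT_REQUESTS_PER_DOMAIN = 2   # keep parallel requests per site low

AUTOTHROTTLE_ENABLED = True          # adapt the delay to server response times
AUTOTHROTTLE_START_DELAY = 1.0       # initial delay in seconds
AUTOTHROTTLE_MAX_DELAY = 30.0        # upper bound when the server is slow
```

With the AutoThrottle extension enabled, Scrapy adjusts the delay dynamically, so the crawl slows down when the server responds slowly.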

Change the Crawling Pattern

Because web crawlers are fast and efficient, their behavior can become predictable over time. As a result, anti-scraping technology can detect identical crawling patterns and block your bot.

So, using random crawling patterns can prevent such a scenario and improve the effectiveness of your web scraping activity.
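One simple way to randomize a crawl is to shuffle the order of the URLs and vary the pause before each request. The sketch below only builds such a plan and does not fetch anything; `randomized_plan`, the delay bounds, and the example URLs are assumptions made for illustration.

```python
import random


def randomized_plan(urls, min_delay=1.0, max_delay=5.0, rng=None):
    """Return (url, delay_in_seconds) pairs in shuffled order with random pauses."""
    rng = rng or random.Random()
    shuffled = list(urls)
    rng.shuffle(shuffled)
    return [(url, rng.uniform(min_delay, max_delay)) for url in shuffled]


# Hypothetical list of pages to visit.
URLS = [
    "https://example.com/page/1",
    "https://example.com/page/2",
    "https://example.com/page/3",
    "https://example.com/page/4",
]

plan = randomized_plan(URLS)
for url, delay in plan:
    print(f"wait {delay:.2f}s, then fetch {url}")
```

A crawler would sleep for `delay` seconds before requesting each `url`, so no two runs follow the same sequence or timing.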

The Common Challenges in Web Scraping That You Should Know

You can use the best local scraper for data extraction purposes. Still, in some cases, the effectiveness of your process can fall short. If you notice this, you are probably facing one of the well-known data scraping challenges.

This section discusses some of the most prevalent challenges and their simple solutions.

The Complexity of the Website Structure

A scraper tool can find it difficult to crawl complex websites or web portals. Some organizations install anti-scraping mechanisms and purposefully build intricate architecture.

So, what is the solution to this challenge? You need to understand the web scraping process properly and analyze the situation carefully.

Solution

First, parse the HTML structure of the website and locate the elements accurately. For this purpose, you can use tools like XPath or BeautifulSoup. Further, you can also use Regular Expressions to extract the required information.
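The following sketch combines both ideas: XPath-style locators to find the elements and a regular expression to pull the number out of the price text. It uses the standard library's `xml.etree.ElementTree`, which supports only a limited XPath subset and requires well-formed markup; for real-world HTML you would more likely use lxml or BeautifulSoup. The sample markup and class names are assumptions.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed snippet standing in for a product listing page.
SAMPLE = """
<html><body>
  <div class="product"><h2>Desk lamp</h2><span class="price">USD 24.99</span></div>
  <div class="product"><h2>Notebook</h2><span class="price">USD 3.50</span></div>
  <div class="banner"><h2>Sale!</h2></div>
</body></html>
"""

PRICE_PATTERN = re.compile(r"\d+(?:\.\d+)?")


def extract_products(markup: str) -> list:
    root = ET.fromstring(markup.strip())
    products = []
    # Locator: every <div> whose class attribute equals "product".
    for node in root.findall(".//div[@class='product']"):
        name = node.findtext("h2")
        price_text = node.findtext("span[@class='price']") or ""
        match = PRICE_PATTERN.search(price_text)
        products.append(
            {"name": name, "price": float(match.group()) if match else None}
        )
    return products


products = extract_products(SAMPLE)
print(products)
```

The banner `div` is ignored because it does not match the locator, which is the point of choosing locators precisely.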

IP Blocking and Captcha

Website owners have become smart. They do not want web scrapers to extract data without hassle. So, they install features like a captcha and block the IP address of a local scraper to prevent automated scraping.

Solution

You can use proxy servers and IP rotation to get the most out of your scraper tool. Also, if legally permissible, techniques like a captcha solver can prove effective.

Existence of Dynamic Content

Data extraction of static websites is simple. However, these days, you can come across several sites that use JavaScript or AJAX to create dynamic content. In this scenario, scraping the desired information in simple steps becomes difficult.

Solution

To render dynamic content, you can use browser automation tools like Puppeteer or Selenium. Also, it is an excellent tactic to monitor network traffic and spot API endpoints or XHR requests.
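When a page loads its data through an XHR request, the endpoint often returns JSON that is easier to parse than rendered HTML. The sketch below assumes such a response; the payload structure, field names, and `parse_products` are hypothetical and will differ for every site.

```python
import json

# Hypothetical JSON body, as an XHR endpoint found in the browser's
# network tab might return it.
SAMPLE_RESPONSE = """
{
  "page": 1,
  "has_next": false,
  "items": [
    {"id": 7, "title": "Blue mug", "price": "8.90"},
    {"id": 9, "title": "Tea towel", "price": "4.25"}
  ]
}
"""


def parse_products(body: str) -> list:
    """Turn the JSON payload into a list of flat records."""
    payload = json.loads(body)
    return [
        {"id": item["id"], "title": item["title"], "price": float(item["price"])}
        for item in payload.get("items", [])
    ]


records = parse_products(SAMPLE_RESPONSE)
print(records)
```

If the payload exposes paging information, such as the assumed `has_next` flag here, you can request the following pages until it turns false.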

Rate Limiting

Some websites have a mechanism that limits the number of requests. This mechanism prevents excessive web scraping and safeguards the site’s data. So, is there a solution to this challenge? In a word, yes.

Solution

You can comply with the rate limits by planning delays between scraping requests. Exponential backoff is useful for adjusting such intervals based on server responses. In addition, it is also possible to increase the scraping capacity by using multiple IP addresses.
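Exponential backoff can be sketched as follows: after each rate-limited attempt, the wait time doubles, with a little random jitter added. The `RateLimited` exception and the `fetch` callable are hypothetical stand-ins for your HTTP client signalling a response such as HTTP 429.

```python
import random
import time


class RateLimited(Exception):
    """Raised by the (hypothetical) fetch callable when the server throttles us."""


def fetch_with_backoff(fetch, url, max_retries=5, base_delay=1.0,
                       max_delay=60.0, sleep=time.sleep):
    """Call fetch(url), doubling the wait after every rate-limited attempt."""
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except RateLimited:
            if attempt == max_retries:
                raise  # give up after the final retry
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Jitter avoids many clients retrying at the same instant.
            sleep(delay + random.uniform(0, delay / 2))


# Offline demonstration: the fake server throttles the first two calls.
calls = {"count": 0}
waits = []


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] <= 2:
        raise RateLimited(url)
    return "<html>ok</html>"


result = fetch_with_backoff(flaky_fetch, "https://example.com/", sleep=waits.append)
print(result, [round(w, 2) for w in waits])
```

Passing `sleep=waits.append` records the planned delays instead of actually pausing, which keeps the demonstration instant; in real use you would keep the default `time.sleep`.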

Violation of Intellectual Property (IP) and Terms of Service

Data extraction can have legal implications. So, you should take appropriate measures to prevent the violation of the terms and conditions of a website.

Solution

Adherence to the website’s scraping policy is the best solution to this challenge. You should also follow the robots.txt file and take legal advice from suitable experts without fail.

Incomplete and Inconsistent Website Data

Not all websites have good structuring of data. You can find a website with valuable content, but the information can be inconsistent. In such a case, the web scraping activity can face evident hurdles.

Solution

You can apply data cleansing and normalization to eliminate the inconsistencies. To structure the data, you can also use a library like Pandas. Regular expressions and string manipulation are standard ways to solve this challenge.
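Here is a minimal cleansing sketch that uses only regular expressions and string methods; Pandas offers equivalent operations for larger datasets. The record fields and sample values are assumptions, and the price parser treats a comma as a decimal separator, which will not suit every locale.

```python
import re


def normalize_record(raw: dict) -> dict:
    """Tidy whitespace and casing, and convert the price text to a float."""
    name = re.sub(r"\s+", " ", raw.get("name", "")).strip().title()
    match = re.search(r"\d+(?:[.,]\d+)?", raw.get("price", ""))
    price = float(match.group().replace(",", ".")) if match else None
    return {"name": name, "price": price}


def clean(records: list) -> list:
    """Normalize every record, dropping empty names and duplicates."""
    seen = set()
    cleaned = []
    for raw in records:
        record = normalize_record(raw)
        if not record["name"] or record["name"] in seen:
            continue
        seen.add(record["name"])
        cleaned.append(record)
    return cleaned


# Hypothetical scraped rows with typical inconsistencies.
RAW_ROWS = [
    {"name": "  desk   lamp ", "price": "$24.99"},
    {"name": "Desk Lamp", "price": "24,99 USD"},
    {"name": "notebook", "price": "N/A"},
    {"name": "", "price": "5"},
]

rows = clean(RAW_ROWS)
print(rows)
```

Missing prices are kept as `None` rather than guessed, so later analysis can decide how to treat them.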

In a Nutshell

If you want to perform web scraping, it is crucial to understand the fundamentals of this process. Simply put, this technique uses a scraper tool to extract data from the internet.

You can use this data for competitor analysis, consumer sentiment analysis, price analysis, and other crucial activities. Choosing the right tool and implementing the best web scraping practices are vital decisions before performing this process.

However, it is crucial to respect the robots.txt file and skip extracting restricted website data during this activity. For instance, course modules, research information, and private details of employees should not fall under your scraping process.

To receive more detailed guidance on this data extraction technique, you can contact eLuminous Technologies. Our dedicated team of experts can suggest the right tools and consult on safe web scraping practices for your business.

Frequently Asked Questions

1. Why should I use Python for web scraping?

Some developers feel that Python is the best language for web scraping. The reasons are a vast range of libraries, quick code execution, a large Python community, and the absence of unnecessary syntax symbols.

2. What are some popular websites that support scraping?

Wikipedia, Toscrape, Reddit, and Scrapethissite are good options for implementing your web scraping skills. You can use tools like Diffbot, Grepsr, or Octoparse to perform seamless data extraction activities.

3. Which well-known programming languages make web scraping easy?

If you are a beginner, knowledge of Ruby or Python is a good starting point for website scraping. A good grasp of coding and know-how of an excellent local scraper are prerequisites to initiating this vital data analysis process.

Avdhoot is a fervent technical writer who aims to create valuable content that offers detailed insights to readers. He believes in utilizing various elements that make the content original, readable, and comprehensible. You can witness him indulging in sports, photography or enjoying a long drive on the city’s outskirts when not writing.