A Complete Guide to the AI Tech Stack: Everything You Need to Know

Artificial intelligence (AI) has touched nearly every industry and is reinventing many of them. The capabilities it brings have turned it from a novelty into a necessity. Building AI solutions, however, requires a strong foundation, and that’s where an AI tech stack comes in.

In this blog, we’ll explore the layers and stages of an AI tech stack to help you get started on your AI journey. Read on to learn how to pick the right AI tech stack for your business.

What is an AI Tech Stack?

An AI tech stack is the set of tools, libraries, and frameworks needed to develop, deploy, and maintain an AI application. It comprises several layers, each serving a specific purpose, that together form a functional system.

When tailored to the requirements of AI and machine learning projects, a tech stack brings consistency, scalability, and efficiency. It also reduces the effort of stitching together disparate tools and technologies on a case-by-case basis.


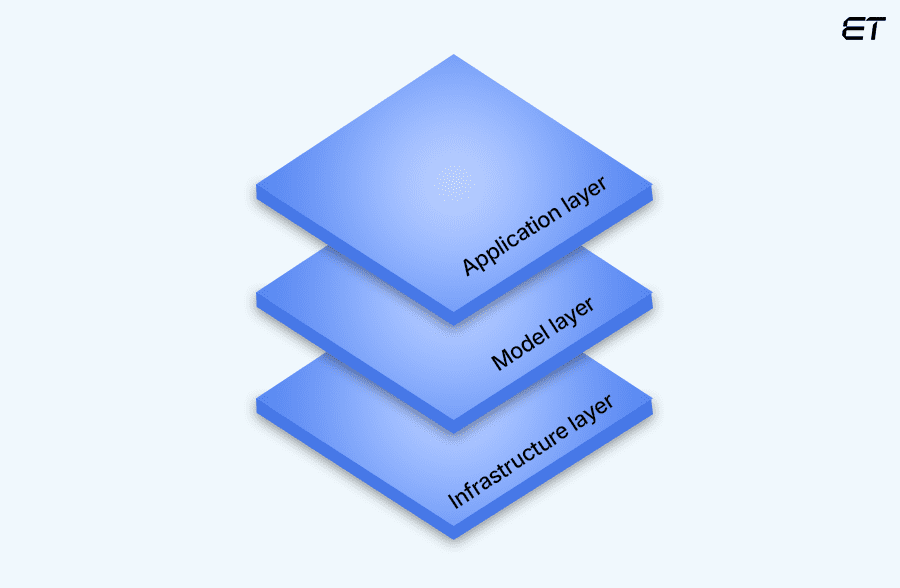

Layers in AI Tech Stack

The layers in an AI tech stack are interconnected, with each one building on the layer beneath it to deliver a powerful AI solution. Let’s look at each in detail.

1. Application Layer

The application layer is the topmost layer of the AI tech stack, where end users interact with the system. Simply put, it is the access point through which people use the AI capabilities on offer. It consists of the tools and frameworks that deliver a seamless user experience.

The key elements in this layer are as follows:

- User interfaces (UIs): Dashboards and visualization tools that make AI insights accessible to end users.

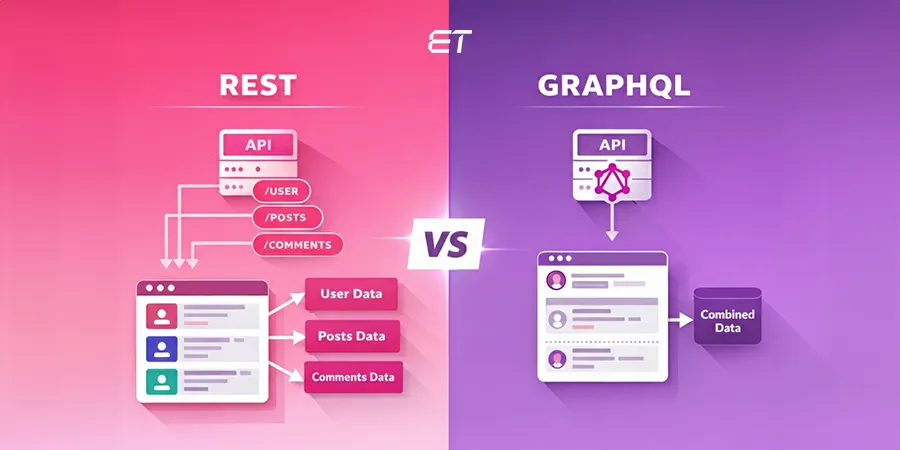

- API gateways: Middleware that routes requests between the frontend, backend services, and AI models, enabling these components to interact and integrate.

- Frontend frameworks: Tools such as Angular, React, and Vue.js are typically used for building responsive UIs.

- Backend services: Server-side components that handle user input and process the data before it is sent to AI models.
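To make the backend services bullet concrete, here is a minimal sketch of the server-side step that validates user input before it reaches a model. It uses only Python’s standard library; `predict`, its weights, `EXPECTED_FEATURES`, and `handle_predict_request` are hypothetical placeholders, and a real service would sit behind a web framework and call an actual trained model.

```python
import json

EXPECTED_FEATURES = 3  # assumed input size for this example


def predict(features: list[float]) -> float:
    """Hypothetical stand-in for a trained model (placeholder weights)."""
    weights = [0.4, -0.2, 0.1]
    return sum(w * x for w, x in zip(weights, features))


def is_number(value) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def handle_predict_request(body: str) -> tuple[int, str]:
    """Validate a JSON request body, call the model, and return (status, JSON body)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "body is not valid JSON"})

    features = payload.get("features") if isinstance(payload, dict) else None
    if (
        not isinstance(features, list)
        or len(features) != EXPECTED_FEATURES
        or not all(is_number(x) for x in features)
    ):
        return 400, json.dumps(
            {"error": f"'features' must be a list of {EXPECTED_FEATURES} numbers"}
        )

    score = predict([float(x) for x in features])
    return 200, json.dumps({"score": round(score, 4)})
```

Calling `handle_predict_request('{"features": [1, 2, 3]}')` returns status 200 with a score, while a malformed body or a wrong-length list is rejected with 400 before the model is ever called.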

2. Modeling Layer

In this layer, data is preprocessed and AI models are developed, trained, and optimized. The layer takes in data, runs computations on it, and delivers insights. Its work includes selecting the right model architectures and optimizing them for efficiency.

The primary components in this layer include:

- Development frameworks: Libraries such as TensorFlow, Keras, Scikit-Learn, and PyTorch provide pre-built functions, algorithms, and neural network architectures for model development.

- Hyperparameter tuning: Tools such as Optuna and Hyperopt search for the hyperparameter values (for example, the learning rate) that give the best model performance.

- Model evaluation: Metrics such as accuracy, precision, and recall are calculated to measure how well a model performs.
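The model evaluation and hyperparameter tuning bullets can be illustrated with a small, dependency-free Python sketch. It computes accuracy, precision, and recall by hand, then runs a grid search over a single hyperparameter, the decision threshold, keeping the value with the best F1 score. Scikit-Learn provides tested versions of these metrics, and Optuna or Hyperopt search much larger spaces more efficiently; the function names and toy data here are illustrative assumptions.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives, and false negatives for 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, fn


def evaluate(y_true, y_pred):
    """Return accuracy, precision, and recall for binary predictions."""
    tp, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


def f1(metrics):
    """Harmonic mean of precision and recall."""
    p, r = metrics["precision"], metrics["recall"]
    return 2 * p * r / (p + r) if p + r else 0.0


def tune_threshold(y_true, scores, candidates):
    """Grid search over one hyperparameter: the decision threshold."""
    best = None
    for threshold in candidates:
        y_pred = [1 if s >= threshold else 0 for s in scores]
        score = f1(evaluate(y_true, y_pred))
        if best is None or score > best[1]:
            best = (threshold, score)
    return best  # (best threshold, its F1 score)


# Toy labels and model scores, for illustration only
y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.65, 0.3, 0.2, 0.7]
print(tune_threshold(y_true, scores, [0.25, 0.5, 0.8]))
```

On this toy data the lowest threshold wins because it recovers every positive at a modest cost in precision; with real data, the metric you optimize should reflect which errors matter most to the business.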

3. Infrastructure Layer

The infrastructure layer provides the computational resources, storage, and deployment mechanisms that AI models need to run. In other words, it is the foundation of the stack, handling the heavy lifting of data processing and model training.

The layer comprises the following elements:

- Computational resources: Hardware such as CPUs, GPUs, and TPUs, along with cloud services such as AWS, Google Cloud, and Azure that provide this hardware on demand.

- Data storage: Scalable options such as data lakes, databases, and distributed file systems manage the large volumes of data needed for training and prediction while keeping access latency low.

- Deployment platforms: Tools that make it possible to run AI models as scalable services. For instance, Docker packages a model and its dependencies into a container, TensorFlow Serving exposes trained models through an API, and Kubernetes scales containers and restarts failed ones to provide fault tolerance.

- Monitoring and management: Tools such as Prometheus, Grafana, and MLflow that track the performance and lifecycle of deployed models.
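Monitoring tools like Prometheus and Grafana work on metrics that the serving code exposes. The plain-Python sketch below shows the kind of signal they track: it keeps a rolling window of request latencies and flags when the 95th-percentile latency crosses a limit. The class name, window size, and 200 ms threshold are illustrative assumptions; in production you would typically export raw metrics with a Prometheus client library and define alerts there rather than compute them in-process.

```python
import statistics
from collections import deque


class LatencyMonitor:
    """Rolling window of request latencies with a simple p95 alert.

    A hypothetical in-process stand-in for what Prometheus would scrape and
    Grafana would chart; window size and threshold are assumptions.
    """

    MIN_SAMPLES = 20  # avoid alerting on too little data

    def __init__(self, window: int = 100, p95_threshold_ms: float = 200.0):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.p95_threshold_ms = p95_threshold_ms

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> float:
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
        # Requires at least two samples.
        return statistics.quantiles(self.samples, n=20)[-1]

    def should_alert(self) -> bool:
        return (
            len(self.samples) >= self.MIN_SAMPLES
            and self.p95() > self.p95_threshold_ms
        )
```

Alerting on a high percentile rather than the average keeps a few slow requests from being hidden by many fast ones.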

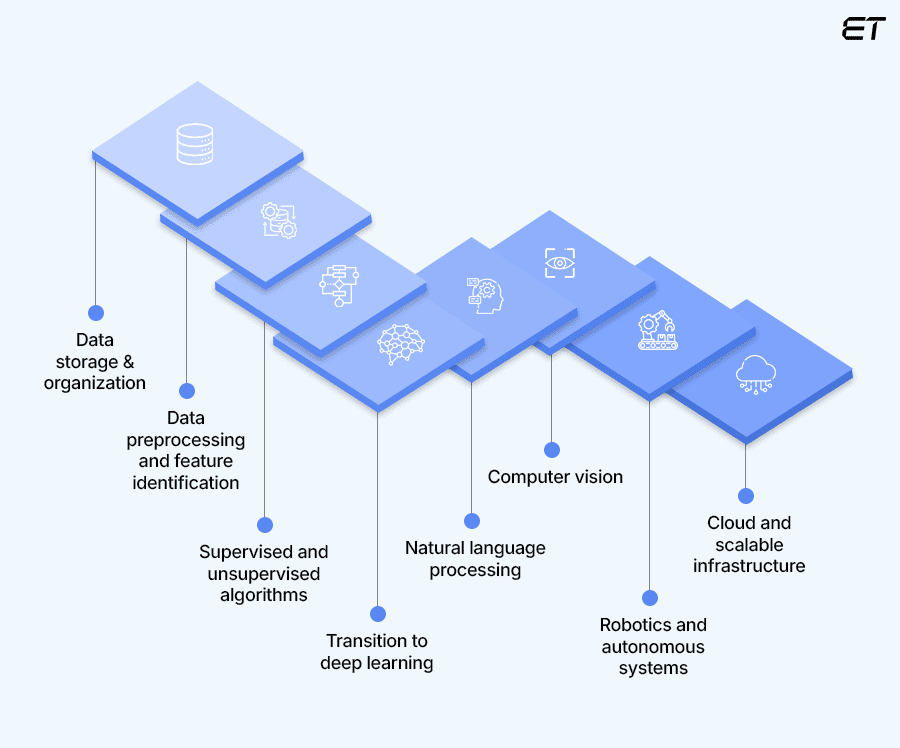

Stages of AI Tech Stack

AI systems are typically developed and deployed in two key phases, which keeps progress organized and makes bottlenecks quicker to resolve. Each phase is supported by different components of the tech stack. Let’s look at them more closely.

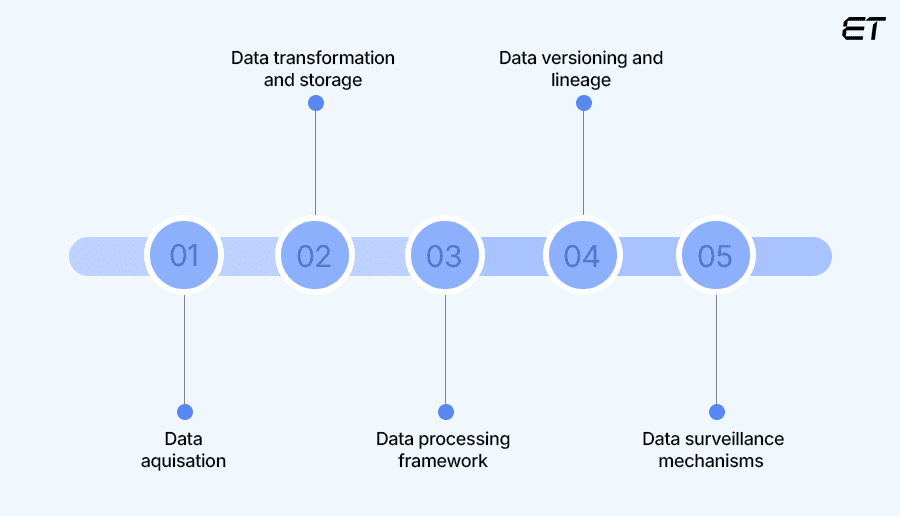

1. Data Management Infrastructure

This phase deals with the collection, structuring, storing, and processing of data to prepare it for analysis and model training.

Stage 1: Data Acquisition

This stage involves gathering raw data, often staged in object storage such as Amazon S3 or Google Cloud Storage, to build the datasets an AI solution needs. The data is then labeled for supervised machine learning. Combining automated labeling with careful manual verification generally gives the best results.
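The balance between automated labeling and manual verification is often implemented as confidence-based routing: labels assigned with high confidence are accepted, and the rest go to a human reviewer. Below is a minimal Python sketch of that idea; the keyword rules, confidence values, and 0.8 review threshold are hypothetical placeholders for a real model or labeling service.

```python
from dataclasses import dataclass


@dataclass
class LabeledItem:
    text: str
    label: str
    confidence: float
    needs_review: bool


def auto_label(text: str) -> tuple[str, float]:
    """Hypothetical rule-based labeler standing in for a pre-trained model."""
    lowered = text.lower()
    if "refund" in lowered or "broken" in lowered:
        return "complaint", 0.9
    if "thanks" in lowered or "great" in lowered:
        return "praise", 0.85
    return "other", 0.4  # no rule matched, so confidence is low


def label_batch(texts, review_threshold: float = 0.8):
    """Label automatically, flagging low-confidence items for manual review."""
    items = []
    for text in texts:
        label, confidence = auto_label(text)
        items.append(
            LabeledItem(text, label, confidence, confidence < review_threshold)
        )
    return items


items = label_batch(
    ["My order arrived broken", "Thanks, great service", "Where is my parcel?"]
)
review_queue = [item for item in items if item.needs_review]
```

Raising the threshold sends more items to reviewers and improves label quality at the cost of more manual effort.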

Stage 2: Data Transformation and Storage

With Extract, Transform, Load (ETL), data is cleaned and refined before it is stored. Extract, Load, Transform (ELT), on the other hand, stores the raw data first and transforms it afterward. Reverse ETL then syncs data from storage back into end-user tools and interfaces.

The data is then kept in data lakes or warehouses, which is where cloud solutions come in.
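To make the difference between ETL and ELT concrete, here is a small Python sketch that uses the standard-library `sqlite3` module as a stand-in for a data warehouse. The table names and sample records are hypothetical. ETL cleans the records in application code before loading them; ELT loads the raw rows first and transforms them with SQL inside the store.

```python
import sqlite3

# Hypothetical raw records as they might arrive from a source system
RAW_ORDERS = [
    {"id": 1, "amount": "19.99", "country": " us "},
    {"id": 2, "amount": "5.00", "country": "DE"},
    {"id": 3, "amount": "", "country": "us"},  # missing amount
]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in Python first, then load only the clean rows."""
    conn.execute("CREATE TABLE orders_etl (id INTEGER, amount REAL, country TEXT)")
    clean = [
        (r["id"], float(r["amount"]), r["country"].strip().upper())
        for r in RAW_ORDERS
        if r["amount"]  # drop rows without an amount
    ]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", clean)


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load raw rows as-is, then transform them with SQL inside the store."""
    conn.execute("CREATE TABLE orders_raw (id INTEGER, amount TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO orders_raw VALUES (:id, :amount, :country)", RAW_ORDERS
    )
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT id, CAST(amount AS REAL) AS amount, UPPER(TRIM(country)) AS country
        FROM orders_raw
        WHERE amount <> ''
        """
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
```

Both paths end with the same clean rows; the difference is where the transformation runs. Because ELT keeps the untouched `orders_raw` table, the data can be re-transformed later without extracting it again.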

Stage 3: Data Processing

With your data stored, it can be processed into formats that models can consume, using libraries such as NumPy and Pandas. For datasets too large for a single machine, Apache Spark can distribute the processing across a cluster.

Feature stores such as Iguazio, Tecton, and Feast can also be used for feature management, keeping feature values consistent between model training and inference.
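Feature stores keep feature values together with timestamps, so training jobs can use values as they were at a given moment while live predictions use the latest ones. The plain-Python sketch below shows that core idea; `TinyFeatureStore` and its methods are hypothetical and do not mirror the API of Iguazio, Tecton, or Feast.

```python
import bisect
from collections import defaultdict


class TinyFeatureStore:
    """Hypothetical in-memory feature store keyed by (entity, feature).

    Storing timestamps enables point-in-time lookups, which keep training
    data from "seeing" values that only became known later.
    """

    def __init__(self):
        # (entity_id, feature) -> parallel lists, sorted by timestamp
        self._times = defaultdict(list)
        self._values = defaultdict(list)

    def write(self, entity_id, feature, timestamp, value):
        key = (entity_id, feature)
        i = bisect.bisect_right(self._times[key], timestamp)
        self._times[key].insert(i, timestamp)
        self._values[key].insert(i, value)

    def get_as_of(self, entity_id, feature, timestamp):
        """Offline/training lookup: latest value at or before `timestamp`."""
        key = (entity_id, feature)
        i = bisect.bisect_right(self._times[key], timestamp)
        return self._values[key][i - 1] if i else None

    def get_latest(self, entity_id, feature):
        """Online/inference lookup: the most recent value."""
        values = self._values[(entity_id, feature)]
        return values[-1] if values else None


store = TinyFeatureStore()
store.write("user_42", "orders_last_30d", 100, 3)
store.write("user_42", "orders_last_30d", 200, 5)
```

Here a training example dated 150 would see the value 3, while a live prediction would see 5, which is exactly the consistency a feature store is meant to guarantee.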

Stage 4: Data Versioning and Lineage

In this stage, your data is versioned, for example with DVC (Data Version Control) alongside Git. To trace data lineage, you can rely on Pachyderm, which keeps a comprehensive history of how your data changed over time.
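Data versioning tools such as DVC identify data by a hash of its contents (DVC records MD5 hashes in small metafiles that are committed to Git instead of the data itself). The sketch below shows that idea with Python’s `hashlib`: identical bytes always yield the same version ID, and a lineage record ties an output dataset to the exact input versions and processing step that produced it. The function names and record layout are hypothetical, not DVC’s or Pachyderm’s format.

```python
import hashlib


def dataset_version(path: str, chunk_size: int = 1 << 20) -> str:
    """Return an MD5 digest of a file's bytes, read in chunks to bound memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def lineage_record(output_path: str, input_paths: list[str], step: str) -> dict:
    """Describe which input versions and which step produced an output dataset."""
    return {
        "output": {"path": output_path, "version": dataset_version(output_path)},
        "inputs": [
            {"path": p, "version": dataset_version(p)} for p in input_paths
        ],
        "step": step,  # e.g. the name of the script or transform that ran
    }
```

Any change to a file’s contents, even a single appended row, produces a new version ID, so a stored lineage record shows whether a model was trained on exactly the data you think it was.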

Stage 5: Data Monitoring

Once your product goes live, it needs regular upkeep to maintain data accuracy and extend its lifecycle. Platforms such as Prometheus and Grafana are commonly used to monitor performance.
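Before a dashboard can show data health, something has to compute it. Below is a minimal Python sketch of batch data-quality checks: it measures missing and out-of-range values per column and flags columns that breach a limit. The column names, expected ranges, and 5% missing-value limit are hypothetical; the resulting numbers are the kind of metric you might export to Prometheus and chart in Grafana.

```python
import math

# Hypothetical expectations; in practice these would come from the
# training data or from agreements with the data owners.
EXPECTED_RANGES = {"age": (0, 120), "income": (0, 1_000_000)}


def _is_missing(value) -> bool:
    return value is None or (isinstance(value, float) and math.isnan(value))


def data_quality_report(records: list[dict], max_missing_rate: float = 0.05) -> dict:
    """Compute per-column missing and out-of-range statistics and flag problems."""
    report = {}
    total = len(records)
    for column, (low, high) in EXPECTED_RANGES.items():
        values = [r.get(column) for r in records]
        missing = sum(1 for v in values if _is_missing(v))
        out_of_range = sum(
            1 for v in values if not _is_missing(v) and not low <= v <= high
        )
        missing_rate = missing / total if total else 0.0
        report[column] = {
            "missing_rate": missing_rate,
            "out_of_range": out_of_range,
            "alert": missing_rate > max_missing_rate or out_of_range > 0,
        }
    return report


records = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 150, "income": 48000},  # implausible age
]
report = data_quality_report(records)
```

Running such checks on every incoming batch catches upstream problems, such as a broken data feed, before they quietly degrade model accuracy.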

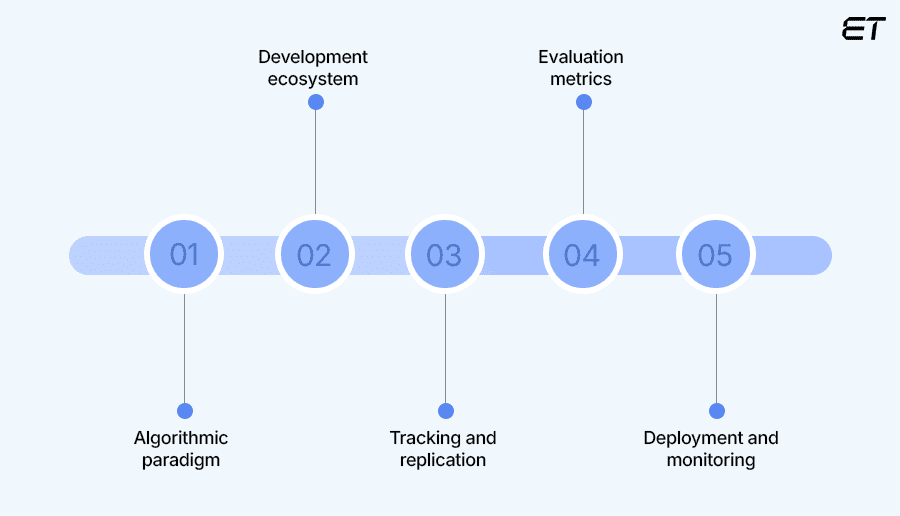

2. Model Architecting and Performance Metrics

This phase involves selecting the right machine learning and deep learning algorithms and training models to recognize patterns and make predictions.

Stage 1: Algorithmic Paradigm

Choose from machine learning libraries such as TensorFlow, PyTorch, Scikit-Learn, and MXNet, carefully weighing computational speed, ease of use, associated costs, community support, and other such criteria.

Stage 2: Development Ecosystem

Next, choose an integrated development environment (IDE), which brings editing, debugging, and running code together in one place.

Platforms such as Jupyter and Spyder prove particularly helpful for prototyping.

Stage 3: Tracking and Replication

This stage relies on specialized tools that streamline AI workflows. MLflow, Neptune, and Weights & Biases, for instance, let your team log the metrics and outputs of each experiment, providing a clear audit trail of the development process. This is crucial for debugging and iterating on models, as well as for compliance and reproducibility.

Meanwhile, containerization tools such as Docker and orchestration tools such as Kubernetes help ensure that the environment in which a model was developed and tested is replicated exactly in production.
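The logging that MLflow, Neptune, and Weights & Biases automate boils down to recording each run’s parameters, metrics, and environment. The dependency-free sketch below appends one JSON record per run and fixes the random seed so a run can be repeated exactly; the toy "score" stands in for real training, and `run_experiment` and its record fields are hypothetical. With MLflow itself, you would typically call `mlflow.log_param` and `mlflow.log_metric` inside `mlflow.start_run()` instead.

```python
import json
import platform
import random
import sys
import time
import uuid


def run_experiment(params: dict, log_path: str) -> dict:
    """Run a toy experiment and append a reproducible record to a JSONL log."""
    random.seed(params.get("seed", 0))  # fixed seed, so reruns give the same result
    n_trials = params.get("n_trials", 10)

    # Placeholder "training": a real run would train and evaluate a model here.
    score = sum(random.random() for _ in range(n_trials)) / n_trials

    record = {
        "run_id": uuid.uuid4().hex,
        "timestamp": time.time(),
        "params": params,
        "metrics": {"score": score},
        "environment": {
            "python": sys.version.split()[0],
            "platform": platform.platform(),
        },
    }
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record
```

A seed only controls randomness in your own code; pinning the Python version and dependencies, for example in a Docker image, covers the rest of the environment.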

Stage 4: Evaluation Metrics

Once your product is live, compare outcomes across experiments and data categories. Rely on automation tools such as Comet, Evidently AI, and Censius to assess data quality degradation or model drift in a repeatable way.
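Drift checks of the kind that tools such as Evidently AI automate often compare the distribution of a feature in live traffic against the training data. One common statistic is the Population Stability Index (PSI). The Python sketch below computes it with equal-width bins derived from the reference sample; the bin count and the small floor for empty bins are assumptions, and a frequently quoted rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift.

```python
import math


def population_stability_index(expected, actual, bins: int = 10) -> float:
    """PSI between a reference sample (e.g. training data) and live data.

    Bin edges come from the reference sample's range; values outside it are
    clamped into the end bins, and empty bins get a tiny floor to avoid log(0).
    """
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # guard against a constant reference sample

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            i = int((x - low) / width)
            counts[min(max(i, 0), bins - 1)] += 1
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]      # e.g. a feature at training time
live = [0.5 + i / 200 for i in range(100)]     # the same feature, shifted upward
drift = population_stability_index(reference, live)
```

Computing PSI per feature on a schedule, and alerting when it crosses your chosen threshold, turns "model deviations" into a concrete, repeatable check.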

How to Choose the Perfect AI Tech Stack for Your Business?

Selecting the right AI tech stack for your business is key to seamless operations and staying competitive. So, make an informed decision by evaluating the following criteria.

1. Understanding Your Needs: Begin by understanding your company’s goals and AI requirements to select stack components that align with your targets.

2. Cost-Effectiveness: Pick tools that fit your budget. Cloud-based solutions are often the most cost-effective option, since you pay only for the resources you use.

3. Scalability: Choose a stack that can scale as your business grows. Cloud platforms are valuable here too, because they can scale resources up or down on demand.

4. Security and Compliance: Next, prioritize stacks that offer robust security features and support compliance with the regulations that apply to your industry.

5. Expertise and Support: Lastly, consider the availability of support for the components you choose. For instance, open-source tools like TensorFlow and PyTorch have large communities that offer assistance.


To Sum Up!

Whether you’re developing a simple AI solution or a complex machine learning system, a well-designed tech stack is key to success. Choose the right set of tools, and your job is already halfway done.

However, this is easier said than done and calls for meticulous planning. We hope our checklist of essential criteria will help you select the right AI tech stack for your business.

If you’re still unsure where to begin, partnering with our team of experts can give you the competitive edge you’re striving for in launching your AI-powered solution.

Frequently Asked Questions

1. How to build an AI tech stack?

To build an AI tech stack, first define your project goals and budget, and then pick tools accordingly. Begin by setting up a reliable data infrastructure for processing and storage. Next, choose frameworks for developing and deploying your model, add experiment tracking, and factor in scalability at this point. Lastly, use automation tools to continuously monitor and maintain the model.

2. What are some of the popular machine learning frameworks used in the development of AI tech stacks?

An AI tech stack relies on machine learning frameworks for predictive analytics, turning raw data into actionable insights through complex algorithms. Some of the most widely used frameworks include TensorFlow, PyTorch, Scikit-Learn, Spark ML, Torch, and Keras.