How Does RAG Work? Your Key to Reliable Enterprise AI

- RAG combines LLMs with real-time data to give you accurate, up-to-date answers.

- It addresses the issue of outdated or incorrect AI responses by retrieving trusted information as needed.

- The RAG system comprises a vector database, retriever, generator, and orchestrator that work together.

- RAG cuts your costs, reduces errors, and removes the need for frequent model retraining.

- Top companies, such as IBM and Salesforce, utilize RAG to develop reliable and transparent AI systems.

- RAG is a smarter choice for your business, giving you AI you can trust.

Ask your company’s AI assistant a complex question, like, “What’s our latest policy on hybrid work arrangements?” Instead of making up an answer or relying on outdated data, it pulls the exact policy from your internal knowledge base and explains it to you clearly.

This isn’t magic. It’s Retrieval-Augmented Generation, or RAG.

Large language models (LLMs) are powerful but imperfect. Their main limitation? They only know what they were trained on. This means they might lack current knowledge or business-specific data, or worse, get things wrong altogether.

RAG architecture is designed to solve this problem. If you’re a decision-maker exploring ways to make AI more accurate, reliable, and grounded in your data, understanding how RAG works is a smart place to start.

Why Traditional LLMs Aren’t Enough Anymore

Most large language models, such as ChatGPT, PaLM, or Claude, are trained on massive amounts of data but have a static knowledge cutoff. They can generate impressive text but often suffer from what’s known as LLM hallucinations: confident answers that are entirely wrong.

These hallucinations pose a risk, as even a single factual error in a legal, financial, or medical scenario can lead to serious consequences. Thinking of continuously re-training large models with your updated company data? Well, that’s expensive, time-consuming, and not scalable.

This is where retrieval-augmented generation changes the game.

What is Retrieval-Augmented Generation (RAG)?

RAG is a method that combines a language model’s ability to generate responses with a retriever’s ability to fetch relevant information from external data sources. Think of it as pairing a great writer (the LLM) with an expert researcher (the retriever). The model doesn’t just guess; it looks things up before answering your question.

In simple terms, RAG is like giving your AI an open book during a test. Instead of trying to recall everything from memory, it searches your enterprise knowledge base, documentation, or latest reports in real time, then uses that data to generate a precise and context-aware response.

Understanding the RAG Architecture

Let’s start with the components that make up a RAG architecture. Before the detailed explanation of each one, here’s a quick summary.

| Component | Purpose |
| --- | --- |
| Vector DB | Stores document embeddings for fast semantic search |
| Retriever | Finds contextually relevant content using vector search |
| Generator | Produces the final response based on the query + retrieved data |
| Orchestrator | Manages the entire pipeline from input to output |

1. The Vector Database

Before RAG can retrieve anything, it needs a knowledge base. This knowledge base can refer to a collection of documents, reports, manuals, FAQs, or any business-specific content you want the model to reference.

But instead of storing this as plain text, RAG converts each document into a vector (a numerical representation of the document’s meaning). This process is called embedding.

Embeddings are created using pre-trained models, such as OpenAI’s text-embedding-ada-002 or Sentence-BERT.

This results in a high-dimensional vector that captures the semantic meaning of the text. These vectors are stored in a vector database, such as Pinecone.

You might wonder: why store documents as vectors and not plain text?

Because the system searches by meaning instead of exact keywords. This enables semantic search: finding relevant content even if it doesn’t use the exact words in the question.

2. Retriever

When a user asks a question, for example, “What’s the current parental leave policy?”, the system doesn’t guess. Instead, the retriever takes that query, converts it into a vector, and looks for the most similar documents from the vector database. Let’s see how it functions.

- It uses cosine similarity or the dot product to match the question vector with document vectors.

- This step typically retrieves the top k results (e.g., 3–5 chunks of text) that are most relevant to the query.

- Then the retriever ensures that only the most contextually relevant pieces of information reach the next step.
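To make the matching step concrete, here is a minimal sketch of cosine-similarity ranking in plain Python. The three-dimensional vectors and document IDs are made up for illustration (real embeddings have hundreds of dimensions), and a production retriever would delegate this work to a vector database.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)

def top_k(query_vector, doc_vectors, k=3):
    """Return the k (doc_id, score) pairs most similar to the query."""
    scored = [(doc_id, cosine_similarity(query_vector, vec))
              for doc_id, vec in doc_vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical 3-dimensional embeddings, invented for this example.
doc_vectors = {
    "parental_leave": [0.9, 0.1, 0.0],
    "travel_expenses": [0.1, 0.9, 0.1],
    "vpn_setup": [0.0, 0.2, 0.9],
}
query_vector = [0.8, 0.2, 0.1]  # stands in for the embedded question

results = top_k(query_vector, doc_vectors, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

With these toy numbers, the parental leave document ranks first because its vector points in nearly the same direction as the query vector.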

3. Augmented Prompt

Once the retriever finds the most relevant chunks of data, the system creates an augmented prompt. This is like preparing a cheat sheet for the LLM.

- It includes the original user query.

- Plus, the retrieved text passages.

- Optionally, an instruction like: “Answer the question using only the information below.”

Here’s a quick example of how an augmented prompt might look:

Question: What is our company’s remote work policy?

Relevant Documents:

1. Remote work is allowed up to three days a week for full-time employees.

2. Employees must notify their manager at least 24 hours before working remotely.

Please provide a concise answer based on the above information.

This augmented prompt is then sent to the LLM.
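If you want to see how such a prompt could be assembled in code, the sketch below is one simple way to do it. The function name `build_augmented_prompt` and its parameters are illustrative assumptions, not part of any specific framework.

```python
def build_augmented_prompt(question, passages, instruction=None):
    """Combine the user question, retrieved passages, and an optional instruction."""
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(passages, start=1))
    parts = [f"Question: {question}", "Relevant Documents:", numbered]
    if instruction:
        parts.append(instruction)
    return "\n\n".join(parts)

prompt = build_augmented_prompt(
    "What is our company's remote work policy?",
    [
        "Remote work is allowed up to three days a week for full-time employees.",
        "Employees must notify their manager at least 24 hours before working remotely.",
    ],
    instruction="Please provide a concise answer based on the above information.",
)
print(prompt)
```

Numbering the passages is a small design choice that pays off later: it gives the model a simple way to say which passage it relied on.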

4. Generator (LLM)

The language model (for example, GPT-4, Claude, or PaLM 2) takes the augmented prompt and generates a natural language response.

Because it’s working with real, retrieved documents, the LLM is far less likely to guess or make up facts. Instead, it uses the retrieved context to produce an accurate, fluent answer.

Bonus Tip: You can also ask the model to cite sources or explain which document it used, boosting transparency and trust in AI-generated content.

5. Orchestrator

Behind all these steps is the orchestrator, which coordinates the whole flow:

- It receives the query

- Calls the retriever

- Assembles the augmented prompt

- Sends it to the LLM

- Delivers the final response

To build this logic efficiently and securely, you can use tools like LangChain, LlamaIndex, or Haystack.
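Before reaching for a framework, it can help to see how little the orchestrator itself has to do. The sketch below is a hand-rolled illustration, not the LangChain, LlamaIndex, or Haystack API. The function `answer_query` and the three `fake_*` stand-ins are hypothetical names, so the example runs without any external service.

```python
from typing import Callable, List, Sequence

def answer_query(
    query: str,
    embed: Callable[[str], Sequence[float]],
    retrieve: Callable[[Sequence[float], int], List[str]],
    generate: Callable[[str], str],
    top_k: int = 3,
) -> str:
    """Run one query through the orchestration steps listed above."""
    query_vector = embed(query)               # receive the query and embed it
    passages = retrieve(query_vector, top_k)  # call the retriever
    context = "\n".join(f"{i}. {p}" for i, p in enumerate(passages, start=1))
    prompt = (                                # assemble the augmented prompt
        f"Question: {query}\n\nRelevant Documents:\n{context}\n\n"
        "Answer using only the information above."
    )
    return generate(prompt)                   # send to the LLM, deliver the response

# Stand-in components (assumptions for this sketch only).
def fake_embed(text):
    return [float(len(text))]

def fake_retrieve(vector, k):
    return ["Remote work is allowed up to three days a week."][:k]

def fake_generate(prompt):
    return f"(model output for a {len(prompt)}-character prompt)"

print(answer_query("What is our remote work policy?",
                   fake_embed, fake_retrieve, fake_generate))
```

Passing the components in as functions keeps the orchestrator independent of any one vendor, so you could swap the embedding model or LLM without touching the flow.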

RAG Architecture Flowchart

Here’s a quick flow of what happens in a RAG system:

[User Query]

↓

[Embed Query as Vector]

↓

[Retrieve Top k Documents via Vector Search]

↓

[Augment Query with Retrieved Content]

↓

[Generate Answer Using LLM]

↓

[Deliver Final Response with Optional Citations]

A Simple Code Snapshot (Python-like pseudocode)

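    # Retrieve and generate using RAG (pseudocode).
    # embed, vector_db, and llm are placeholders for your embedding model,
    # vector database client, and LLM client.
    query = "What's our latest travel reimbursement policy?"

    # Convert the question into a vector and fetch the 3 closest chunks.
    query_vector = embed(query)
    relevant_docs = vector_db.search(query_vector, top_k=3)

    # Join the retrieved chunks (assumed to be strings) into readable context.
    context = "\n\n".join(relevant_docs)
    prompt = f"""
    Answer the question based on the following documents:
    {context}

    Question: {query}
    """

    response = llm.generate(prompt)
    print(response)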

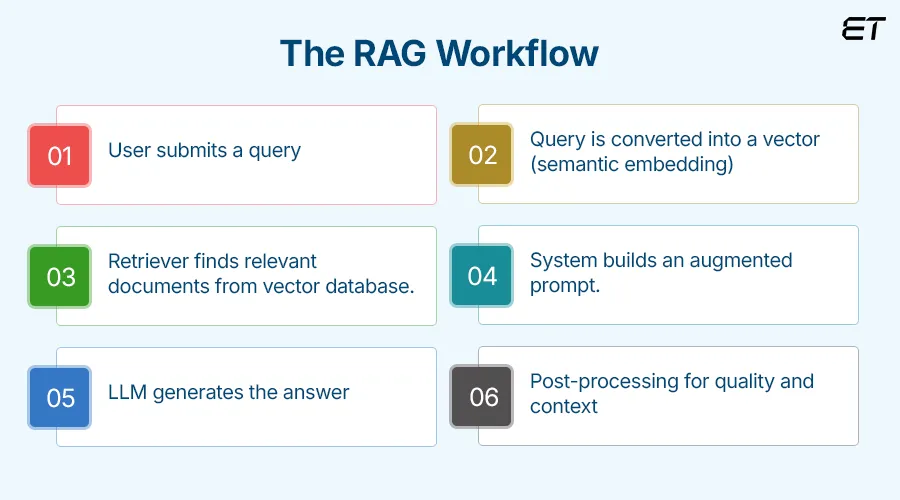

How Does RAG Work? A Step-by-Step Guide

To understand how Retrieval-Augmented Generation (RAG) works, think of it as a pipeline. Each part plays a specific role, and together, they help large language models (LLMs) deliver answers that are not just fluent but factually grounded in real data.

Whether you’re building an AI assistant for internal knowledge or powering a customer support bot, the RAG pipeline follows a predictable set of stages. Let’s walk through the steps.

Step 1: A User Asks a Question

Everything starts when a user inputs a query, like:

“What are the company’s cybersecurity protocols for remote employees?”

At this point, the system doesn’t immediately respond. Instead, it begins the retrieval and generation journey.

Step 2: Query is Converted into a Vector

Before the system can search for relevant documents, it first translates the user’s question into a vector, a numerical format that captures the semantic meaning of the text.

- This is done using an embedding model, such as OpenAI’s text-embedding-ada-002 or BERT-based encoders.

- The output is a high-dimensional vector (usually 384 to 1536 dimensions) that represents the context and intent behind the question.
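To show the shape of this step without calling a real model, here is a toy stand-in. It hashes words into a small fixed-length vector and normalizes it. Be aware that `toy_embed` is a hypothetical helper and does not capture meaning the way a trained embedding model does. It only illustrates that any text goes in and a fixed-length list of numbers comes out.

```python
import hashlib
import math

def toy_embed(text, dimensions=8):
    """Map text to a fixed-length unit vector using hashed word counts.

    This is NOT a semantic embedding. It only mimics the output shape
    (a fixed-length list of floats) that a real embedding model returns.
    """
    vector = [0.0] * dimensions
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[digest[0] % dimensions] += 1.0  # bucket the word by its hash
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else vector

query = "What are the company's cybersecurity protocols for remote employees?"
query_vector = toy_embed(query)
print(len(query_vector), [round(x, 2) for x in query_vector])
```

In a real pipeline you would replace `toy_embed` with a call to your chosen embedding model and use the same model for both documents and queries, so their vectors live in the same space.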

Step 3: The Retriever Searches the Vector Database

The vectorized query is sent to a vector database, such as Pinecone, which stores your pre-processed documents in vector format.

- The retriever compares the query vector with the document vectors in the database, usually through an approximate nearest-neighbor index instead of an exhaustive scan.

- It uses similarity scoring (like cosine similarity) to find the top-k most relevant results.

- These results typically consist of short text chunks, such as paragraphs or sections, extracted from larger documents.

For instance, if your HR document contains the line, “Employees working remotely must use a VPN,” and a user asks about remote security, the retriever will likely fetch this snippet, even if the user didn’t use the word “VPN”.
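Since the retriever returns chunks and not whole documents, those chunks have to be created when documents are indexed. The sketch below shows one common, simple approach: fixed-size word windows with some overlap. The function name and the default sizes are assumptions for illustration, and real systems often split on paragraphs or headings instead.

```python
def chunk_text(text, chunk_size=40, overlap=10):
    """Split text into word-based chunks that overlap with their neighbors."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks

# A synthetic 100-word document, used only to show the chunk boundaries.
document = " ".join(f"word{i}" for i in range(100))
chunks = chunk_text(document, chunk_size=40, overlap=10)
for i, chunk in enumerate(chunks):
    words = chunk.split()
    print(f"chunk {i}: {words[0]} ... {words[-1]}")
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, which tends to help retrieval quality.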

Step 4: System Builds an Augmented Prompt

Now that we have the most relevant pieces of information, it’s time to prepare a custom prompt for the LLM.

The system combines:

- The original question

- The retrieved context

- Optional instructions like “Answer using only the information provided.”

Here’s what an augmented prompt might look like:

Question: What are the cybersecurity policies for remote workers?

Reference Documents:

1. All employees must use multi-factor authentication when accessing the corporate network remotely.

2. VPN use is mandatory for all offsite logins to sensitive systems.

Answer based only on the above information.

This enriched prompt provides the LLM with the facts it needs, no guessing required.

Step 5: Generator (LLM) Creates the Final Response

The language model, such as GPT-4, Claude, or PaLM, now takes the augmented prompt and generates a coherent, context-aware response.

Since it’s working with actual retrieved content, the LLM is less likely to “hallucinate” or provide outdated answers. It acts more like a well-informed analyst than a general-purpose chatbot.

Some RAG systems are configured to include citations or to mention which document the information came from, adding transparency to the answer.

Step 6: Post-Processing For Quality and Trust

Some advanced RAG systems go a step further:

- Filter out irrelevant content if the retriever picked up noise.

- Summarize the retrieved documents if they’re too long for the LLM’s input size.

- Rank or re-rank results to ensure quality.

- Add metadata like timestamps or source types, for example, internal docs vs public reports.

This step helps ensure that the final output is clean, readable, and trusted by the end user.
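As a rough sketch of what such a step can look like, the function below filters out low-scoring chunks, re-ranks the rest by score, and keeps only what fits a character budget. The result shape (dicts with `text`, `score`, and `source`), the thresholds, and the sample data are assumptions for this example. Note that it skips oversized chunks where a production system might summarize them.

```python
def post_process(results, min_score=0.5, max_chars=200):
    """Filter, re-rank, and trim retrieved chunks.

    Each result is assumed to be a dict with 'text', 'score', and 'source'.
    """
    relevant = [r for r in results if r["score"] >= min_score]        # drop noise
    ranked = sorted(relevant, key=lambda r: r["score"], reverse=True)  # re-rank
    kept, used = [], 0
    for r in ranked:
        if used + len(r["text"]) > max_chars:
            continue  # skip chunks that would overflow the budget
        kept.append(r)
        used += len(r["text"])
    return kept

results = [
    {"text": "VPN use is mandatory for all offsite logins to sensitive systems.",
     "score": 0.82, "source": "internal"},
    {"text": "The cafeteria opens at 8 a.m.",
     "score": 0.12, "source": "internal"},
    {"text": "All employees must use multi-factor authentication when accessing "
             "the corporate network remotely.",
     "score": 0.91, "source": "internal"},
]

for r in post_process(results):
    print(f'[{r["source"]}] {r["score"]:.2f} {r["text"]}')
```

Keeping the `source` field attached to each chunk is what later lets the answer show where its information came from.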

What Makes RAG Worth Your Strategic Attention

Now that we’ve covered how RAG works, let’s look at why it deserves a place in your AI strategy.

1. Turn Static AI into a Knowledge Engine

Standard LLMs operate like “closed books”; they rely on training data that can go stale quickly. That’s a problem if your business is one where policies, markets, or customer needs change fast.

With RAG:

- Your AI references live or regularly updated data without retraining.

- Whether it’s your HR policy, product catalog, or compliance docs, RAG can fetch and respond using the latest version.

According to Salesforce, 63% of employees report spending too much time searching for information. RAG can cut that time by making data accessible through natural language queries.

2. Maintain Control Over What the AI Says

In regulated industries, such as finance, insurance, or healthcare, data governance is everything.

In these kinds of scenarios, RAG allows you to:

- Choose which sources your AI uses to respond.

- Reduce hallucinations by limiting the LLM to your curated content.

- Easily audit and trace the source of the AI’s answers.

Instead of relying on generic internet data, you bring the model to your private, vetted knowledge base.
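One simple way to enforce this kind of control is an allow-list applied to retrieved chunks before they reach the prompt. The sketch below assumes each chunk carries a `source` label. The source names, function names, and sample chunks are hypothetical.

```python
ALLOWED_SOURCES = {"hr_handbook", "compliance_manual"}  # hypothetical source names

def restrict_to_allowed(chunks, allowed_sources=ALLOWED_SOURCES):
    """Keep only chunks whose 'source' is on the curated allow-list."""
    return [c for c in chunks if c["source"] in allowed_sources]

def with_citations(chunks):
    """Number each chunk and tag it with its source so answers can be audited."""
    return [f"[{i}] ({c['source']}) {c['text']}"
            for i, c in enumerate(chunks, start=1)]

retrieved = [
    {"source": "hr_handbook", "text": "Remote work is allowed up to three days a week."},
    {"source": "public_forum", "text": "I heard remote work is unlimited."},
    {"source": "compliance_manual", "text": "VPN use is mandatory for all offsite logins."},
]

context_lines = with_citations(restrict_to_allowed(retrieved))
print("\n".join(context_lines))
```

Because every line in the context carries its source, an auditor can trace any statement in the final answer back to a vetted document.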

3. Save Time, Resources, and Training Costs

Fine-tuning a large model on your domain is expensive and time-consuming. Worse, you’ll need to do it again every time your data changes.

RAG simplifies this:

- No need to retrain the LLM, just update the documents in the vector database.

- Reduces your ongoing infrastructure and operation costs.

- Works well with hosted LLM APIs (such as OpenAI or Anthropic), keeping your setup lightweight.
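To illustrate the "just update the documents" point, here is a tiny in-memory stand-in for a vector database. The class `InMemoryVectorStore`, its methods, and the placeholder `length_embed` function are assumptions for this sketch. A real vector database offers a comparable insert-or-overwrite operation, though its exact API will differ.

```python
class InMemoryVectorStore:
    """A dictionary-backed stand-in for a vector database."""

    def __init__(self, embed):
        self._embed = embed
        self._records = {}  # doc_id -> (vector, text)

    def upsert(self, doc_id, text):
        """Insert a document, or overwrite the existing one with the same id."""
        self._records[doc_id] = (self._embed(text), text)

    def get_text(self, doc_id):
        """Return the stored text for a document id."""
        return self._records[doc_id][1]

def length_embed(text):
    """Placeholder embedding; a real system would call an embedding model."""
    return [float(len(text))]

store = InMemoryVectorStore(length_embed)
store.upsert("remote-policy", "Remote work is allowed up to two days a week.")
# The policy changes: re-embed and overwrite the same id. No model retraining.
store.upsert("remote-policy", "Remote work is allowed up to three days a week.")
print(store.get_text("remote-policy"))
```

The LLM itself never changes here. Only the stored document and its vector are replaced, so the next query retrieves the new policy.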

4. Enable Personalized, Context-Aware Conversations at Scale

RAG can deliver tailored answers based on:

- A customer’s purchase history

- A user’s recent activity

- A team’s department-specific guidelines

This level of awareness gives you several benefits, like:

- Better support experiences

- Higher customer satisfaction

- Stronger team productivity

For instance, an enterprise chatbot using RAG can pull a customer’s contract, service plan, and past interactions to answer “What’s included in my support package?”, something an LLM without access to that data cannot do.

5. Support Scalable Innovation Without Risking Accuracy

Many organizations fear going ‘all in’ on generative AI because of inconsistent answers or a lack of explainability.

However, with RAG:

- You build a system that adapts quickly to changing business environments.

- You maintain control and oversight over AI responses.

- You can even display sources in the output, boosting user trust.

This creates a scalable, low-risk pathway for deploying AI across various departments, including HR, Sales, Legal, Support, and more.

Real-World Use Cases

Let’s understand the practical use of RAG and how companies have benefited from it.

IBM Watsonx

IBM’s enterprise clients using IBM Watsonx needed AI outputs that were explainable, auditable, and grounded in business-specific data.

To meet that need, IBM integrated RAG architecture into Watsonx Assistant. Here’s how it works:

- Clients upload proprietary content (like user manuals or HR policies).

- The system retrieves exact document sections to support its answers.

- Answers are tagged with source references for traceability.

This helped their clients gain confidence in AI adoption with reduced hallucinations and complete transparency.

Salesforce Einstein Copilot

CRM users often needed contextual insights, such as customer history or policy information, without having to navigate multiple tabs or knowledge bases. Basic tools couldn’t provide that.

Salesforce integrated RAG into Einstein Copilot to overcome this issue. RAG helps it to:

- Pull relevant data from CRM fields, knowledge articles, and emails.

- Contextually respond to user queries within Salesforce.

- Surface suggested subsequent actions grounded in past customer interactions.

This helped Salesforce’s sales teams respond more quickly and accurately, improving productivity and customer satisfaction.

RAG vs Other LLM Workflows

Before we wrap up, let’s compare RAG with traditional and fine-tuned LLM workflows, along with the pros and cons of each.

| Feature | Traditional LLM | Fine-Tuned LLM | RAG-Enabled LLM |
| --- | --- | --- | --- |
| Knowledge Freshness | Static | Limited | Real-time from documents |
| Source Traceability | None | Limited | Full, via retrieved docs |
| Customization | Low | High | High (via your data) |
| Cost-efficiency | High (initial) | Low (expensive to train) | High (no re-training) |
| Setup Complexity | Easy | Complex | Medium |
| Risk of Hallucination | High | Medium | Low |

Traditional LLM (Closed-Book Model)

These are off-the-shelf LLMs like GPT-4 or PaLM that operate based solely on their training data.

Pros and cons of Traditional LLM

| Pros | Cons |
| --- | --- |
| No setup required. | It can hallucinate or fabricate facts. |
| Great for general knowledge and creative tasks. | Its knowledge is static and has no access to updated or internal content. |
| – | There’s no way to control or verify sources. |

For instance, if you ask a basic LLM about your internal refund policy, it may give you a plausible-sounding guess that has no grounding in your actual policy.

Fine-Tuned LLMs (Domain-Specific Training)

In this approach, you further train a base model using your company’s proprietary data.

Pros and Cons of Fine-Tuned LLM

| Pros | Cons |
| --- | --- |
| Custom-tailored knowledge is embedded into the model. | Requires lots of computing power and technical expertise. |
| Better alignment with tone and domain language. | Needs re-training whenever documents or policies change. |
| – | Still no direct source traceability. |

Tip: Training an enterprise-scale LLM can cost between $100,000 and $1M+, depending on the data and infrastructure used.

Retrieval-Augmented Generation (RAG)

RAG sits in the sweet spot between simplicity and sophistication.

Pros and Cons of Retrieval-Augmented Generation (RAG)

| Pros | Cons |
| --- | --- |
| Pulls up-to-date info from your own documents. | Additional setup is required, such as indexing and retrieval pipeline. |
| Doesn’t require re-training. | Needs clean, well-structured content for best results. |
| Offers source citations, reducing hallucinations. | – |
| Works with hosted LLMs like OpenAI, Anthropic, Cohere, etc. | – |

For example, your AI assistant retrieves your updated employee handbook and provides a real-time answer, accompanied by a link to the source.

Wrapping Up!

Generative AI alone can impress. However, without facts to back up its words, it risks leading you astray. RAG closes that gap for you. It transforms AI from a tool that guesses to a system that knows, drawing on your business’s most trusted data in real time.

If you’re someone aiming to scale AI without sacrificing accuracy or control, but don’t know where to start, then this is for you. Connect with our experts for a hassle-free experience and give RAG your best shot. It’s the foundation for AI that earns trust, supports decisions, and keeps pace with your world.

Frequently Asked Questions

1. How does RAG work technically?

RAG works by combining search and generation within a single system. First, it turns your question into a vector (a numeric format that captures meaning). Then it searches a database of document vectors to find the most relevant information. Finally, it feeds both the question and the found content to an AI model, so the answer is accurate and based on real data.

2. How does RAG work step by step?

RAG follows a simple flow:

- It converts your question into a vector.

- It finds matching documents from a vector database.

- It creates a prompt that combines your question with the relevant documents.

- It generates a clear answer using the AI model.

Each step ensures the correct information backs the response.

3. Does ChatGPT use RAG?

The regular ChatGPT doesn’t use RAG on its own. However, with plugins, APIs, or custom setups, it can function like a RAG system by pulling in external information before responding.

4. Is RAG a grounding technique?

Yes! RAG is a grounding technique because it helps AI provide answers based on real, trusted sources, rather than relying solely on memory.