Mastering Performance Testing: A Detailed Walkthrough

Ensuring that a product will function as planned when it reaches the market is a crucial step in the product development process.

Software applications released with subpar performance risk missing sales targets and earning a negative reputation. Performance testing aims to find and remove the bottlenecks that slow an application down.

A very simple example of performance testing is examining the application’s ability to manage thousands of users signing in simultaneously or even thousands of users using the app and doing various tasks.

According to Statista, the number of mobile app downloads is rising annually. However, this does not imply that you can easily attract users to your application. Acquiring new leads requires time, and a website or application that loads slowly can quickly lose users.

Automated performance testing helps ensure that your application won’t crash when many users visit it simultaneously. It also assists you in fixing bugs and substantiating marketing claims.

By continuously providing excellent performance, this proactive strategy not only reduces the time and expenses related to post-launch fixes but also fosters customer trust.

Let’s quickly go over some incredible advantages of this strategy:

- Identifies bottlenecks to enhance application response times.

- Ensures systems handle high loads without crashing.

- Delivers a smoother, faster experience for users.

- Prevents costly downtime and performance issues.

- Provides data on how well the system scales with increased traffic.

- Helps detect potential issues before they impact production.

After reading these benefits, it is clear that one of the cornerstones of creating outstanding software is implementing a successful performance testing strategy, as this impacts user acceptance and advocacy.

Let’s explore this detailed guide covering performance testing, its concept, objectives, and everything you must know!

With the knowledge we’ll present in this post, you’ll be able to make wise choices that will increase the reliability and efficiency of your systems and enhance the user experience.

What is Performance Testing?

As the name implies, performance testing is a procedure to evaluate how well your application performs. It’s a type of software testing used to assess a program’s performance under various scenarios. In this context, performance encompasses several factors, including responsiveness, speed, scalability, and stability under varying traffic and load conditions.

Employing this tactic, a leading QA and software testing service provider may guarantee software quality and prepare an application for market release.

During performance testing, products (software applications) are run in settings that simulate real-world usage. The product’s behavior is then observed and examined to assess how it performs, spot any problems or bottlenecks, and determine its capacity to manage the anticipated workload.

Let’s understand performance testing in software testing with a simple example:

Think of an online store getting ready for Black Friday deals. In this case, performance testing involves creating virtual users to simulate thousands of concurrent consumers, pushing the site over typical traffic limitations, and finding potential problems. So, what needs to be done here?

- Simulating high-traffic scenarios to verify that the site manages enormous user loads successfully.

- Determining whether increasing the number of servers can meet the demand.

- Guaranteeing the stability of the website throughout prolonged periods of high traffic.

All the above tests assist in locating and fixing performance problems, ensuring that the website functions consistently during high-traffic periods and offers customers a seamless experience.
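The idea of "virtual users" can be sketched with nothing more than Python's standard library. This is a minimal illustration, not a replacement for a real load testing tool: `handle_request` is a hypothetical stand-in for one user action, and the 10 ms delay and the user counts are assumptions chosen only to keep the example quick to run.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> float:
    """Hypothetical stand-in for one user action (e.g. loading a product page)."""
    start = time.perf_counter()
    time.sleep(0.01)  # assumed server response time of about 10 ms
    return time.perf_counter() - start


def simulate_users(user_count: int, max_workers: int = 50) -> list[float]:
    """Run user_count simulated requests concurrently and return their latencies."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(handle_request, range(user_count)))


latencies = simulate_users(200)
print(f"{len(latencies)} requests, slowest took {max(latencies):.3f}s")
```

In a real test, the stand-in function would issue actual requests against a test environment, and a dedicated tool would handle ramp-up, reporting, and distribution across machines.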

What evaluation criteria are used in performance testing?

Many organizations are confident that their products are high quality, and there is no need to check their caliber repeatedly. But if you are not checking the performance of your software before hitting the market, then I must say you are shooting in the dark.

Why am I stating this?

You will better comprehend it when you understand the intent of performance testing or what performance testing measures.

Your software can only be as well-liked and reliable as its functionality permits. So here are the main goals of performance testing that you must know:

Key Objectives of Performance Testing

| Objective | Description |
| --- | --- |
| Assess Speed and Responsiveness | Evaluate how quickly an application responds to user inputs and processes transactions, ensuring it meets performance expectations under normal and peak conditions. |
| Ensure Stability | Verify that the application remains stable and functional under varying loads, including normal, peak, and stress conditions, to prevent crashes and downtime. |
| Validate Reliability | Ensure the application performs consistently over time, including during long-term usage or continuous operation, to identify issues like memory leaks or resource exhaustion. |
| Measure Scalability | Test how well the application scales with increased user loads and data volumes, ensuring it can handle growth without degradation in performance. |
| Improve User Experience | Address any performance issues that could negatively impact user satisfaction and engagement to guarantee a seamless and responsive user experience. |
| Identify Bottlenecks | Detect and diagnose performance issues such as slow queries, resource limitations, or inefficient code that could hinder the application’s performance. |
| Optimize Resource Usage | Analyze how efficiently the application utilizes system resources, such as CPU, memory, and network bandwidth, to enhance overall performance and reduce operational costs. |

Now that you know why performance testing is necessary, it’s also important to know when to perform it to achieve the desired results.

When to Use Performance Testing: Key Scenarios and Triggers

Because performance testing is non-functional testing, it is most meaningful once the application is functionally stable. It should be done during both the development and deployment stages to guarantee application dependability.

During the development phase, performance testing helps identify and resolve issues early, such as slow response times or bottlenecks, ensuring the application performs well before reaching users.

Once the software has reached its final form, it moves on to deployment. Many users, typically hundreds or thousands, then download the app and begin using it, so it’s critical to monitor performance at this point.

Moreover, performance testing must be used in the scenarios given below to ensure reliability, stability, and a positive user experience.

- Before Launching a New Application: To ensure the application can handle expected user loads and performs well under various conditions to avoid issues post-launch.

- During Peak Usage Periods: To evaluate the system’s ability to manage high traffic volumes during promotional events or seasonal spikes.

- After Major Code Changes: To test performance following significant updates or new features to identify and address potential performance impacts.

- Before Scaling Up: To assess the application’s performance when adding more resources or expanding infrastructure to ensure it scales effectively.

- Post-System Integration: To verify performance after integrating with other systems or services to detect adverse effects on application performance.

- In Response to User Feedback: To address performance complaints or issues users report to improve the overall user experience.

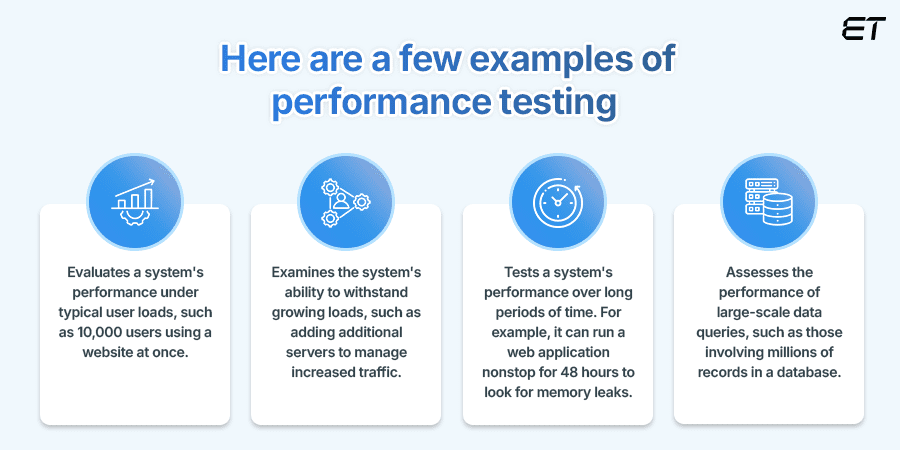

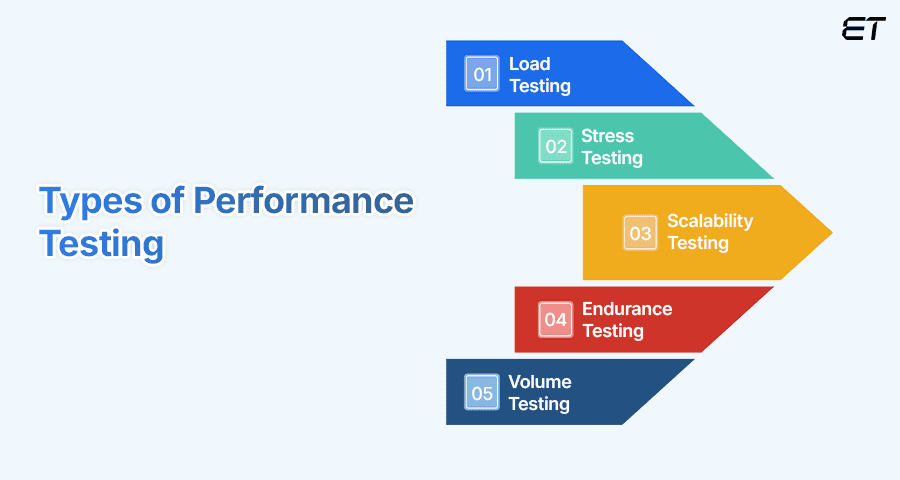

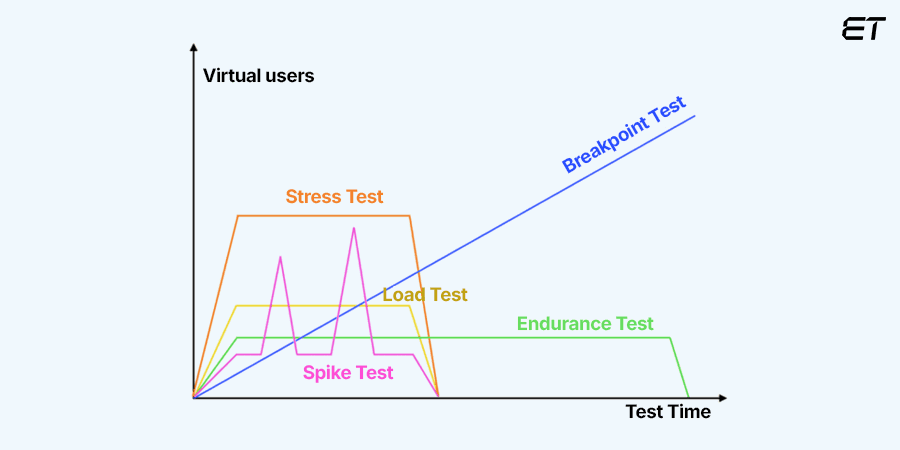

Understanding the Various Types of Performance Testing

There are many types of performance testing, and they all focus on distinct facets of an application’s performance. While some tests are helpful for ad hoc edge case testing, others are meant to evaluate baseline performance. Let’s explore all of them.

- Load Testing

Load testing measures how an application performs under expected user loads. It assists in identifying bottlenecks and figuring out how many users or transactions the system can process at once. The goal is to find performance bottlenecks before the software product is released into the market.

There are two types of load testing:

Spike Testing

This type of load testing examines how well the system can manage unexpected surges in traffic. Its main objective is to evaluate the application’s response to a sudden, significant rise in load caused by users.

Soak Testing

Soak testing involves running an application under normal load for an extended period to identify issues like memory leaks, performance degradation, or resource exhaustion over time.
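The difference between these load shapes is easier to see as numbers. The sketch below generates the number of simulated users per time step for a gradual load ramp, a spike, and a soak run. The function names and user counts are illustrative assumptions and are not tied to any particular tool.

```python
def ramp_profile(peak_users: int, steps: int) -> list[int]:
    """Load test shape: climb evenly up to the expected peak."""
    return [peak_users * (i + 1) // steps for i in range(steps)]


def spike_profile(base_users: int, spike_users: int, steps: int, spike_at: int) -> list[int]:
    """Spike test shape: steady baseline with one sudden surge."""
    return [spike_users if i == spike_at else base_users for i in range(steps)]


def soak_profile(users: int, steps: int) -> list[int]:
    """Soak test shape: the same normal load held for a long time."""
    return [users] * steps


print(ramp_profile(1000, 5))           # [200, 400, 600, 800, 1000]
print(spike_profile(100, 2000, 6, 3))  # [100, 100, 100, 2000, 100, 100]
print(soak_profile(300, 4))            # [300, 300, 300, 300]
```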

- Stress Testing

This type of testing checks how the application behaves when pushed beyond its normal operating capacity. The primary goal of stress testing is to identify the software application’s breaking point. It entails loading the product more and more heavily to determine how much traffic it can handle before it fails, and how it recovers afterwards.

- Scalability Testing

Scalability testing measures how well a software program can scale up to accommodate a rise in user load. The primary goal is to determine whether the system will effectively respond to increasing data and traffic. Usually, the objective is to estimate the cost of scaling up infrastructure.

Scalability testing includes:

- Vertical Scaling: Evaluates performance improvements by adding resources (CPU, memory) to a single server.

- Horizontal Scaling: Tests how well the system handles load distribution by adding more servers or instances.

- Auto-Scaling: Assesses the system’s ability to automatically adjust resources based on current demand.
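One simple way to express a scalability result is scaling efficiency: the throughput actually measured after scaling, divided by the throughput that perfect linear scaling would have delivered. The sketch below shows the arithmetic; the throughput figures and node counts are made-up example values.

```python
def scaling_efficiency(base_throughput: float, base_nodes: int,
                       scaled_throughput: float, scaled_nodes: int) -> float:
    """Ratio of observed throughput to ideal linear throughput (1.0 = perfect scaling)."""
    ideal = base_throughput * (scaled_nodes / base_nodes)
    return scaled_throughput / ideal


# Example: 2 servers handled 500 requests/s; 4 servers handled 900 requests/s.
efficiency = scaling_efficiency(500.0, 2, 900.0, 4)
print(f"{efficiency:.2f}")  # 0.90
```

An efficiency well below 1.0 suggests that something other than server count, such as a shared database or a lock, is limiting throughput.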

- Endurance Testing

Endurance testing is closely related to soak testing, and the two terms are often used interchangeably. It focuses on the system’s long-term behavior under a steady load, which may entail running the application nonstop for a few days while monitoring its stability. The aim is to catch problems that only appear over time, such as memory leaks or a gradual decline in performance.
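A memory leak of the kind endurance testing looks for can be illustrated with Python's built-in `tracemalloc` module. This is a toy sketch: `leaky_operation` and `clean_operation` are hypothetical handlers invented for the example, and a real endurance test would watch the memory of the whole application over hours or days rather than a single function.

```python
import tracemalloc

_cache: list[bytes] = []


def leaky_operation() -> None:
    """Hypothetical handler that keeps every buffer it allocates."""
    _cache.append(bytes(1024))


def clean_operation() -> None:
    """Hypothetical handler whose buffer is freed when it returns."""
    _ = bytes(1024)


def memory_growth(operation, iterations: int) -> int:
    """Return how many bytes of traced memory remain allocated after the run."""
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    for _ in range(iterations):
        operation()
    after, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


clean_growth = memory_growth(clean_operation, 1000)
leaky_growth = memory_growth(leaky_operation, 1000)
print(f"clean: {clean_growth} bytes retained, leaky: {leaky_growth} bytes retained")
```

The leaky handler retains roughly 1 KB per call, so its retained memory keeps growing with the number of iterations, which is the pattern to look for in a long-running test.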

- Volume Testing

Volume testing involves storing a large amount of data in a database and observing how the software system behaves overall. The aim is to determine how well the application performs on various database volumes. For example, it involves querying a database with millions of records to ensure performance remains acceptable under high data loads.
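The database example above can be tried on a small scale with Python's built-in `sqlite3` module. The sketch below is an assumption-laden illustration: the `orders` table, its columns, and the row counts are hypothetical, and an in-memory SQLite database will not behave like a production database server.

```python
import sqlite3
import time


def build_orders_table(row_count: int) -> sqlite3.Connection:
    """Create an in-memory table (hypothetical 'orders' schema) with row_count rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
    )
    rows = ((i, i % 1000, i * 0.5) for i in range(row_count))
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def time_lookup(conn: sqlite3.Connection, customer_id: int) -> tuple[int, float]:
    """Return (matching row count, elapsed seconds) for one customer lookup."""
    start = time.perf_counter()
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE customer_id = ?", (customer_id,)
    ).fetchone()
    return count, time.perf_counter() - start


for volume in (1_000, 100_000):
    conn = build_orders_table(volume)
    count, elapsed = time_lookup(conn, 42)
    print(f"{volume:>7} rows: {count} matches in {elapsed * 1000:.2f} ms")
    conn.close()
```

Running the same query against growing data volumes shows whether query time stays acceptable or whether an index or schema change is needed.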

How Do You Select the Performance Testing Method for Your Application?

To decide the right type of performance testing for your project, start by defining your objectives. Then, assess the specific requirements of your project, such as peak usage patterns, expected growth, and potential stress scenarios, to choose the most suitable testing type.

Expert software testing companies suggest:

- It is best to perform load testing for average user loads.

- Stress testing will be useful to determine the system’s breaking point.

- To ensure scalability as traffic grows, pick scalability testing.

- Endurance testing is essential for long-term stability.

- Volume testing is helpful if managing enormous amounts of data is an issue.

Basically, your objective determines the kind of performance test to use. Considering the previous example, an e-commerce application should evaluate its performance before Black Friday using a spike test instead of a load or endurance test.

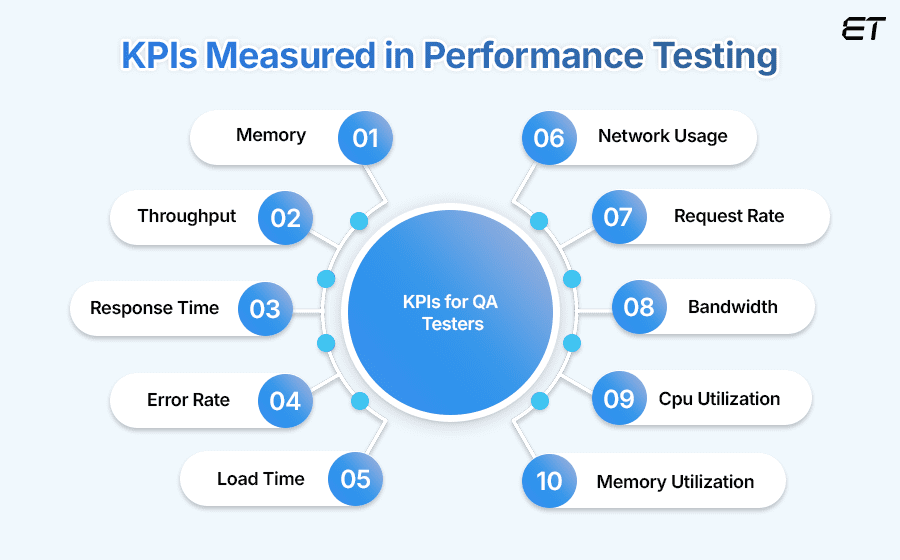

Top Performance Testing Metrics to Track for Optimal Application Performance

Performance metrics are quantitative measurements used to assess an application’s efficiency and responsiveness under various conditions. They show how the application performs relative to its predetermined performance requirements.

When assessing the outcomes of testing, the following measures, also known as Key Performance Indicators (KPIs), are useful:

- Memory

This indicates how much RAM the system is currently utilizing. It is used to find any possible memory-related problems that might be causing performance issues.

- Throughput

Refers to the quantity of requests or transactions that the system handles in a specific time frame. Increased throughput signifies enhanced system efficiency and capacity.

- Response Time

Measures the time a system takes to respond to a request, indicating the speed and efficiency of the application. Lower response times generally reflect better performance and user experience.

- Error Rate

The percentage of requests resulting in errors compared to the total number of requests. This metric includes:

- Total Errors: The absolute number of failed requests.

- Error Rate Percentage: The proportion of errors relative to total requests, providing insight into application reliability.

- Load Time

The amount of time it takes for a page or program to load is known as the load time. It’s used to find any possible problems that might be contributing to sluggish website loads.

- Network Usage

Measures the amount of data sent and received over the network during performance testing. Efficient network usage ensures optimal data transfer rates and minimizes congestion.

- Request Rate

Measures the number of requests the system receives in a given amount of time. A higher request rate indicates increasing demand, and tracking it alongside response time shows how well the system manages heavier loads.

- Bandwidth

The maximum rate at which data can be transmitted over the network. Sufficient bandwidth is essential for handling high volumes of traffic and maintaining performance.

- CPU & Memory Utilization

Evaluates the proportion of available CPU and memory that the application uses. Keeping utilization within healthy limits prevents the system from overloading and ensures efficient resource consumption. A sustained rise in CPU utilization may point to a problem, such as inefficient code, that is degrading performance.
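Several of these metrics can be derived from the same raw data: one record per request with its latency and whether it succeeded. The sketch below computes throughput, average and 95th-percentile response time, and error rate. The `Sample` structure and the example values are assumptions made for illustration, and the percentile uses the simple nearest-rank method.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    latency_ms: float
    ok: bool


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceiling of pct * n / 100
    return ordered[rank - 1]


def summarize(samples: list[Sample], duration_s: float) -> dict[str, float]:
    """Compute throughput, response-time statistics, and error rate for one test run."""
    latencies = [s.latency_ms for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    return {
        "throughput_rps": len(samples) / duration_s,
        "avg_ms": sum(latencies) / len(latencies),
        "p95_ms": percentile(latencies, 95),
        "error_rate_pct": 100 * errors / len(samples),
    }


# 20 made-up requests over 4 seconds, one of which failed.
samples = [Sample(100 + 10 * i, ok=(i != 7)) for i in range(20)]
print(summarize(samples, duration_s=4.0))
```

Percentiles such as p95 are usually more informative than the average, because a few very slow requests can hide behind a healthy-looking mean.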

Find The Best Performance Testing Tools for 2024

The market is filled with various performance testing tools. Choosing a tool that fits your needs is crucial if you want to get the desired results. Here is a list of the testing tools that are most frequently utilized.

| Testing Tools | Description |
| --- | --- |
| Apache JMeter | It is an open-source tool for load testing and performance measurement, widely used for its flexibility and ease of use. It supports various protocols, such as HTTP, FTP, and JDBC. |
| Locust | It is an open-source tool for load testing written in Python. It allows for scalable and distributed testing and focuses on ease of use and customization. |
| Gatling | An open-source tool designed for high-performance load testing with a focus on HTTP protocols. It offers powerful scripting capabilities and excellent reporting features. |
| Dynatrace | It offers end-to-end performance monitoring and testing, AI-driven insights, and automated root cause analysis for infrastructure and application performance. |
| K6 | It is an open-source, developer-friendly performance testing tool that focuses on ease of use and integrates well with modern DevOps workflows. It supports scripting in JavaScript. |
| BlazeMeter | It is a cloud-based performance testing tool that integrates with Apache JMeter and offers scalable load testing, real-time reporting, and seamless CI/CD integration. |
| NeoLoad | It is a performance testing tool by Neotys, designed for web and mobile applications. It supports continuous testing with integration into CI/CD pipelines and provides in-depth analytics. |
| WebLoad | It is a commercial performance testing tool that supports a wide range of protocols and provides powerful scripting capabilities and real-time analytics. |

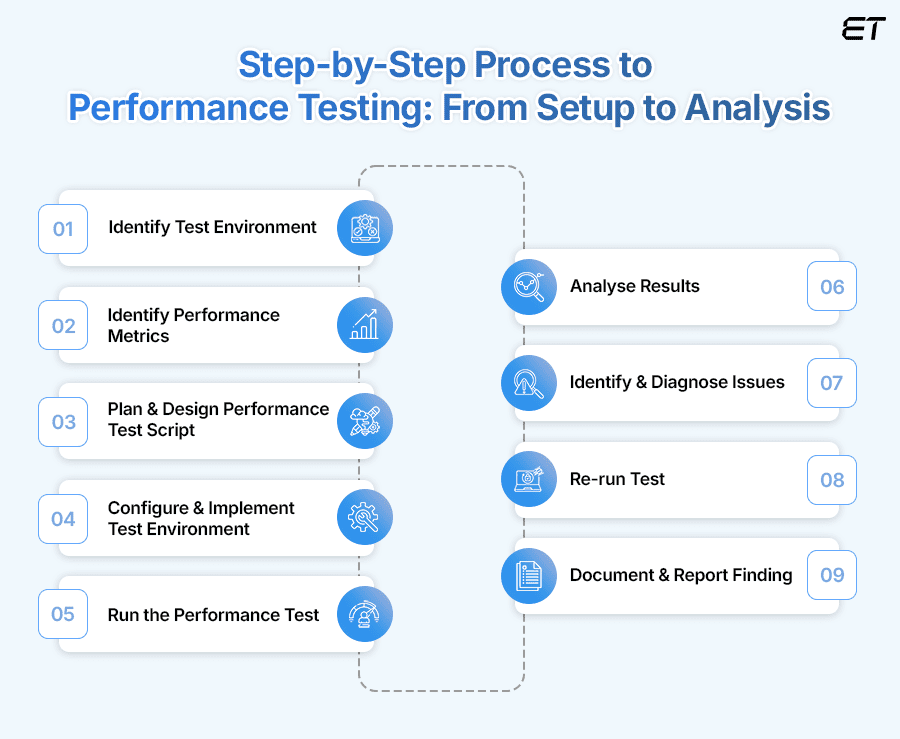

How to Conduct Performance Testing: Essential Steps for Accurate Results

Performance testing is crucial for ensuring that an application performs well under various conditions. Here’s a structured approach used by our proficient testing team to conduct performance testing effectively. By following these steps, you can ensure that your performance testing is thorough and effective, ultimately leading to a more reliable and efficient application.

- Identify Test Environment

Start by outlining the goals of your performance evaluation precisely. Decide what you want to accomplish, such as verifying system stability, scalability, or response times. Then, outline the scope of the testing process by specifying which application components, features, and user scenarios will be tested to ensure that every important facet of the application gets assessed.

Objectives might include:

- Ensuring the application handles peak loads efficiently.

- Validating that response times meet performance criteria.

- Identifying performance bottlenecks.

The scope must include:

- Key functionalities and workflows.

- Different user roles and scenarios.

- Integration points with other systems.

- Identify Performance Metrics

Decide on the objectives and metrics that will be used to measure the tests’ performance. Establish the constraints, objectives, and cutoff points to prove the test’s success. The project specifications will serve as the primary source of criteria, but testers should have sufficient authority to establish a more comprehensive collection of tests and benchmarks.

According to the eLuminous testing team, the simplest approach to accomplish this is to refer to the project specs and software expectations. As a result, our testers can define acceptable system performance by determining test metrics, benchmarks, and thresholds.
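Once metrics and thresholds are agreed, they can be written down in a form that a script can check. The sketch below is one possible shape for that, not a prescribed format: the metric names and limit values are hypothetical, and real limits should come from the project specification.

```python
# Hypothetical upper limits; real values come from the project specification.
MAX_LIMITS = {
    "p95_ms": 800.0,
    "error_rate_pct": 1.0,
}
# Hypothetical lower limit for throughput.
MIN_THROUGHPUT_RPS = 50.0


def evaluate(results: dict[str, float]) -> list[str]:
    """Return a list of threshold violations; an empty list means the run passed."""
    failures = []
    for metric, limit in MAX_LIMITS.items():
        if results[metric] > limit:
            failures.append(f"{metric}: {results[metric]} exceeds limit {limit}")
    if results["throughput_rps"] < MIN_THROUGHPUT_RPS:
        failures.append(
            f"throughput_rps: {results['throughput_rps']} is below minimum {MIN_THROUGHPUT_RPS}"
        )
    return failures


run = {"p95_ms": 950.0, "error_rate_pct": 0.2, "throughput_rps": 75.0}
for failure in evaluate(run):
    print(failure)
```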

- Plan & Design Performance Test Script

Consider how usage will inevitably vary, and then design test cases that cover the key use cases. Determine the metrics that need to be recorded and design the tests accordingly. In this phase, we access the test server and install the necessary tools on the test engineer’s machine. After that, we write a script based on the test cases and run it with the tool.

We will move on to the next phase after finishing the script.

- Configure & Implement Test Environment

After the test scripts have been written, the testing environment will be set up prior to execution. This requires Configuration Management, which involves managing and controlling the setup of test environments, including hardware, software, network settings, and test data. It ensures consistency and reproducibility of test conditions, facilitates accurate comparisons of performance results, and simplifies troubleshooting and optimization processes.

Ensure the test environment closely mirrors the production environment. This includes:

- Hardware: Match the specifications of production servers, including CPU, RAM, and storage.

- Software: Use the same operating system, application servers, databases, and middleware versions as in production.

- Network: Configure network settings, bandwidth, and latency to replicate production conditions.

- Run the Performance Test

Run the previously designed tests. Where tests do not compete for the same environment resources, run them in parallel to save time without skewing the results.

Conduct the performance tests according to your test cases. This involves:

- Load Testing: Gradually increase the load to assess system performance under varying conditions.

- Stress Testing: Push the system beyond its limits to identify breaking points and observe system behavior under extreme stress.

- Endurance Testing: Run tests over an extended period to evaluate how performance holds up over time.
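The "gradually increase the load" approach can be sketched as a loop that raises the user count step by step until a response-time limit is breached. To keep the example self-contained, the system under test is replaced by `simulated_p95_ms`, a made-up latency model; the capacity, step size, and limit are all assumptions.

```python
from typing import Optional


def simulated_p95_ms(users: int, capacity: int = 1000) -> float:
    """Hypothetical latency model: flat at first, rising sharply near capacity."""
    if users >= capacity:
        return float("inf")  # the simulated system stops responding
    return 100.0 / (1 - users / capacity)


def find_breaking_point(start: int, step: int, limit_ms: float,
                        max_users: int) -> Optional[int]:
    """Raise the load step by step; return the first user count that breaches limit_ms."""
    users = start
    while users <= max_users:
        if simulated_p95_ms(users) > limit_ms:
            return users
        users += step
    return None  # the limit was never breached within max_users


print(find_breaking_point(start=100, step=100, limit_ms=450.0, max_users=2000))
```

In a real run, the latency model would be replaced by measurements taken at each load step, with a pause between steps so the system can stabilize.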

- Analyze Results

Gather performance data from the tests, including metrics such as response times, error rates, and resource usage. Ensure that the data is comprehensive and covers all aspects of the test scenarios.

Then, analyze the collected data to evaluate performance against your benchmarks. Look for:

- Performance Trends: Identify trends and patterns in the data.

- Bottlenecks: Detect any performance issues or bottlenecks that affect system efficiency.

- Deviations: Compare actual performance against expected results and investigate any deviations.
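Comparing a run against a benchmark can be automated with a small helper like the one below. It is a sketch under stated assumptions: every metric is treated as "lower is better", the 10% tolerance is an arbitrary example, and the baseline and current figures are made up.

```python
def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance_pct: float = 10.0) -> dict[str, float]:
    """Return metrics that grew by more than tolerance_pct versus the baseline.

    Assumes every metric is 'lower is better' (latency, error rate).
    """
    regressions = {}
    for metric, base_value in baseline.items():
        change_pct = 100 * (current[metric] - base_value) / base_value
        if change_pct > tolerance_pct:
            regressions[metric] = round(change_pct, 1)
    return regressions


baseline = {"avg_ms": 200.0, "p95_ms": 500.0, "error_rate_pct": 0.5}
current = {"avg_ms": 210.0, "p95_ms": 650.0, "error_rate_pct": 0.5}
print(find_regressions(baseline, current))  # {'p95_ms': 30.0}
```

Here the average barely moved, but the 95th percentile grew by 30%, which is the kind of deviation worth investigating.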

- Identify & Diagnose Issues

The bottleneck (bug or performance issue) will now be determined. It may arise from several factors, such as:

- programming errors

- hardware malfunctions (hard drive, RAM, processor)

- network problems

- operating system problems

After identifying the bottlenecks, we resolve them. We then rerun the test to verify that the fixes hold and that the software is operating at peak efficiency.

- Re-run Test

After the bottlenecks are fixed, we rerun the test scripts to check whether the results now meet the expected outcome. To confirm that the optimizations have fixed the problems and that performance has improved, we compare the new results with the earlier benchmarks.

- Document & Report Findings

Create a comprehensive report for stakeholders, summarizing the test results, issues encountered, and actions taken. Provide actionable insights and recommendations to guide further improvements.

Prepare detailed documentation of the performance testing process, including:

- What was tested and how?

- Results and benchmarks.

- Bottlenecks and their resolutions.

- Suggested improvements and optimizations.

Based on this report, the tester decides whether another round of testing is needed.

Essential Checklist While Running Performance Tests

The following are some recommended strategies for carrying out impactful and effective performance testing:

Start Early: Incorporate performance testing early in the development lifecycle to identify and address issues sooner. This “shift-left” approach helps avoid costly fixes and ensures that performance considerations are integrated into the design and development phases.

Diversify Test Scenarios: Include a variety of test scenarios, such as load testing, stress testing, endurance testing, and spike testing. This breadth ensures that the application is tested under various conditions and helps identify different types of performance issues.

Select Appropriate Tools: Utilize performance testing tools that align with your application’s technology stack and testing needs. Tools like Apache JMeter, LoadRunner, Gatling, and others offer various features for different types of performance tests.

Ensure Accurate Test Environments: Set up test environments that closely replicate the production environment in terms of hardware, software, and network configurations. This ensures that test results are accurate and relevant.

Gradually Increase Load: Begin with light loads and progressively increase the intensity. This gradual approach helps in understanding performance thresholds and identifying bottlenecks more effectively.

Is Performance Testing Worth It? Exploring the Pros and Cons

Every strategy has its upsides and downsides, and performance testing is no exception.

Performance testing is essential for ensuring software stability, reliability, and scalability under load. It identifies bottlenecks, improves user experience, and prevents revenue loss by minimizing downtime. However, it can be time-consuming, expensive, and require specialized skills and tools.

Here’s a concise table of the pros and cons. Go through it before making any final decision.

| Pros | Cons |
| --- | --- |
| Reduces Downtime: Proactively addresses potential issues before they impact users. | Environment Variability Issues: Differences between testing and production environments can affect the accuracy of results. |
| Enhances Security: Tests how the system behaves under load, helping to prevent security breaches. | High Cost of Setup and Maintenance: Initial setup and ongoing maintenance require substantial investment. |
| Ensures Stability and Reliability: Verifies the system can handle the expected load without failures. | Extensive Data Analysis Required: Interpreting performance test results can be complex and time-consuming. |
| Prevents Revenue Loss: Minimizes downtime and performance-related losses, protecting revenue. | Difficult to Simulate Real-World Conditions Fully: It’s challenging to replicate all potential user interactions and conditions. |
| Facilitates Capacity Planning: Aids in understanding hardware and software needs for future growth. | Overemphasis on Performance Over Other Factors: Can overshadow other critical aspects of development, such as functionality and user interface. |
| Improves User Experience: Ensures applications run smoothly and responsively. | Requires Specialized Tools and Skills: Needs expertise and tools that can be expensive. |

Automated Performance Testing for Faster, Reliable Software Releases

Every experienced performance tester tries to keep testing from becoming a bottleneck in the Agile development process. The way to do that is to run tests automatically and, whenever feasible, to automate test design and maintenance tasks as well.

Automating performance testing involves creating a reliable and consistent procedure that verifies reliability at various phases of the development and release cycle. For example, you might manually start load tests and track their effects in real-time, or you could use CI/CD pipelines and nightly processes to run performance tests.
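In a CI/CD pipeline, a performance run usually ends with a pass or fail signal that the pipeline can act on. The sketch below shows one way such a gate might look: it reads a JSON summary and turns it into a process exit code. The JSON field names and the limits are assumptions made for this example and do not match the output format of any specific tool.

```python
import json


def gate(summary_json: str, max_p95_ms: float, max_error_rate_pct: float) -> int:
    """Return a process exit code: 0 if the run meets both limits, 1 otherwise."""
    summary = json.loads(summary_json)
    passed = (
        summary["p95_ms"] <= max_p95_ms
        and summary["error_rate_pct"] <= max_error_rate_pct
    )
    return 0 if passed else 1


# Hypothetical summary produced by a nightly performance run.
nightly_run = '{"p95_ms": 640.0, "error_rate_pct": 0.4}'
exit_code = gate(nightly_run, max_p95_ms=800.0, max_error_rate_pct=1.0)
print("PASS" if exit_code == 0 else "FAIL")
```

A pipeline step would pass this value to `sys.exit`, so that a failed gate stops the release.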

Automation in performance testing does not eliminate the need for manual test execution. Rather, it is about organizing performance tests so that they run continuously as part of your Software Development Life Cycle (SDLC).

The main objective of incorporating automation into performance testing is to increase productivity and accelerate the entire process. But there’s more to it!

Benefits of Automated Performance Testing:

- Speeds up testing processes and reduces manual effort.

- Ensures repeatable and accurate results.

- Easily tests different scenarios and load levels.

- Lowers long-term testing costs by minimizing manual work.

- Identifies issues early in the development cycle.

- Tests various performance aspects systematically.

With these benefits, no one can deny that automating testing at every step of the application lifecycle is the future of performance testing.

Performance Testing Tips for Web & Mobile Applications: Notes From Experts!

The quality of the application’s code is not the only factor that affects how well a web or mobile app performs; external factors also play a role. Server infrastructure, for example, is essential. Inadequate setups or insufficient server resources can cause a slow website. When hosted on an underpowered server, even the most optimized website might suffer.

Testing the performance of web and mobile applications can make all the difference in the user experience, because performance problems can be found and fixed before they adversely affect users.

The process of performance testing is quite similar for both web and mobile apps. It starts by defining metrics like load time and responsiveness, simulating various user loads, and monitoring performance under stress. Then, the results are analyzed to find bottlenecks, the application is optimized accordingly, and it is retested to confirm the improvements.

But do you know there are a few essential considerations to get the most out of these processes? What are those? Don’t worry—we have covered everything for you!

Here are a few tried-and-true tips for web and mobile app performance testing from the desk of the skilled QA team at eLuminous:

Performance Testing Result-Driven Tips for Web Applications

- Pay attention to essential indicators like battery consumption, response times, load times, and network utilization.

- Utilize performance testing tools like Appium, Firebase Performance Monitoring, or TestComplete for accurate insights.

- Test various devices, OS versions, and network configurations to account for user experiences.

- During testing, keep an eye on CPU, memory, battery, and network utilization to spot resource limitations.

- To determine areas for improvement, use performance data to pinpoint slowdowns, crashes, and bottlenecks.

- Put performance improvements into practice and retest to make sure the modifications have a good effect on performance.

- Incorporate feedback from real users to address performance issues and enhance the user experience.

- To verify stability, evaluate the app’s performance under extreme load and irregular usage patterns.

- Use real-time monitoring tools to keep your app running at its best and identify performance problems early on.

Performance Testing Result-Driven Tips for Mobile Applications

- Define what to measure—load times, responsiveness, battery usage, and network performance.

- Use tools like Appium, Firebase Performance Monitoring, or LoadRunner for comprehensive testing.

- Test under different network conditions (e.g., 3G, 4G, Wi-Fi) and user scenarios to reflect real-world usage.

- Use monitoring tools for ongoing performance tracking to detect and resolve issues proactively.

- During tests, track CPU, memory, battery drain, and network usage to identify inefficiencies.

- Evaluate app performance under peak load conditions to ensure stability and scalability.

- Utilize feedback from real users to address performance concerns and improve the overall experience.

- Make improvements based on findings, then retest to validate the effectiveness of optimizations.

Summing Up: Enhance Your Software Scalability With Performance Testing

After understanding the concept of performance testing, I am sure you are with me!

Overall, this strategy is an essential phase in product development and quality control, helping to ensure that applications function well.

A well-thought-out performance testing strategy is essential for ensuring an application’s success. It assists in pinpointing regions that need improvement, evaluating the application’s scalability, and ensuring that it satisfies user needs.

However, to enable your applications to function in real-world scenarios and provide a flawless user experience, you must properly comprehend and implement the many types of performance testing. Therefore, to properly utilize this tactic, one must seek professional advice.

Looking for a partner in performance testing? Ensure your software’s performance with our dedicated team of developers and testers, who possess extensive knowledge in creating application performance tests based on a unique, robust method.

Check out our recent customers’ reviews on the Clutch platform!

Frequently Asked Questions

Can you give one example of Performance Testing?

One example of performance testing is load testing, in which the application is subjected to a simulated high volume of user traffic to evaluate how well it handles peak loads and identify potential bottlenecks or performance degradation.

Does performance testing require coding?

Performance testing often requires coding, especially when configuring complex test scenarios or scripting automated test cases. However, some tools offer a user-friendly interface for basic testing without extensive coding. Advanced performance testing typically involves coding to customize tests and analyze detailed results.

How do you plan a performance test?

You should plan a performance test by defining objectives, identifying key scenarios, setting performance metrics, designing test cases, selecting tools, creating a test environment, executing tests, and analyzing results for optimization. If you need help, connect with a proficient testing team.