Python Multithreading vs Multiprocessing – The Key to High Performance in Modern Enterprises

You have scaled your Python applications, migrated them to the cloud, and automated your workflows, yet performance still lags. The culprit? Often, it is not your infrastructure or technology stack, but the concurrency model that governs how your systems execute tasks.

Did you know that Python consistently ranks among the most widely used programming languages in the Stack Overflow Developer Survey? From machine learning to web scraping to automation, Python drives some of the most resource-intensive enterprise workloads today.

But as systems grow in scale and complexity, one important question arises: should an enterprise use Python multithreading or multiprocessing?

It is a challenge every CTO, architect, and backend developer faces when optimizing for speed and cost. While both models promise faster execution, they behave very differently, and the wrong choice can quietly drain computing power, inflate cloud costs, or disrupt critical workloads.

Choosing between Python multithreading and multiprocessing is a strategic decision. Let’s break down how they work, where each one excels, and how to apply them effectively to drive enterprise performance, scalability, and ROI.

How the GIL Impacts Python Multithreading vs Multiprocessing Performance

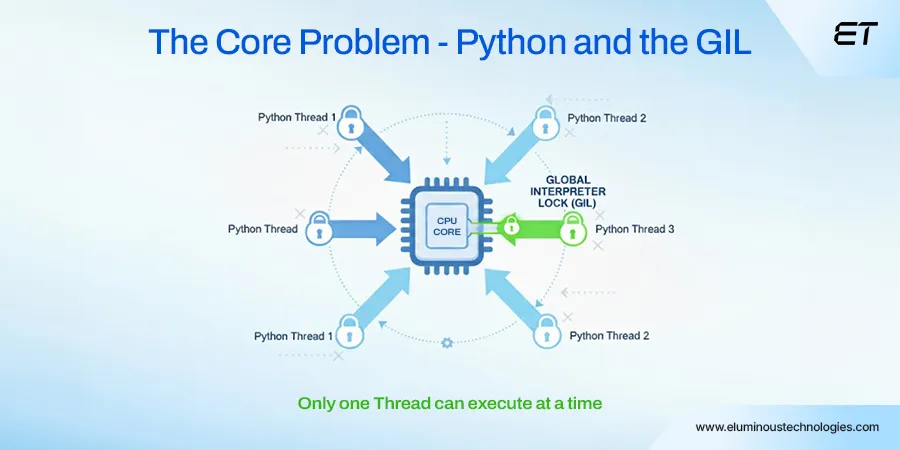

Before we compare threads and processes, we need to discuss a concept that defines Python’s behavior: the Global Interpreter Lock (GIL).

The GIL is a mutex (mutual exclusion lock) that allows only one thread to execute Python bytecode at a time. Even if your system has eight CPU cores, Python can only execute one thread’s instructions at once in the standard CPython implementation.

This means that true parallel execution of Python code using threads is not possible for CPU-bound tasks (tasks that use the processor heavily, like image processing, encryption, or mathematical computations).

However, threads can still provide massive performance improvements in I/O-bound tasks, where the CPU often waits for external resources (like files, APIs, or databases).

- Multithreading = great for I/O-bound work

- Multiprocessing = ideal for CPU-bound work

Let’s now explore how each model works and when to use it.

Python Multithreading – One Brain, Many Hands

Imagine you are in a restaurant kitchen. One chef (the CPU) is cooking, but several assistants (threads) are chopping vegetables, washing dishes, and preparing ingredients.

While the chef cooks, assistants can handle the other tasks.

This is multithreading: multiple threads run inside a single process and share the same memory space and resources.

In Python, the threading module lets you spawn multiple threads that run concurrently. Although the GIL restricts true parallel CPU execution, threads can still overlap I/O operations effectively.

Example: Downloading Multiple Web Pages

    import threading
    import time

    import requests

    urls = [
        "https://example.com",
        "https://httpbin.org/delay/2",
        "https://python.org",
    ]

    def fetch(url):
        print(f"Starting {url}")
        requests.get(url, timeout=10)  # timeout so a stalled server cannot hang the thread
        print(f"Finished {url}")

    start = time.time()

    # Start one thread per URL so the network waits overlap.
    threads = []
    for url in urls:
        t = threading.Thread(target=fetch, args=(url,))
        t.start()
        threads.append(t)

    # Wait for every download to finish before reporting the total.
    for t in threads:
        t.join()

    print(f"Total time: {time.time() - start:.2f} seconds")

Even though each request might take a few seconds, threads overlap the waiting time, allowing downloads to complete significantly faster than they would in sequential execution.


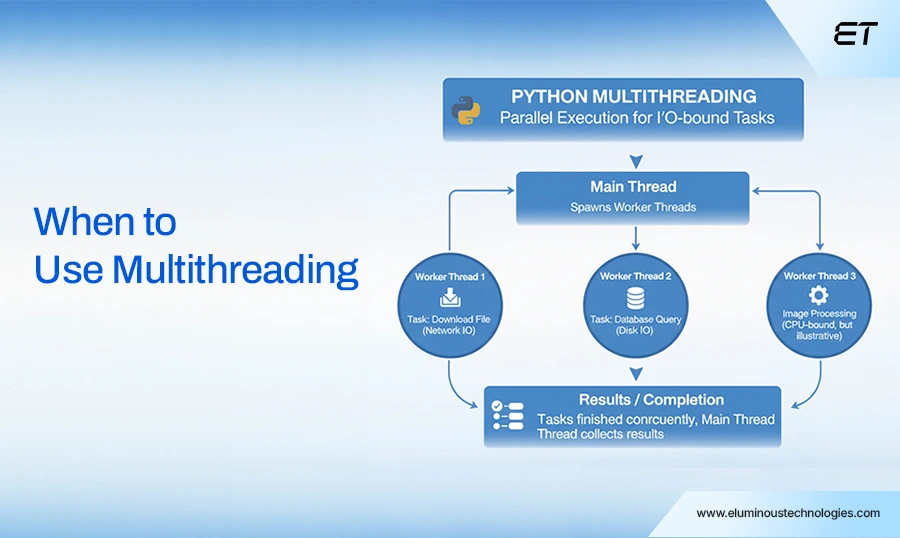

When to Use Multithreading?

Choosing between Python multithreading and multiprocessing depends on your system’s concurrency model and workload type. For enterprises handling large-scale integrations, real-time transactions, or multi-user applications, understanding where threads deliver ROI is essential.

Best for I/O-Bound Workloads

Multithreading shines when your application spends more time waiting on I/O (network calls, disk reads, or responses from external systems) than performing computations.

In an I/O-bound scenario, the Global Interpreter Lock (GIL) is not a bottleneck: CPython releases the GIL while a thread blocks on an external operation, so another thread can continue executing. This allows multiple threads to make progress concurrently, resulting in significantly improved throughput.
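The effect is easy to reproduce without a network. The sketch below is illustrative only: `fake_io_call` is a hypothetical stand-in that uses `time.sleep` to imitate a blocking call, and the task count and delay are arbitrary assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_io_call(task_id, delay=0.2):
    """Stand-in for a network or disk call; sleeping releases the GIL."""
    time.sleep(delay)
    return task_id

def run_sequential(n):
    # Each call waits for the previous one to finish.
    return [fake_io_call(i) for i in range(n)]

def run_threaded(n):
    # One thread per task, so all the waits overlap.
    with ThreadPoolExecutor(max_workers=n) as pool:
        return list(pool.map(fake_io_call, range(n)))

def timed(fn, *args):
    """Return (result, elapsed seconds) for a single call."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start

if __name__ == "__main__":
    _, sequential = timed(run_sequential, 5)
    _, threaded = timed(run_threaded, 5)
    print(f"sequential: {sequential:.2f}s, threaded: {threaded:.2f}s")
```

With five tasks, the sequential version should take roughly five times the delay, while the threaded version should take roughly one delay plus a small scheduling cost.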

- Web Scraping & Data Extraction

Multithreading can make web crawlers and API scrapers several times faster than sequential execution, because the time spent waiting on each response overlaps.

As an illustrative figure, a data aggregator fetching 10,000 URLs using Python’s threading or concurrent.futures.ThreadPoolExecutor might complete in under 3 minutes, versus over 15 minutes sequentially. The actual gain depends on network latency and on the rate limits of the target servers.
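A bounded thread pool for this kind of crawl might look like the following. This is a minimal sketch rather than a production crawler: `fetch_length`, `fetch_all`, and the default of 20 workers are assumptions made for illustration, and the fetch function is injectable so it can be swapped for a `requests`-based one.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from urllib.request import urlopen

def fetch_length(url, timeout=10):
    """Download one page and return its size in bytes."""
    with urlopen(url, timeout=timeout) as response:
        return len(response.read())

def fetch_all(urls, fetch=fetch_length, max_workers=20):
    """Run `fetch` over every URL in a bounded thread pool.

    Returns (results, errors): two dicts keyed by URL.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one bad URL should not stop the crawl
                errors[url] = exc
    return results, errors

if __name__ == "__main__":
    ok, failed = fetch_all(["https://example.com", "https://python.org"])
    print(ok, failed)
```

Capping `max_workers` keeps memory use and outbound connection counts predictable, and collecting errors per URL lets the rest of the batch finish.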

- File I/O (Reading/Writing Large Volumes of Data)

Enterprise ETL pipelines often read, transform, and store terabytes of data. Using multithreading for parallel read/write operations can cut ingestion times by 30–45%, depending on storage type (SSD, NAS, etc.).

- API Request Handling and Microservice Communication

Modern architectures depend heavily on REST and GraphQL APIs. Multithreading enables the simultaneous processing of concurrent outbound API calls or incoming requests.

For example, fintech transaction gateways using threaded API calls can process 1.8x more concurrent requests per second under the same compute budget.

- Real-Time Socket Connections & Message Queues

In IoT ecosystems or chat applications, thousands of persistent socket connections must remain active.

Threads can handle message exchange on these connections without blocking one another. For very large connection counts, event-driven frameworks such as Twisted or asyncio, sometimes combined with worker threads, can keep tens of thousands of connections open with moderate CPU utilization.

- Real-Time User Interface Updates

Enterprise monitoring dashboards (for DevOps or security) often rely on threads to ensure responsive UIs.

The main thread handles rendering, while background threads continuously fetch logs, metrics, or alerts, ensuring smooth interactivity even under heavy load.
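The usual way to wire this up is a thread-safe queue between the background worker and the main thread. The sketch below assumes a hypothetical metric payload and uses `time.sleep` in place of a real UI event loop; `poll_metrics`, `drain`, and `run_demo` are illustrative names.

```python
import queue
import threading
import time

def poll_metrics(out_queue, stop_event, interval=0.05):
    """Background worker: push a new reading until asked to stop."""
    reading = 0
    while not stop_event.is_set():
        reading += 1
        out_queue.put({"reading": reading})  # hypothetical metric payload
        stop_event.wait(interval)  # sleeps, but wakes early on stop

def drain(out_queue):
    """Called from the main (UI) thread: collect pending updates without blocking."""
    updates = []
    while True:
        try:
            updates.append(out_queue.get_nowait())
        except queue.Empty:
            return updates

def run_demo(duration=0.3):
    updates_queue = queue.Queue()
    stop = threading.Event()
    worker = threading.Thread(
        target=poll_metrics, args=(updates_queue, stop), daemon=True
    )
    worker.start()
    time.sleep(duration)  # stands in for the UI loop doing its own work
    stop.set()
    worker.join()
    return drain(updates_queue)

if __name__ == "__main__":
    print(run_demo())
```

The main thread never waits on the worker: it only takes whatever is already in the queue, which is what keeps rendering responsive.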

Avoid Multithreading for CPU-Bound Tasks

While threads are excellent at managing multiple concurrent I/O operations, they do not offer true parallelism for CPU-heavy tasks in Python due to the Global Interpreter Lock (GIL).

When threads perform intensive computation, such as matrix multiplication, encryption, or data transformations, the GIL forces only one thread to execute at a time, leading to negligible or even degraded performance.
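A small experiment makes this visible. The sketch below uses a deliberately naive prime counter as the CPU-heavy task; the function names and the workload size are assumptions chosen so the script finishes quickly.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def count_primes(limit):
    """Deliberately CPU-heavy pure-Python work: trial division below `limit`."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

def primes_sequential(limits):
    return [count_primes(limit) for limit in limits]

def primes_threaded(limits):
    # The threads take turns holding the GIL, so the work is not parallel.
    with ThreadPoolExecutor(max_workers=len(limits)) as pool:
        return list(pool.map(count_primes, limits))

if __name__ == "__main__":
    limits = [60_000] * 4
    for label, fn in (("sequential", primes_sequential), ("threaded", primes_threaded)):
        start = time.perf_counter()
        fn(limits)
        print(f"{label}: {time.perf_counter() - start:.2f}s")
```

On a standard CPython build, the two timings should come out close to each other, and the threaded run may be slightly slower because of lock contention and context switching.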

Enterprise Takeaway

In large-scale enterprise systems, multithreading delivers tangible benefits when:

- The workload involves network latency or I/O delays (API-heavy systems, data acquisition layers).

- You need scalable concurrency with minimal memory overhead (e.g., 10,000+ lightweight tasks fed through a bounded thread pool).

- Your infrastructure relies on real-time data feeds where responsiveness matters more than raw compute throughput.

However, for numerically intensive work such as analytics computation or ML workloads, multithreading can become a silent performance trap. The right architectural decision is often to combine models:

- Threads for network concurrency

- Processes for computational parallelism

- Async I/O for scalable event-driven workloads
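For the async I/O option in the list above, a minimal sketch looks like this. `fake_request` is a hypothetical stand-in that awaits `asyncio.sleep` instead of a real network call, and the request count is arbitrary.

```python
import asyncio
import time

async def fake_request(request_id, delay=0.1):
    """Stand-in for an awaitable network call."""
    await asyncio.sleep(delay)
    return request_id

async def gather_all(n):
    # All n coroutines wait concurrently on a single thread.
    return await asyncio.gather(*(fake_request(i) for i in range(n)))

def run(n):
    """Run n fake requests and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(gather_all(n))
    return results, time.perf_counter() - start

if __name__ == "__main__":
    results, elapsed = run(100)
    print(f"{len(results)} requests in {elapsed:.2f}s")
```

Because no threads are created, the per-task cost is small, which is why this model suits very large numbers of mostly idle connections.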

In short:

- Multithreading is about doing more things while waiting.

- Multiprocessing is about doing more things at once.

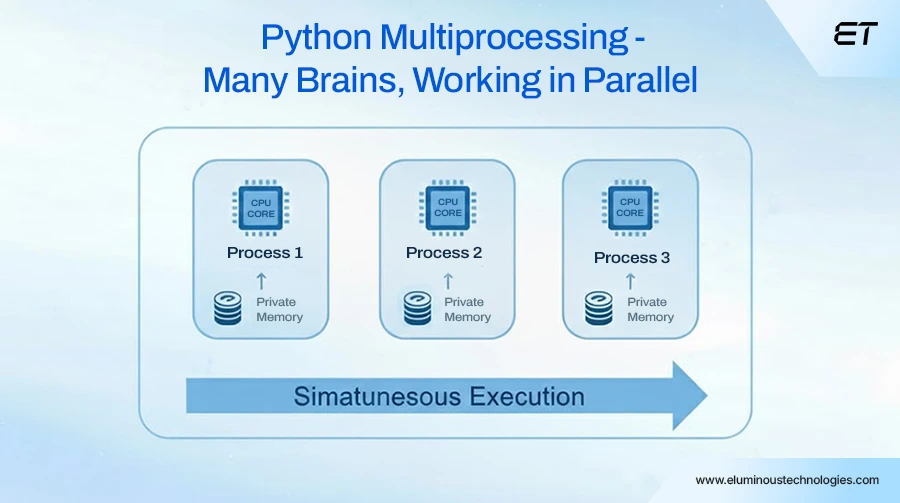

Python Multiprocessing – Many Brains, Working in Parallel

In enterprise environments, performance bottlenecks are not just inconvenient; they are financial liabilities. Whether it is an analytics engine crunching billions of data points, an AI model processing real-time inputs, or a financial risk simulation running on tight SLAs, speed and scalability directly impact business outcomes.

This is where Python’s multiprocessing steps in, allowing organizations to unlock the full power of their hardware, using every CPU core to execute tasks truly in parallel.

In Python, the multiprocessing module spawns multiple processes, each with its own Python interpreter and memory space. Since each process has its own GIL, they can execute code in true parallelism across multiple CPU cores.

Example - Squaring Large Numbers

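    import time
    from multiprocessing import Pool

    def square(n):
        return n * n

    # The guard is required on platforms that start workers with "spawn"
    # (Windows, macOS), where each worker re-imports this module.
    if __name__ == "__main__":
        numbers = range(10_000_000)

        start = time.time()
        with Pool() as pool:  # defaults to one worker per available CPU
            results = pool.map(square, numbers)

        print(f"Execution time: {time.time() - start:.2f} seconds")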

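On a quad-core machine, a process pool can run CPU-heavy work up to about 3.5x faster than sequential code. Note that square is deliberately trivial: for work this small, the cost of pickling arguments and results between processes can cancel out the gain, so expect the speedup to show only when each task does substantial computation.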


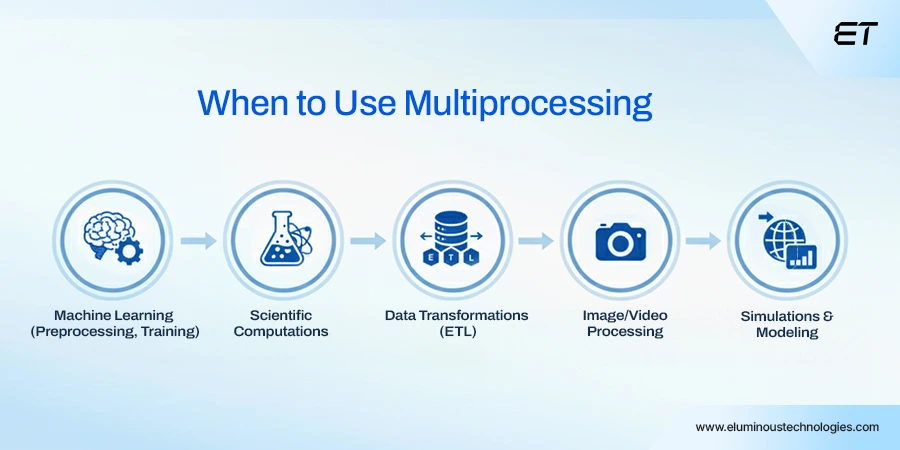

When to Use Multiprocessing

For enterprise systems handling massive computational workloads, from large-scale analytics to machine learning pipelines, performance is often constrained not by I/O but by raw CPU limitations.

When your application is bottlenecked by computation, Python multiprocessing becomes the strategic solution.

Unlike threads that share memory but fight for execution under the Global Interpreter Lock (GIL), multiprocessing creates independent processes that run on separate CPU cores, each with its own interpreter and memory space.

This allows your system to achieve true parallelism, turning multi-core processors into high-efficiency computation engines.
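The separate memory space is worth seeing once, because it explains why processes cannot simply share variables. In the sketch below, `counter` and `bump_and_report` are illustrative names; the exact worker reports depend on how the pool distributes the tasks.

```python
import multiprocessing as mp
import os

counter = 0  # module-level state: every process gets its own copy

def bump_and_report(_):
    """Runs in a worker: increment that worker's own copy of `counter`."""
    global counter
    counter += 1
    return os.getpid(), counter

if __name__ == "__main__":
    with mp.Pool(processes=2) as pool:
        reports = pool.map(bump_and_report, range(4))
    print("parent pid:", os.getpid())
    print("worker reports (pid, counter):", reports)
    # The workers changed their own copies, so the parent's value is untouched.
    print("parent counter:", counter)
```

The worker process IDs differ from the parent's, and the parent's `counter` stays at 0 no matter how many times the workers increment theirs.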

Best for CPU-Bound Workloads

Multiprocessing is ideal for CPU-intensive operations, where your tasks perform heavy calculations, data processing, or transformations, rather than waiting for network or disk I/O.

Let’s explore enterprise-specific contexts where multiprocessing delivers measurable ROI and performance impact:

Image and Video Processing Pipelines

In industries like digital media, retail analytics, and surveillance systems, massive image or video datasets are processed daily for tagging, filtering, or frame analysis.

A 2024 benchmark by AWS MediaConvert Labs showed that using Python’s multiprocessing.Pool for image transformation workloads on a 16-core machine delivered a 6.8× speedup compared to a single-threaded approach, while maintaining consistent memory efficiency through process pooling.

Machine Learning Preprocessing and Inference

Before machine learning models can train or infer, large datasets must undergo preprocessing, encoding, normalization, and feature extraction.

A 2025 Paperspace benchmark revealed that parallelizing ML preprocessing across 8 CPU cores achieved a 4.3× improvement in data preparation speed, cutting preprocessing from 90 minutes to under 25.

Numerical and Scientific Computations

Industries such as finance, logistics, and scientific research often rely on complex mathematical operations, including regression analysis, matrix multiplication, and optimization algorithms.

Multiprocessing distributes these computations across all available CPU cores, drastically reducing execution times.

Simulations and Modeling

Enterprise systems that rely on predictive modeling, such as Monte Carlo simulations, risk analysis, or demand forecasting, greatly benefit from multiprocessing. Since each simulation run operates independently, the runs can be distributed across multiple cores for near-linear performance scaling.
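As a toy instance of independent simulation runs, the sketch below estimates pi by random sampling. The run count, sample size, and per-run seeds are assumptions chosen for illustration; a real risk model would replace `count_hits` with its own simulation step.

```python
import random
from multiprocessing import Pool

def count_hits(args):
    """One independent run: sample points and count those inside the unit circle."""
    seed, samples = args
    rng = random.Random(seed)  # private generator, so runs share no state
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits

def estimate_pi(hit_counts, samples_per_run):
    """Combine the per-run hit counts into a single estimate."""
    total = samples_per_run * len(hit_counts)
    return 4.0 * sum(hit_counts) / total

if __name__ == "__main__":
    runs, samples = 8, 200_000
    jobs = [(seed, samples) for seed in range(runs)]
    with Pool() as pool:
        hit_counts = pool.map(count_hits, jobs)
    print(f"pi is approximately {estimate_pi(hit_counts, samples):.4f}")
```

Giving each run its own seeded generator keeps the runs independent and makes the result reproducible regardless of which worker picks up which job.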

Data Transformation and Aggregation Pipelines

Large-scale data pipelines, especially in analytics and BI systems, require repetitive transformation and aggregation operations. Multiprocessing enables the parallel execution of data cleansing and transformation steps, allowing for faster data refresh cycles.

Platforms such as Snowflake, Databricks, and AWS Glue parallelize transformation steps in a similar way within their distributed frameworks.

When to Avoid Multiprocessing

While multiprocessing is ideal for computation-heavy tasks, it’s not well-suited for I/O-bound workloads, such as web scraping, API calls, or file reading, where the system spends more time waiting than computing.

The reason lies in overhead: each process runs its own interpreter and memory space, which can consume significant system resources when tasks are lightweight.

Enterprise Takeaway

Use multiprocessing when your organization’s workloads are computation-heavy and CPU-constrained, where optimizing for parallel execution yields measurable business gains in processing time, cost, and scalability.

In a modern enterprise data stack, the most efficient architectures often combine both models strategically:

- Threads handle the network-bound I/O layer.

- Processes handle the CPU-heavy computation layer.

In essence:

Use multiprocessing when performance means computation.

Use multithreading when performance means responsiveness.

Threading vs Processing: Key Differences (Python Multithreading vs Multiprocessing)

| Feature | Multithreading | Multiprocessing |
| --- | --- | --- |
| Execution | Concurrent (one at a time due to GIL) | True parallel (multiple CPUs) |
| Best for | I/O-bound tasks | CPU-bound tasks |
| Memory space | Shared among threads | Separate per process |
| Communication | Easy, via shared variables | Harder, requires IPC or Queues |
| Overhead | Low | High |
| Startup time | Fast | Slower |
| Failure impact | A crash can affect all threads | A crash only kills one process |
| GIL restriction | Yes | No |
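The Communication row is the one that most often surprises teams moving from threads to processes. A minimal sketch of queue-based message passing follows; the `worker` function and the `None` sentinel convention are illustrative choices, not the only way to structure it.

```python
import multiprocessing as mp

SENTINEL = None  # tells the worker that no more input is coming

def worker(inbox, outbox):
    """Read numbers from inbox until the sentinel arrives; put their squares on outbox."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            break
        outbox.put(item * item)

if __name__ == "__main__":
    inbox, outbox = mp.Queue(), mp.Queue()
    proc = mp.Process(target=worker, args=(inbox, outbox))
    proc.start()
    for n in (2, 3, 4):
        inbox.put(n)
    inbox.put(SENTINEL)
    # Read the results before joining so the worker is never stuck on a full pipe.
    results = [outbox.get() for _ in range(3)]
    proc.join()
    print(results)
```

Every item that crosses a `multiprocessing.Queue` is pickled and copied, which is the overhead the table refers to. With threads, the same worker could read and write shared objects directly.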

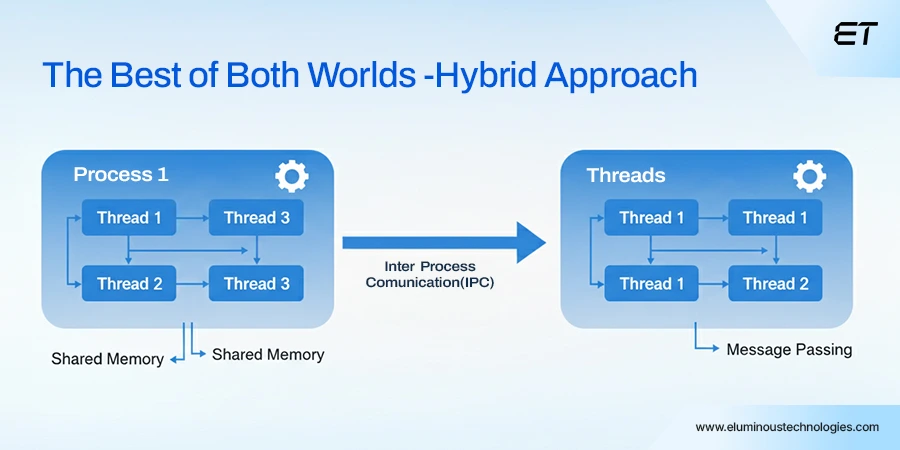

The Best of Both Worlds is the Hybrid Approach

Real-world systems often require both multithreading and multiprocessing. Workloads are rarely purely I/O-bound or purely CPU-bound. For example, a data pipeline might:

- Download data from APIs (I/O-bound)

- Process and analyze it (CPU-bound)

- Store results in a database (I/O-bound again)

A hybrid approach, combining threading and multiprocessing, can deliver the best performance.

For instance:

- Use threads for downloading files concurrently.

- Use a process pool for CPU-heavy analysis.

- Use threads again for database writes.

Here’s a simplified hybrid structure:

    import time
    from multiprocessing.pool import Pool, ThreadPool

    import requests

    urls = ["https://example.com/data1", "https://example.com/data2"]

    def download(url):
        return requests.get(url, timeout=10).text

    def analyze(data):
        return sum(len(word) for word in data.split())

    if __name__ == "__main__":  # required where workers start via "spawn"
        start = time.time()

        # I/O-bound stage: threads overlap the network waits.
        with ThreadPool(5) as tpool:
            data_list = tpool.map(download, urls)

        # CPU-bound stage: processes run the analysis on separate cores.
        with Pool(4) as ppool:
            results = ppool.map(analyze, data_list)

        print(f"Results: {results}")
        print(f"Total time: {time.time() - start:.2f}s")

This pattern is common in ETL pipelines, data science, and automation frameworks.

Common Pitfalls and Optimization Tips

Even experienced developers make subtle mistakes when implementing concurrency in Python. Here’s how to avoid them:

Avoid using threading for CPU-intensive code: It may appear parallel but runs serially due to the GIL.

Watch out for race conditions: Threads share memory, so always use locks (threading.Lock()) or queues for safe access.
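A minimal sketch of the lock pattern, using a hypothetical `SafeCounter` class and an arbitrary number of threads and increments:

```python
import threading

class SafeCounter:
    """Counter whose read-modify-write step is protected by a lock."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:  # only one thread at a time may update the value
            self._value += 1

    @property
    def value(self):
        with self._lock:
            return self._value

def hammer(counter, threads=8, increments=10_000):
    """Increment the counter from several threads at once and return the total."""
    def work():
        for _ in range(increments):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return counter.value

if __name__ == "__main__":
    print(hammer(SafeCounter()))
```

With the lock in place, the total always equals threads times increments. Without it, updates from different threads can interleave and some increments may be lost.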

Avoid excessive process creation: spawning hundreds of processes drastically increases memory consumption.

Use concurrent.futures for simplicity: It provides ThreadPoolExecutor and ProcessPoolExecutor with cleaner syntax. Example:

    from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor
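Because both executors share one interface, switching models can be a one-argument change. The sketch below assumes a hypothetical `word_count` task and a helper named `run_with`.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor

def word_count(text):
    return len(text.split())

def run_with(executor_cls, items, max_workers=4):
    """Map word_count over items using whichever executor class is passed in."""
    with executor_cls(max_workers=max_workers) as executor:
        return list(executor.map(word_count, items))

if __name__ == "__main__":
    docs = ["one two three", "four five", "six"]
    print(run_with(ThreadPoolExecutor, docs))   # threads: suited to I/O-bound work
    print(run_with(ProcessPoolExecutor, docs))  # processes: suited to CPU-bound work
```

Keeping the task function at module level matters for the process version, since functions are sent to worker processes by reference.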

Benchmark before deploying: Not all tasks benefit from concurrency. Sometimes sequential execution is just as fast, especially for small datasets.
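A small timing helper is often enough for a first comparison. The sketch below is one possible shape, with hypothetical helper names `best_of` and `compare`; for finer measurements, the standard library's `timeit` module is an alternative.

```python
import time

def best_of(fn, *args, repeats=3):
    """Return the fastest wall-clock time in seconds over several runs of fn(*args)."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return min(timings)

def compare(candidates, *args, repeats=3):
    """candidates maps a label to a callable; returns (label, seconds) pairs, fastest first."""
    results = {
        label: best_of(fn, *args, repeats=repeats)
        for label, fn in candidates.items()
    }
    return sorted(results.items(), key=lambda pair: pair[1])

if __name__ == "__main__":
    data = list(range(100_000))
    ranking = compare({"builtin sum": sum, "sum of squares": lambda xs: sum(x * x for x in xs)}, data)
    for label, seconds in ranking:
        print(f"{label}: {seconds:.4f}s")
```

Taking the best of several runs reduces noise from other processes on the machine. Run the comparison with realistic data sizes, since pool startup cost can dominate on small inputs.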

Which One Should You Choose: Python Multithreading or Multiprocessing?

In enterprise ecosystems, the real question is not “Python multithreading or multiprocessing?” It’s “How do we architect for performance and scalability?” The best solution depends on the nature of your workloads, your infrastructure, and your business goals.

At the enterprise level, efficiency is not just about speeding up execution; it’s about ensuring consistency, fault tolerance, and intelligent resource allocation.

Whether you are optimizing data pipelines, deploying AI workloads, or managing concurrent transactions, the goal is to create systems that perform predictably under pressure.

That’s where eLumnious Technologies comes in. We engineer performance-driven systems built to scale across cores, clusters, and clouds. Our team ensures your applications run faster, smarter, and more reliably with architectures designed for the future of enterprise computing.

Where intelligent engineering meets enterprise performance.