On-Device AI: The Smart Shift Happening Inside Your Phone

You’re on a long flight. No Wi-Fi. No signal bars.

Yet your phone identifies a suspicious mole on your arm. It compares it to thousands of medical images and recommends a follow-up.

No data ever leaves your device. No cloud is involved.

That’s On-Device AI at work. Simply put, it’s how intelligence lives and breathes inside your phone, smartwatch, or tablet.

Get this: mobile apps are learning to think locally. They can process data faster, more privately, and with high precision. For a business, this ‘edge intelligence’ can be a great asset going forward.

So, brace yourself. This blog aims to help you understand the concept of on-device AI, its key elements, its importance, its use cases, and more.

What Is On-Device AI

In simple terms, On-Device AI means your phone or tablet can think for itself without sending all the data to the cloud.

Instead of your app asking a remote server for answers, it thinks right on the device’s own chip. That chip has enough processing power to run machine learning (ML) models without relying on massive cloud servers.

So, when your phone detects objects in a photo, translates speech in real time, or adjusts camera settings automatically, that’s On-Device AI doing its job.

This shift changes the rules of mobile computing. Now, mobile apps can:

- Work even when there’s no network.

- Protect user privacy (since data never leaves the phone).

- React instantly without lag or delay.

- Cut costs by relying less on cloud infrastructure.

In short, on-device AI makes devices smarter and more self-reliant.

What Makes On-Device AI Possible

The concept of On-Device AI isn’t new. But until a few years ago, running AI models directly on phones sounded unrealistic. The chips were too weak, the models too heavy, and the battery life too precious.

But a mix of hardware innovation and smarter AI engineering has changed the game. Here’s a look at the core elements behind this tech.

1. Smarter Chips Built for AI

Modern smartphones now come with Neural Processing Units (NPUs).

These are small but powerful processing blocks, usually built into the phone’s main chip, that handle AI tasks efficiently. Samsung, Apple, and Qualcomm already design processors specifically tuned for on-device AI workloads, so they can handle image recognition, translation, voice commands, and much more.

2. Smaller, Faster Models

Through model compression, quantization, and pruning, developers can now squeeze large neural networks into mobile-friendly packages. These models use less memory and power without losing much accuracy.
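To make quantization concrete, here is a minimal sketch in plain Python. It assumes the simplest variant (symmetric 8-bit quantization with a single scale factor for all weights); real toolchains use more refined schemes, and the function names are illustrative only.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to int8 values using one symmetric scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Avoid division by zero when every weight is 0.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


# Illustrative weights, not taken from any real model.
weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max rounding error: {max_error:.4f}")
```

Each weight now fits in one byte instead of the four bytes of a 32-bit float, and the rounding error per weight stays within half of the scale factor. That is the "less memory without losing much accuracy" trade in miniature.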

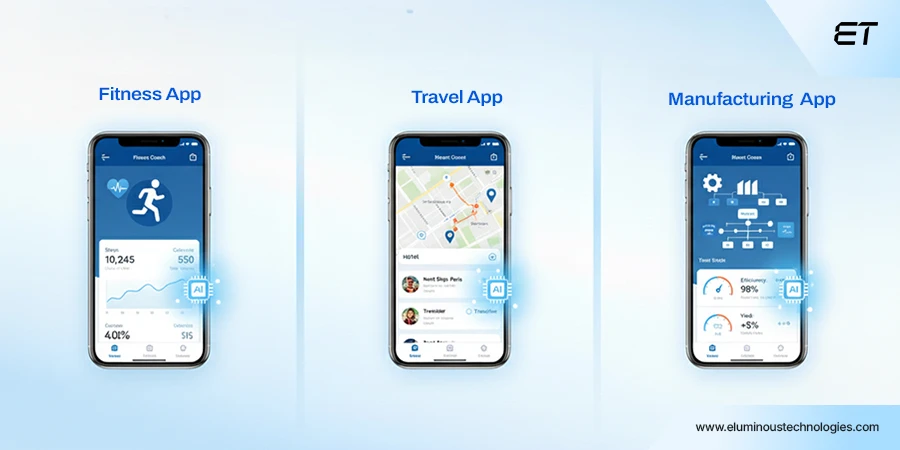

3. AI Frameworks Made for the Edge

Frameworks like TensorFlow Lite, Core ML, and PyTorch Mobile make deploying on-device AI easier. They bridge the gap between research and real apps, helping developers bring intelligence straight to mobile devices.

4. The Gemini Robotics AI Model On-Device

A major leap came when Google showcased a Gemini Robotics AI model running entirely on-device, on the robot’s own hardware. It showed that even complex, multimodal intelligence can fit into compact hardware.

Basically, if models this advanced can run locally, the intelligence your next-gen app needs very likely can too.

Together, these advances are making it realistic for businesses to move parts of their intelligence from data centers to the devices people already use.

Why C-Level Leaders Should Pay Attention to On-Device AI

As enterprises chase faster decisions, tighter data security, and efficient operations, On-Device AI sits at the intersection.

This tech changes how you build products, how customers interact, and how companies control costs. So, if you’re leading a digital-first business, here’s why this shift deserves your attention.

Faster Decisions, Real-Time Advantage

Executives love data. But they hate waiting for it.

Today, even a few seconds of lag between input and insight can decide whether a customer stays or bails. This delay could also impact whether a technician fixes a problem on-site or has to return later. That lag (in most cases) comes from one place: the cloud.

On-device AI changes that. By moving intelligence to the device, your systems can process information almost instantly, without a round trip to remote servers.

Examples:

- A retail associate scanning a product and instantly seeing restock suggestions.

- A logistics driver getting route optimizations offline.

- A healthcare device analyzing patient vitals in real time.

This is ‘speed’ as a strategic advantage.

All in all, the companies that embrace On-Device AI will operate in the present, not in hindsight.

Stronger Privacy, Greater Trust

You know this: one privacy breach can cost more than any marketing campaign can fix. (Remember the Equifax data breach?)

Luckily, On-Device AI changes the scene. Here’s how:

- When AI processing occurs locally, sensitive data (such as faces, voices, health metrics, or location details) remains on the device. That instantly reduces your attack surface and data liability.

- For industries that handle regulated or sensitive data, like finance, healthcare, and retail, that makes on-device AI a strong fit.

But there’s a deeper play here: transparency.

When customers know your product processes their data privately, they’re more willing to engage, share, and stay loyal. In short, companies that bake privacy into their technology architecture today can edge ahead.

Lower Costs, Higher Efficiency

As your apps scale, so do your inference requests. Every interaction, such as photo analysis, voice processing, or a recommendation, is sent to the cloud.

The outcome? High computing costs and a rise in latency. On top of that, a large user base multiplies those expenses.

On-Device AI flips that equation. By moving intelligence closer to the user, you reduce your dependence on central cloud processing.

So, you can experience:

- Fewer server calls.

- Less data transfer.

- Lower bandwidth consumption.

- Smaller recurring cloud expenses.

When devices handle part of the workload, your infrastructure doesn’t stumble under user growth. So, your app can expand to new regions or user bases without a matching jump in your cloud budget.

In other words, on-device AI can lead to smarter economics. It lets you reinvest savings from infrastructure into innovation.
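A quick back-of-the-envelope model shows how this plays out. Every number below is hypothetical (user count, request volume, and price per 1,000 requests are placeholders, not vendor pricing), and the function name is made up for illustration.

```python
def monthly_cloud_cost(
    users: int,
    requests_per_user: int,
    cost_per_1k_requests: float,
    on_device_share: float = 0.0,
) -> float:
    """Estimate monthly cloud inference spend.

    on_device_share is the fraction of requests (0.0 to 1.0) served locally.
    """
    if not 0.0 <= on_device_share <= 1.0:
        raise ValueError("on_device_share must be between 0.0 and 1.0")
    total_requests = users * requests_per_user
    cloud_requests = total_requests * (1.0 - on_device_share)
    return cloud_requests / 1000 * cost_per_1k_requests


# Hypothetical inputs: 1M users, 200 requests each per month,
# $0.50 per 1,000 cloud inference requests.
all_cloud = monthly_cloud_cost(1_000_000, 200, 0.50)
hybrid = monthly_cloud_cost(1_000_000, 200, 0.50, on_device_share=0.8)
print(f"all cloud: ${all_cloud:,.0f}  hybrid: ${hybrid:,.0f}")
```

With these made-up inputs, serving 80% of requests on the device cuts the monthly bill from about $100,000 to about $20,000. Swap in your own figures to see where the break-even sits for your product.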

New Experiences, New Business Models

Every major tech shift starts the same way:

- First, it saves time.

- Then, it creates money.

On-Device AI is now at that second stage. It speeds up apps, protects data, and has the potential to unlock new revenue streams.

Picture this:

- A fitness app that learns your habits offline and gives personalized feedback.

- A travel app that translates street signs, menus, and conversations locally.

- A factory inspection app that spots defects in machinery in real-time using just a smartphone camera.

These business models are built on one core advantage: intelligence that lives where your users are.

This benefit means product differentiation without the dependency tax. Basically, you’re creating products that work everywhere, scale faster, and feel more human to the end user. So, while competitors are still investing in cloud capacity, you can build experiences that thrive beyond it.

Strategic Resilience, Future Readiness

Relying too much on central systems is risky business. Why? Here are some factors:

- Cloud outages

- Network issues

- Escalating computing costs

On-Device AI distributes intelligence across devices. So, your business becomes less vulnerable to single-point failures. Even if your servers stumble or connectivity drops, users still get the AI-powered features that run locally, because the brainpower sits right there on the device.

This tech can:

- Protect your operations from unpredictable disruptions.

- Reduce your dependency on third-party cloud vendors.

- Simplify compliance across regions with different data laws, since less personal data leaves the device.

And as more enterprises explore Gemini Robotics AI models and other on-device architectures, the benefits can multiply.

How to Build an On-Device AI Product

Building an On-Device AI product means redesigning how your intelligence pipeline works. Here’s a step-by-step breakdown of the process.

1. Define What Needs to Run On-Device

Not everything belongs on the edge. You first need to map the AI workload.

Separate what must happen locally from what can stay in the cloud.

For example:

- A mobile health app might run vitals analysis on-device but push anonymized patterns to the cloud.

- A factory inspection app can process defect detection locally but update global dashboards periodically.

This hybrid intelligence design makes on-device AI scalable. You get the speed and privacy benefits without overburdening the device.
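One way to make this mapping explicit is a small placement rule that every AI task passes through. The sketch below is an assumption about how such a rule could look; the `Task` fields, the `Target` values, and the task names are hypothetical, and a real product would tune the criteria.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"
    DEFER = "defer"  # queue the work until connectivity returns


@dataclass(frozen=True)
class Task:
    name: str
    latency_sensitive: bool          # the user is waiting for the result
    handles_raw_personal_data: bool  # e.g. vitals, faces, voice
    fits_on_device: bool             # a small enough model exists


def place(task: Task, online: bool) -> Target:
    """Decide where a task runs under a simple hybrid policy."""
    wants_local = (
        task.latency_sensitive
        or task.handles_raw_personal_data
        or not online
    )
    if task.fits_on_device and wants_local:
        return Target.ON_DEVICE
    if online:
        return Target.CLOUD
    return Target.DEFER


vitals = Task("vitals_analysis", True, True, True)
dashboard = Task("dashboard_sync", False, False, False)
print(place(vitals, online=True), place(dashboard, online=False))
```

Here the vitals analysis always stays on the device, while the dashboard sync goes to the cloud when a connection exists and waits in a queue when it doesn’t.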

Pro tip: Start small. Pick one key function that would improve user experience if it were instantaneous or offline.

2. Choose the Right Model Architecture and Frameworks

Once you’ve identified what should run locally, the next step is to choose an AI architecture that can operate outside the cloud.

Modern on-device AI products rely on lightweight yet powerful model families, like:

- Gemini Nano/Gemini Robotics AI models for multimodal, context-rich tasks.

- MobileBERT for NLP, and TinyML-style models for smaller inference tasks on very constrained hardware.

- EfficientNet-Lite for computer vision that needs to be both fast and battery-friendly.

These models cut computation with only a small cost in accuracy.

They can run on consumer hardware thanks to dedicated accelerators such as:

- Apple’s Neural Engine (ANE)

- Qualcomm’s Hexagon DSP

- The TPU in Google’s Tensor chips

- MediaTek’s APU

All in all, your hardware-software alignment is your competitive edge. So, involve your AI architects early and ensure product, hardware, and ML teams are synced from Day 1.

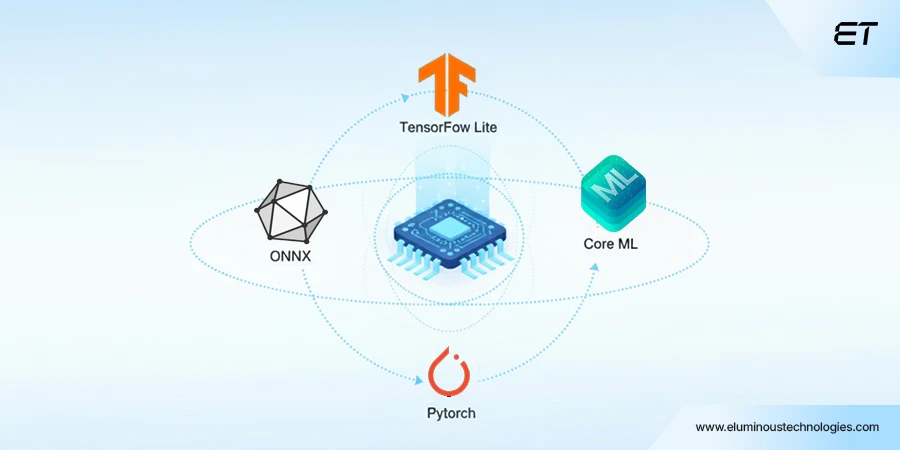

3. Optimize Data Pipelines for Edge Learning

Without the right data strategy, even the smartest on-device model is just a static brain.

On-device AI learns differently from cloud-based AI. It doesn’t rely on a single, centralized database; it learns locally.

Instead of sending raw user data to the cloud, each device trains a lightweight version of the model using its own data. Only the model updates (not the actual data) are sent back, aggregated, and redistributed. This approach is known as federated learning.

But to make this system work, your data pipelines must evolve.

- You’ll need to define what stays local and what’s aggregated.

- You’ll need to encrypt update channels for secure model synchronization.

- And you’ll need clear consent frameworks that make your data ethics transparent.

In short, the smarter your data movement strategy, the longer your on-device AI will stay useful and compliant.
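The train-locally, share-only-updates loop described in this step can be sketched in a few lines. This assumes federated averaging, one common aggregation rule (the article does not prescribe a specific one), with a toy two-weight model and hypothetical gradients and sample counts.

```python
from typing import List, Sequence, Tuple

Weights = List[float]


def local_update(
    global_weights: Weights,
    local_gradient: Weights,
    learning_rate: float = 0.1,
) -> Weights:
    """One on-device training step. Only the result leaves the device."""
    return [w - learning_rate * g for w, g in zip(global_weights, local_gradient)]


def federated_average(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighting each client by its sample count."""
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported")
    size = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(size)]


global_model = [1.0, 2.0]
# Each device computes a gradient from its own private data (values made up).
device_a = local_update(global_model, [1.0, 0.0])   # trained on 30 samples
device_b = local_update(global_model, [0.0, -1.0])  # trained on 10 samples
new_global = federated_average([(device_a, 30), (device_b, 10)])
print(new_global)
```

The server only ever sees `device_a` and `device_b`, which are weights, not user records. In production, those updates would also travel over the encrypted channels mentioned above.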

4. Test and Deploy with Edge Constraints in Mind

When intelligence moves to the edge, it has to deal with real-world constraints, like:

- Limited battery life

- Fluctuating connectivity

- Thermal constraints

- Unpredictable user behavior

That’s why what works perfectly in your cloud lab can crumble in your user’s hand.

The solution? Edge-aware testing and deployment.

Your teams need to simulate real-world conditions before rolling out an update.

This means:

- Running A/B tests across diverse devices (not just the flagship ones).

- Tracking inference latency, energy draw, and thermal load as KPIs.

- Using model quantization and pruning to shrink models without gutting their intelligence.
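Tracking latency as a KPI usually means looking at the slow tail, not the average. Below is a minimal sketch that summarizes latency samples with nearest-rank percentiles and checks them against a budget. The sample values and the 50 ms budget are invented, and energy and thermal metrics would need their own collectors.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile; pct is in the range (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_report(samples_ms: List[float], budget_ms: float) -> Dict[str, object]:
    """Summarize inference latency and flag a blown p95 budget."""
    p50 = percentile(samples_ms, 50)
    p95 = percentile(samples_ms, 95)
    return {"p50_ms": p50, "p95_ms": p95, "within_budget": p95 <= budget_ms}


# Hypothetical measurements from a mid-range test device, in milliseconds.
samples = [18, 20, 22, 25, 19, 21, 24, 90, 23, 20]
report = latency_report(samples, budget_ms=50)
print(report)
```

In this made-up run, the typical inference is fast, but one slow outlier pushes the p95 past the budget. That is exactly the kind of issue that shows up on older or thermally throttled devices and stays hidden in a lab average.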

Think of this as DevOps meets MLOps, adapted for the edge.

Bottom line: You’re maintaining a living, breathing system that must thrive in imperfect environments.

5. Build a Scalable Ecosystem and Maintenance Loop

Unlike cloud models that you can update centrally, every on-device deployment lives in the wild.

You’ve got thousands (or millions) of devices running slightly different hardware, OS versions, and usage patterns. So, if you don’t plan for that diversity, your product will go stale quickly.

That’s why you should treat on-device AI as an ecosystem.

Here’s what you should focus on:

- Over-the-Air (OTA) Model Updates: Regularly push optimized models, security patches, and new capabilities without forcing app reinstallations.

- Edge Analytics Loop: Gather anonymized performance data (latency, accuracy, energy metrics) from user devices to fine-tune future versions.

- Cross-Team Collaboration: Align data scientists, app developers, and product managers from day one.

- Lifecycle Governance: Maintain version control for every deployed model.
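OTA updates and version governance come together in the step where the update service decides which model build a given device should receive. The sketch below is a hypothetical catalog lookup; the version labels, sizes, and device fields are placeholders, not a real update API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelVariant:
    version: str      # hypothetical version label
    size_mb: int
    min_ram_mb: int
    needs_npu: bool


@dataclass(frozen=True)
class Device:
    ram_mb: int
    has_npu: bool
    free_storage_mb: int


def pick_variant(device: Device, catalog: List[ModelVariant]) -> Optional[ModelVariant]:
    """Return the largest variant the device can run, or None to keep the current model."""
    eligible = [
        v for v in catalog
        if v.min_ram_mb <= device.ram_mb
        and v.size_mb <= device.free_storage_mb
        and (device.has_npu or not v.needs_npu)
    ]
    return max(eligible, key=lambda v: v.size_mb, default=None)


catalog = [
    ModelVariant("2.1.0-full", size_mb=400, min_ram_mb=8000, needs_npu=True),
    ModelVariant("2.1.0-int8", size_mb=110, min_ram_mb=4000, needs_npu=False),
    ModelVariant("2.1.0-tiny", size_mb=25, min_ram_mb=2000, needs_npu=False),
]
flagship = Device(ram_mb=12000, has_npu=True, free_storage_mb=5000)
budget = Device(ram_mb=3000, has_npu=False, free_storage_mb=800)
print(pick_variant(flagship, catalog).version, pick_variant(budget, catalog).version)
```

Keeping the catalog under version control gives you one place to audit which build each class of device is running.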

With Gemini Robotics AI models and other adaptive architectures maturing, maintaining edge-based intelligence will soon be as seamless as updating your OS.

Key Use Cases of On-Device AI Across Industries

By bringing computation closer to data generation, you can unlock faster responses, stronger privacy, and more resilient operations.

Here’s a quick snapshot of how different sectors put edge intelligence to work:

| Industry | Example Application | How On-Device AI Adds Value | Key Outcome |
| --- | --- | --- | --- |
| Healthcare | Wearables detecting irregular heartbeats in real time | Processes biometric data locally, reducing latency and privacy risk | Faster diagnosis and better patient safety |
| Automotive | Driver-assist systems (object detection, lane tracking) | Runs AI inference directly on vehicle hardware | Instant response and safer driving decisions |
| Retail | In-store recommendation apps and visual product search | Uses edge intelligence to personalize offers offline | Improved customer engagement and sales conversion |
| Manufacturing & Robotics | Defect detection on assembly lines using smart cameras | Employs Gemini Robotics AI models for multimodal reasoning | Reduced downtime and more efficient quality control |
| Consumer Tech | Smartphones with offline translation and voice assistants | Uses NPUs/TPUs for low-latency, privacy-first inference | Faster experiences and higher user satisfaction |

What’s striking here is the pattern: once intelligence moves closer to the user or device, experiences stop depending on connectivity.

That’s why industries adopting on-device AI first can redefine customer expectations.

For example, Apple’s latest A-series and M-series chips integrate a high-performance Neural Engine that runs AI inference directly on-device. This means you can access everyday features like image processing, transcription, and personal context suggestions instantly, without sending data to the cloud.

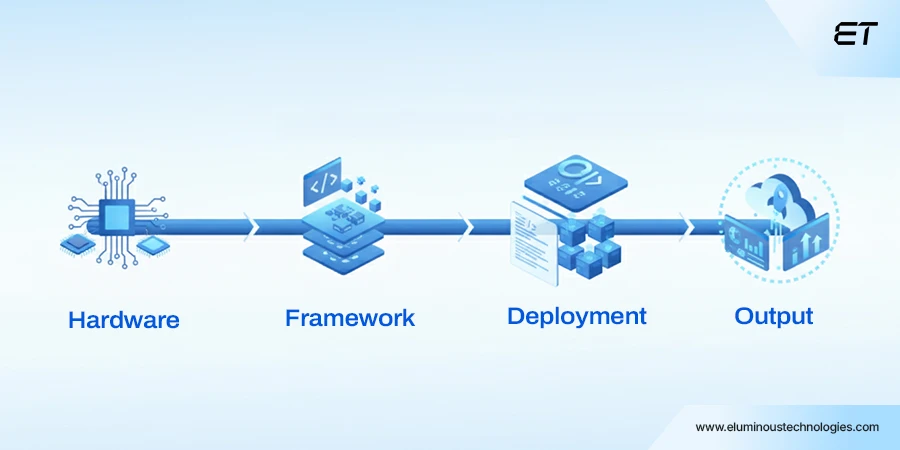

The Tech Stack Behind On-Device AI

For on-device AI to work seamlessly, the hardware, software, and model architecture must work in sync.

Let’s break down the three pillars of the on-device AI tech stack.

1. The Hardware Layer

At the core of on-device AI is specialized hardware built to handle neural workloads efficiently.

These chips process machine learning inference locally, without draining the battery or overheating the device.

Key players include:

- Apple Neural Engine (ANE): Powers Siri and on-device image recognition.

- Google Tensor SoC: Optimized for Pixel’s AI features, from voice typing to photo enhancements.

- Qualcomm Hexagon DSP & AI Engine: Enables AI acceleration across Android smartphones and IoT devices.

- MediaTek APU & Samsung Exynos AI Processor: Deliver edge inference for consumer and industrial devices.

In simpler terms, these processors make your device smart enough to think without connecting to the cloud.

2. The Software and Framework Layer

Software frameworks bridge the gap between your AI models and the underlying hardware. They allow developers to shrink massive cloud-trained models into mobile-friendly formats.

Popular frameworks include:

- TensorFlow Lite (since renamed LiteRT): Google’s go-to for optimizing deep learning models for mobile and embedded systems.

- Core ML: Apple’s framework for running models efficiently across iOS devices.

- PyTorch Mobile: Favored for flexibility and open-source control; ExecuTorch is its successor in the PyTorch ecosystem.

- ONNX Runtime Mobile: Ideal for cross-platform deployment with minimal latency.

Alongside these frameworks, Gemini Robotics AI models are gaining traction for robotics and multimodal intelligence. Together, the frameworks above form the software nervous system that powers edge intelligence.

3. The Deployment and Optimization Layer

Even with great hardware and software, on-device AI lives or dies by the quality of deployment. That’s where model compression, quantization, and pruning techniques come in.

Supporting tools like TensorRT, Apache TVM, and Edge Impulse help automate optimization pipelines, ensuring consistent performance across devices.

Overall, your AI initiative is only as strong as its ecosystem. Choosing the right combination of chip, framework, and optimization pipeline is a crucial decision.

Challenges and Trade-offs in On-Device AI

While on-device AI promises speed, privacy, and autonomy, those benefits come with their own set of trade-offs. Here are the big ones:

Hardware Fragmentation

Different devices, chipsets, and OS versions mean your AI model might perform beautifully on one smartphone and glitch on another.

So, maintaining consistent performance across hundreds of device variants is a challenge.

Strategic takeaway: Prioritize your target devices and build gradually outward, instead of chasing full compatibility on day one.

Model Compression vs. Accuracy

To make models run locally, teams use techniques like pruning and quantization.

But if you compress too much, the accuracy can dip. Keep the model too heavy, and the device overheats or drains its battery fast.

Strategic takeaway: Your optimization decisions are trade-offs between performance, energy efficiency, and user experience.
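The trade-off is easy to see with a toy example. The sketch below applies magnitude pruning (zeroing the smallest weights, one common pruning approach) at increasing sparsity levels and measures how far a single neuron’s output drifts from the unpruned baseline. The weights and inputs are invented for illustration.

```python
from typing import List


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    n_drop = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute weight.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(order[:n_drop])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


# Toy single-neuron layer; values are illustrative only.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.03]
inputs = [1.0] * len(weights)
baseline = dot(weights, inputs)

drifts = []
for sparsity in (0.25, 0.5, 0.75):
    pruned = prune_by_magnitude(weights, sparsity)
    drifts.append(abs(dot(pruned, inputs) - baseline))
    print(f"sparsity {sparsity:.0%}: output drift {drifts[-1]:.2f}")
```

In this toy case, removing half of the weights barely moves the output, but pushing to 75% starts cutting weights that matter and the drift jumps. Real models behave the same way in spirit, which is why compression levels should be validated against accuracy on target devices.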

Development Overhead and Talent Gap

On-device AI requires specialized expertise across ML, hardware optimization, and mobile engineering. Most organizations underestimate this learning curve and overspend in trial-and-error cycles.

Strategic takeaway: Partner with chipset vendors, AI software development providers, or system integrators early on.

Data Privacy and Compliance Complexity

Ironically, while on-device AI improves privacy by keeping data local, it also introduces new compliance questions. These include how federated learning updates are governed, how user consent is tracked, and how transparent model updates are.

Strategic takeaway: Make compliance part of your design principles from the start.

Maintenance and Monitoring at Scale

After deployment, millions of devices will run slightly different model versions and environments. So, updating and monitoring performance is challenging.

Strategic takeaway: Adopt a continuous optimization mindset. Build MLOps systems that track on-device behavior.

The Future of On-Device AI: Where Intelligence Truly Belongs

We’re entering an interesting phase of artificial intelligence, and on-device AI is a great example. From smartphones to cars, wearables to industrial sensors, this tech has the potential to shift from novelty to necessity.

The emergence of Gemini Robotics AI models is a clear signal of what’s next.

As data privacy, latency, and autonomy take center stage, your organization’s AI maturity will depend on how effectively you push intelligence to the edge.

And the businesses that understand this shift early can own the next decade of user trust, performance, and innovation. So, consider the core pillars, plan your project, and hire AI developers if you face a talent crunch.

Discover how on-device AI can redefine your digital ecosystem.

Frequently Asked Questions

1. What is On-Device AI, and how does it differ from cloud AI?

On-device AI runs machine learning models directly on smartphones, wearables, or IoT devices instead of relying on cloud servers. It reduces latency, enhances privacy, and enables real-time intelligence.

In contrast, cloud AI processes data remotely, which can slow down responsiveness and raise data security concerns.

2. Why should enterprises invest in On-Device AI?

On-device AI can lead to better user experiences, stronger data privacy compliance, and lower operational costs. It has the potential to reduce dependence on cloud infrastructure, making businesses more resilient and agile.

3. How does the Gemini Robotics AI model fit into On-Device AI?

The Gemini Robotics AI model represents the next generation of multimodal AI capable of handling text, vision, and sensor data locally. Its on-device capability allows for smarter robotics, autonomous systems, and real-time decision-making without cloud dependence.

4. What are the first steps to building an On-Device AI product?

Start by identifying a clear edge use case, choosing compatible hardware, and selecting the right frameworks. Then, use model optimization techniques to ensure efficient performance across devices.