Making AI-Powered Development Security Work for You

In the rush to adopt AI, many teams focus on speed and innovation. But often, security takes a back seat. The reality is stark: AI systems, by their very nature, introduce new weaknesses. From sensitive data leaks to model manipulation, the risks are real and growing.

This guide covers AI-powered development security in practical terms. You’ll learn how to identify risks, integrate security into your AI workflows, and build resilient systems.

Think of this blog as a roadmap for building AI responsibly. By the end, you’ll see that securing AI is a deliberate, ongoing practice rather than an afterthought.

Understanding Security Challenges in AI Development

AI doesn’t fail the way traditional software does. Beyond ordinary bugs, attackers can manipulate what a model learns and how it responds.

The attack surface of an AI system isn’t limited to code. It extends to:

- Data

- Models

- APIs

- User behavior

That’s what makes securing artificial intelligence a complex task.

So, you should first be aware of the most common security blind spots in AI-powered development.

Data Poisoning and Manipulation

Machine learning models are only as trustworthy as their training data. If attackers manage to inject malicious or misleading data into your datasets, your entire model can start producing unreliable outcomes.

Example: A fraud detection model trained with poisoned data might start labeling fraudulent transactions as safe.

Model Inversion and Extraction Attacks

These attacks turn your model’s own answers against it. In a model inversion attack, hackers query your model repeatedly until they can infer sensitive information about its training data. In a model extraction attack, they use the responses to replicate the model itself.

In sectors like healthcare or finance, this threat could mean leaking personal or confidential information through model outputs.

Adversarial Inputs

An adversarial input is a slightly modified piece of data designed to trick the model. For instance, a few pixel tweaks in an image can cause an AI vision system to misclassify a stop sign as a speed-limit sign.

This result is not great if you’re building self-driving software.
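To make the idea concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. The weights, bias, and input values are made up for illustration, and real attacks on vision models are more involved. The point is only that a small, bounded change per feature can flip a prediction.

```python
def sign(value: float) -> int:
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def predict(weights, bias, features):
    """Toy linear classifier: class 1 if the score is positive, else class 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def perturb_to_lower_score(weights, features, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to the input
    is the weight vector, so stepping against sign(weight) lowers the score.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical model parameters and input, chosen only for this illustration.
weights = [0.9, -0.4, 0.7]
bias = -0.5
original = [0.5, 0.2, 0.3]

adversarial = perturb_to_lower_score(weights, original, epsilon=0.05)

print(predict(weights, bias, original))     # 1
print(predict(weights, bias, adversarial))  # 0
```

No feature moved by more than 0.05, yet the predicted class changed.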

Third-Party and Supply Chain Risks

Most AI systems rely on the following:

- Open-source libraries

- APIs

- Pre-trained models

While these sources save time, they can also introduce vulnerabilities from unknown contributors or outdated dependencies.

If you identify these risks early, you can design controls that prevent costly breaches later.

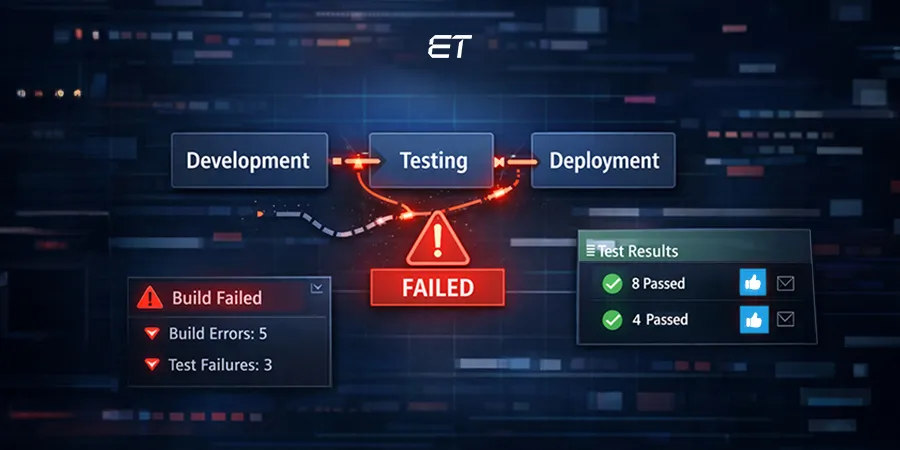

Building Security into the AI Lifecycle

Most teams treat AI-powered development security like a final QA step. However, that’s a dangerous approach.

In reality, AI security has to live and breathe through every stage of your development lifecycle. Otherwise, you’ll just chase vulnerabilities instead of preventing them.

Here’s how to embed security at every phase of your AI pipeline.

Secure Data Collection and Preparation

The AI lifecycle starts with data. Unfortunately, it also happens to be its weakest link. So, you should:

- Validate data sources: Use verified, auditable data pipelines. Avoid scraping unverified datasets.

- Apply encryption: Encrypt data both at rest and in transit. Mask personally identifiable information (PII) before it ever touches the model.

- Implement access control: Only authorized roles should access raw datasets. You should rotate credentials regularly and use logging to monitor data interactions.
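As a rough illustration of the first two points, the sketch below checks a dataset against a SHA-256 digest recorded when it was approved, and masks email addresses before the text goes any further. The function names, the sample data, and the email pattern are assumptions for this example. A real pipeline would cover more PII types than email.

```python
import hashlib
import hmac
import re

# Simple email pattern for illustration; it is not a full RFC 5322 matcher.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_dataset(data: bytes, expected_sha256: str) -> bool:
    """Check that a dataset still matches the digest recorded at approval time."""
    return hmac.compare_digest(sha256_hex(data), expected_sha256)


def mask_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


# Hypothetical dataset contents and the digest stored when it was reviewed.
raw = b"id,note\n1,contact alice@example.com\n"
trusted_digest = sha256_hex(raw)

print(verify_dataset(raw, trusted_digest))                 # True
print(verify_dataset(raw + b"tampered", trusted_digest))   # False
print(mask_emails("contact alice@example.com now"))        # contact [EMAIL] now
```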

Pro tip: Incorporate privacy-preserving machine learning (ML) techniques to minimize data exposure during model training.

Model Training and Validation Security

Simply put, AI systems can pick up biases or hidden vulnerabilities during model training. Here’s how you secure AI-powered development at this stage:

- Threat modeling: Identify potential attack vectors during model design (e.g., data poisoning, inversion, or backdoor attacks).

- Sandbox your training environment: Use isolated environments or containers to prevent external interference.

- Validation checkpoints: Continuously test the model during training to detect anomalies or drift that may indicate compromised data.

All in all, if your model’s learning process isn’t secure, everything downstream inherits that weakness.
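One simple way to picture a validation checkpoint is a check that flags any checkpoint whose held-out accuracy falls sharply below the best value seen so far. This is a sketch under assumptions: the function name, the 0.05 threshold, and the accuracy values are illustrative, and a sudden drop can have harmless causes too, so a flag should trigger investigation rather than an automatic verdict.

```python
def find_suspicious_checkpoints(accuracy_by_checkpoint, max_drop=0.05):
    """Return indices of checkpoints whose accuracy dropped sharply.

    A checkpoint is flagged when its accuracy is more than max_drop below
    the best accuracy observed at any earlier checkpoint.
    Assumes the list has at least one entry.
    """
    suspicious = []
    best_so_far = accuracy_by_checkpoint[0]
    for index, accuracy in enumerate(accuracy_by_checkpoint[1:], start=1):
        if best_so_far - accuracy > max_drop:
            suspicious.append(index)
        best_so_far = max(best_so_far, accuracy)
    return suspicious


# Hypothetical held-out accuracy measured after each training checkpoint.
history = [0.80, 0.84, 0.86, 0.78, 0.87]
flagged = find_suspicious_checkpoints(history)
print(flagged)  # [3]
```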

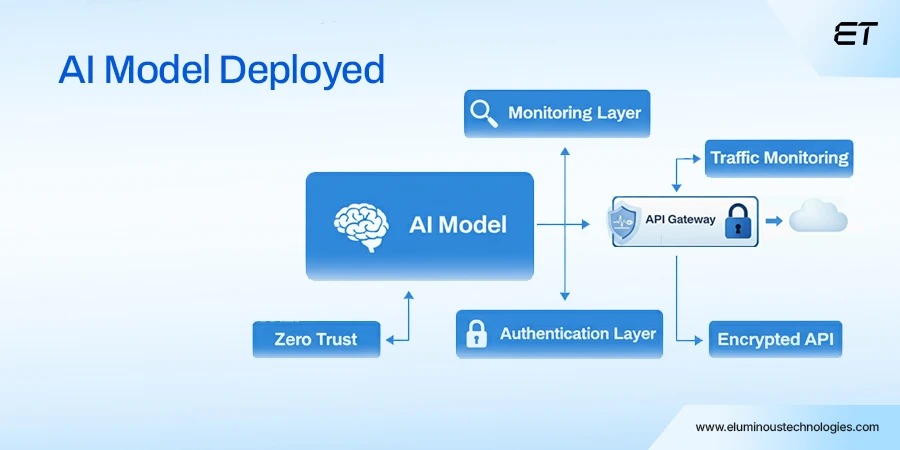

Secure Model Deployment

Deploying an AI model is like opening a gate. Once it’s live, it’s a target. So, to boost security, you can:

- Use API gateways: Protect model endpoints with authentication, throttling, and encryption.

- Monitor API traffic: Look for abnormal query patterns that may indicate an attempted extraction.

- Harden the container: Regularly update dependencies and remove unused packages to minimize exposure.

Also, you can implement a zero-trust architecture where every interaction requires validation.
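As one possible building block for per-request validation, the sketch below signs each request body with an HMAC and rejects requests that are tampered with or too old. The function names, the five-minute window, and the shared-secret setup are assumptions for illustration. A production gateway would typically combine this with TLS and an identity provider.

```python
import hashlib
import hmac
import time

MAX_SKEW_SECONDS = 300  # assumed replay window; tune for your environment


def sign_request(secret: bytes, body: bytes, timestamp: int) -> str:
    """Compute an HMAC-SHA256 signature over the timestamp and request body."""
    message = str(timestamp).encode("ascii") + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(secret, body, timestamp, signature, now=None) -> bool:
    """Accept a request only if it is recent and its signature matches."""
    current = int(time.time()) if now is None else now
    if abs(current - timestamp) > MAX_SKEW_SECONDS:
        return False  # too old or too far in the future: possible replay
    expected = sign_request(secret, body, timestamp)
    return hmac.compare_digest(expected, signature)


# Hypothetical shared secret and request, for demonstration only.
secret = b"demo-secret-do-not-use"
sent_at = 1_700_000_000
body = b'{"prompt": "classify this"}'
signature = sign_request(secret, body, sent_at)

print(verify_request(secret, body, sent_at, signature, now=sent_at + 10))          # True
print(verify_request(secret, b'{"prompt": "x"}', sent_at, signature, now=sent_at))  # False
print(verify_request(secret, body, sent_at, signature, now=sent_at + 1000))        # False
```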

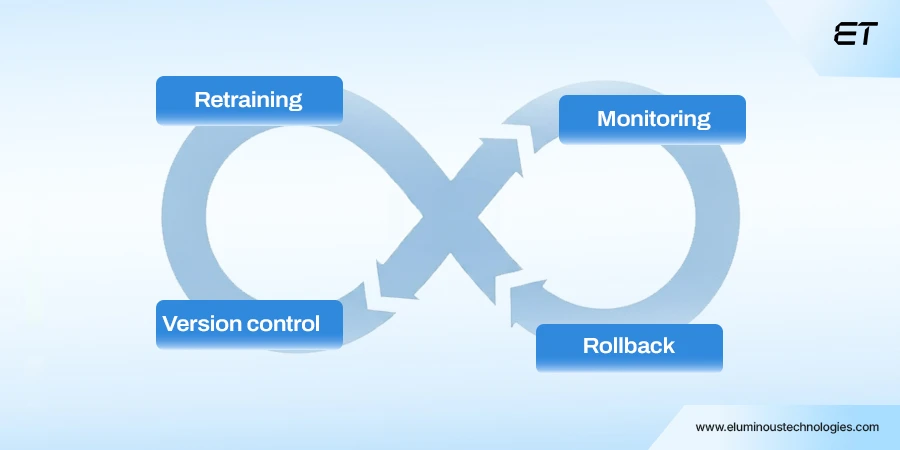

Continuous Monitoring and MLOps Integration

AI-powered development security shouldn’t end at deployment. It should evolve as the model does.

This is where MLOps security becomes crucial. You should:

- Automate retraining with verified data sources only.

- Monitor for data drift, performance drops, or unusual inputs.

- Integrate version control for both data and models to ensure safe rollbacks in the event of a breach.

Overall, by embedding security controls directly into your MLOps pipeline, you turn security into a continuous process.
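To illustrate the drift-monitoring step, here is a minimal sketch that compares a feature’s production values against its training baseline using the Population Stability Index (PSI). PSI is one of several possible drift metrics and is swapped in here as an example. The bin count, the smoothing constant, and the 0.25 alert threshold (a commonly quoted rule of thumb) are assumptions.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; larger values mean more drift."""
    low, high = min(baseline), max(baseline)
    width = (high - low) / bins
    if width == 0:
        width = 1.0  # all baseline values identical; avoid division by zero

    def bin_fractions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            index = min(max(index, 0), bins - 1)  # clamp out-of-range values
            counts[index] += 1
        # Add 0.5 to each bin so empty bins do not produce log(0).
        return [(c + 0.5) / (len(values) + 0.5 * bins) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical feature values: training baseline versus shifted production data.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(baseline, baseline))        # 0.0
print(population_stability_index(baseline, shifted) > 0.25)  # True
```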

MLOps differs from DevOps in several respects. Read our guide that explains and compares the two concepts.

Data Security and Privacy in AI Systems

Every dataset an AI system touches carries potential privacy, compliance, and ethical risks. A single mismanaged dataset can damage your organization’s reputation.

So, you should form a secure data strategy in AI-powered development. Here are the main steps to consider.

1. Protect Data at Every Touchpoint

From collection to training to inference, you should encrypt and anonymize data. Ensure you establish proper access controls as well. Consider the following practical tips:

- Encryption: Use AES-256 or an equivalent strong cipher for data at rest (storage), and TLS 1.2 or later for data in transit (APIs, network traffic).

- Anonymization: Remove identifiers wherever possible.

- Access Control: Implement role-based permissions and audit trails to monitor who interacts with the data.

Example: A healthcare AI model trained on anonymized data reduces re-identification risks while staying compliant with HIPAA or GDPR.
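The access-control point can be sketched as a small permission check that records every decision in an audit trail. The role names, actions, and in-memory log below are hypothetical. A real system would rely on your identity provider and write to tamper-resistant storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping for a data platform.
ROLE_PERMISSIONS = {
    "data_engineer": {"read_raw", "write_raw"},
    "data_scientist": {"read_anonymized"},
    "auditor": {"read_audit_log"},
}


@dataclass
class AccessController:
    """Checks role-based permissions and logs every decision."""

    audit_log: list = field(default_factory=list)

    def is_allowed(self, user: str, role: str, action: str) -> bool:
        # Unknown roles get an empty permission set, so they are denied.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed


controller = AccessController()
print(controller.is_allowed("dana", "data_engineer", "read_raw"))  # True
print(controller.is_allowed("sam", "data_scientist", "read_raw"))  # False
print(len(controller.audit_log))                                   # 2
```

Denied attempts are logged as well, since they are often the most useful entries during an investigation.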

2. Adopt Privacy-Preserving Machine Learning (PPML)

Techniques under the PPML umbrella allow you to train or use AI models without exposing raw data. Some powerful approaches include:

- Federated Learning: You train models locally on devices, then aggregate only the resulting model updates. This tactic ensures the raw, sensitive data never leaves the source.

- Differential Privacy: In this technique, you add calibrated noise to datasets or model outputs. This method obscures individual data points while preserving overall patterns.

- Homomorphic Encryption: This PPML tactic allows computation on encrypted data. So, the system running the model never needs to see the plaintext content.

These methods are quickly becoming the gold standard for security and data protection in AI-powered development, especially in regulated industries.
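To show what adding controlled noise looks like in practice, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names and sample data are illustrative assumptions, and Python’s `random` module is not suitable for production-grade differential privacy. Treat this as a teaching aid, not a vetted implementation.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of values matching predicate.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon. A smaller epsilon
    means more noise and stronger privacy.
    """
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical patient ages; the true count of ages >= 40 is 3.
ages = [23, 35, 41, 52, 67]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5)
print(noisy)  # a value near 3; it varies from run to run
```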

3. Compliance and Governance

AI systems are increasingly under scrutiny from regulators, particularly in industries such as healthcare, finance, and education.

So, a standard practice is to stay aligned with regulations such as GDPR, CCPA, and HIPAA, along with standards such as ISO/IEC 23894 for AI risk management. Here are some additional pointers to consider:

- Conduct Data Protection Impact Assessments (DPIAs) before launching new AI products.

- Maintain an AI data governance framework.

- Track data lineage (origin, usage, and deletion).

Without this step, you can’t prove compliance or accountability.

Speaking of compliance, the latest EU AI Act is here. Our blog covers all its crucial details.

4. Human Oversight and Transparency

Security in AI is also cultural, which means you should develop an AI-ready work culture:

- Ensure developers, data scientists, and compliance officers work together with visibility into the full data lifecycle.

- Keep humans in the loop for sensitive decisions.

- Use explainable AI (XAI) models whenever possible, allowing you to justify outcomes during audits.

Transparency strengthens user trust and reduces the impact of potential breaches.

A secure AI ecosystem respects privacy by design, enforces compliance by default, and ensures neither people nor systems overstep their boundaries.

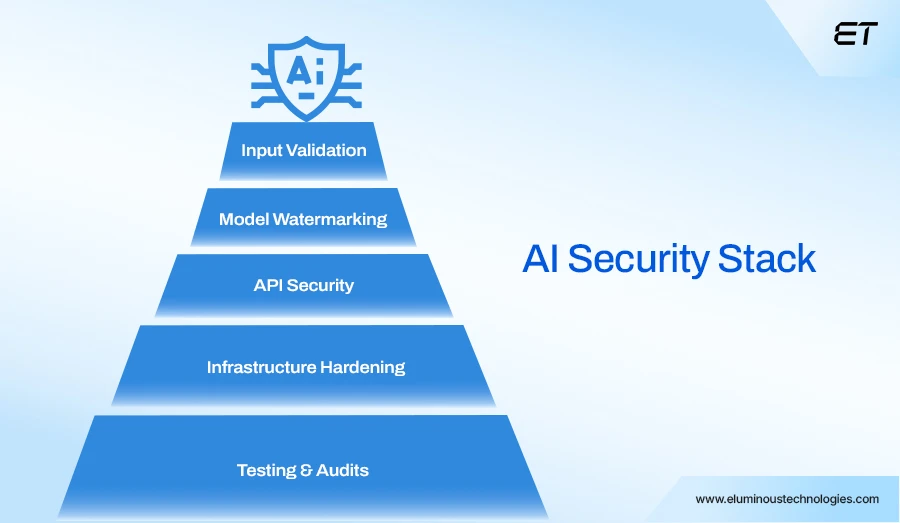

Threat Mitigation Strategies for AI-Powered Development Security

Understanding risks is one thing; defending against them is another. Here’s how you can strengthen your AI-powered development security with proven mitigation strategies.

1. Validate Inputs and Detect Anomalies

AI systems act on the data they receive, so incorrect or tampered data leads to incorrect behavior. To defend against adversarial or malicious inputs, you should:

- Use strict input validation rules across all endpoints.

- Integrate anomaly detection systems that flag outlier data patterns.

- Continuously retrain detection models with verified data to adapt to new attack types.

This approach ensures your AI doesn’t accept or act on tampered information.
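The first two points above can be sketched as a strict schema check followed by a simple statistical outlier test. The field names, allowed currencies, amount limit, and z-score threshold are assumptions for this example. Dedicated anomaly detection systems use far richer signals.

```python
import math
import statistics

ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # hypothetical allow-list
MAX_AMOUNT = 1_000_000                      # hypothetical business limit


def validate_transaction(payload: dict) -> list:
    """Return a list of validation errors; an empty list means the input is accepted."""
    errors = []
    amount = payload.get("amount")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        errors.append("amount must be a number")
    elif not math.isfinite(amount) or amount <= 0 or amount > MAX_AMOUNT:
        errors.append("amount out of range")
    if payload.get("currency") not in ALLOWED_CURRENCIES:
        errors.append("unsupported currency")
    return errors


def is_outlier(value: float, history, threshold: float = 3.0) -> bool:
    """Flag a value that lies more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(history)
    spread = statistics.pstdev(history)
    if spread == 0:
        return value != mean
    return abs(value - mean) / spread > threshold


recent_amounts = [20, 22, 19, 21, 23, 20, 22]

print(validate_transaction({"amount": 25.0, "currency": "USD"}))  # []
print(validate_transaction({"amount": "25", "currency": "USD"}))  # ['amount must be a number']
print(is_outlier(500, recent_amounts))                            # True
print(is_outlier(21, recent_amounts))                             # False
```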

2. Watermark and Monitor Models

Model theft and replication are rising threats in AI-powered development. To counter them:

- Add invisible watermarks or fingerprints to your model outputs.

- Track model usage and detect unauthorized API calls.

- Maintain version control and usage logs to quickly identify and trace potential leaks.

Simply put, model watermarking strengthens overall AI security posture.

3. Secure API Keys and Endpoints

APIs are the front door to your AI models. This aspect also makes them prime targets for attack.

To keep them secure:

- Rotate API keys regularly and never embed them in client-side code.

- Implement OAuth 2.0 or token-based authentication.

- Use rate limiting and throttling to block brute-force or model extraction attempts.

All in all, your goal is to make unauthorized access practically impossible.
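Rate limiting is often implemented with a token bucket, sketched below under simple assumptions: one bucket per API key, a fixed capacity, and a steady refill rate. The class and parameter names are illustrative. In production this usually lives in the API gateway rather than in application code.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then a steady `refill_per_second` rate."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the caller should be throttled."""
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Demonstration with a fake clock: 3-request burst, then 1 request per second.
fake_time = [0.0]
bucket = TokenBucket(capacity=3, refill_per_second=1.0, clock=lambda: fake_time[0])

burst = [bucket.allow() for _ in range(4)]
print(burst)  # [True, True, True, False]

fake_time[0] = 2.0  # two seconds later, two tokens have been refilled
after_wait = [bucket.allow() for _ in range(3)]
print(after_wait)  # [True, True, False]
```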

4. Harden Your Infrastructure

Security isn’t just about the model. It’s also about where it lives. Here’s your action plan:

- Regularly patch servers and dependencies.

- Limit permissions in your cloud environment to follow the principle of least privilege.

- Use container scanning tools (like Trivy or Clair) to detect vulnerable packages early.

Remember, a secure foundation makes every layer of your AI ecosystem more resilient.

5. Test, Audit, and Improve

AI security isn’t static. You should constantly test your defences the way attackers would.

- Conduct regular penetration testing and red-teaming exercises.

- Perform bias and vulnerability audits before every major release.

- Document and review incidents to strengthen future AI-powered development security strategies.

Think of this as continuous improvement for your AI defences.

Real-World Case Studies in AI-Powered Development Security

The best way to understand the importance of AI-powered development security is to see it in action. In this section, we’ll look at two real-world AI security incidents.

How Vercel Secured Its AI SDK Playground

Vercel’s AI SDK Playground, designed to help developers test and integrate AI models, experienced a surge in malicious activity shortly after its launch. The open interface made it an easy target for bot abuse, prompt injection, and Denial-of-Service (DoS) attacks.

At one point, 84% of Vercel’s traffic was automated bot traffic. A huge cause of concern, right?

To regain control, they partnered with Kasada, a cybersecurity firm specializing in AI application security. Together, they implemented:

- Advanced bot detection and filtering

- Strict rate limiting on API endpoints

- Prompt injection detection

- Enhanced authentication mechanisms

The result? A massive drop in malicious traffic and a measurable improvement in application stability and response time.

This case shows that securing the entry points of AI systems is as critical as protecting data or models. Without these defences, even the smartest AI can be brought to its knees. You can read this intriguing case study to dig deeper.

Microsoft EchoLeak Incident: A Lesson in AI-Powered Development Security

In 2025, a vulnerability named EchoLeak (CVE-2025-32711) rocked the enterprise AI world. Researchers discovered a zero-click prompt injection exploit in Microsoft 365 Copilot. Here, attackers could send a single malicious email that silently triggered Copilot to leak sensitive internal data.

The attack slipped through multiple layers of Microsoft’s defenses, including their XPIA classifier and link-redaction systems. In short, the exploit used the AI’s own logic against itself.

Here’s why this incident is a wake-up call for anyone building AI systems:

- AI doesn’t just process data; it interprets instructions. Even a harmless-looking email can become a vector for malicious prompts.

- Security in AI isn’t about hardening endpoints alone.

The more integrated your AI becomes with enterprise data and tools, the greater your exposure. Here’s the link to this incident.

Building a Secure Future for AI-Powered Development

AI is no longer just a tool; it has become a core part of modern software development. With that power comes responsibility. As we’ve seen through real-world examples (from Vercel’s AI SDK Playground to Microsoft’s EchoLeak incident), security gaps in AI workflows can have far-reaching consequences.

The key takeaway is straightforward: AI-powered development security must be built into every stage of the AI lifecycle. From collecting and validating data, through training and testing models, to deploying and monitoring them in production, security must be deliberate, continuous, and proactive.

By embedding strong security practices, monitoring intelligently, and learning from real incidents, you can use AI confidently. For professional-grade assistance, you can always explore our AI development services and hire our experts.

Over two decades, we’ve tested 250+ projects. Now, with AI on the rise, we have a dedicated team of 15+ AI developers ready to augment your staff.

Frequently Asked Questions

1. What is AI-powered development security?

It’s the practice of protecting AI systems at every stage against attacks and vulnerabilities. This strategy ensures your AI remains safe, reliable, and compliant.

2. Why is securing AI-powered development important?

AI systems handle sensitive data and make critical decisions, which makes them an attractive target for attacks. Proper security prevents breaches, protects intellectual property, and keeps users’ trust.

3. What are common threats to AI-powered systems?

The most prominent AI threats are data poisoning, model theft, adversarial inputs, and API vulnerabilities. You should identify these early and embed security throughout the AI lifecycle.

4. How can I implement AI-powered development security in my projects?

Integrate security at every stage: secure data, encrypt models, validate inputs, and monitor deployed systems. Remember, continuous vigilance turns security from a checklist into an ongoing practice.